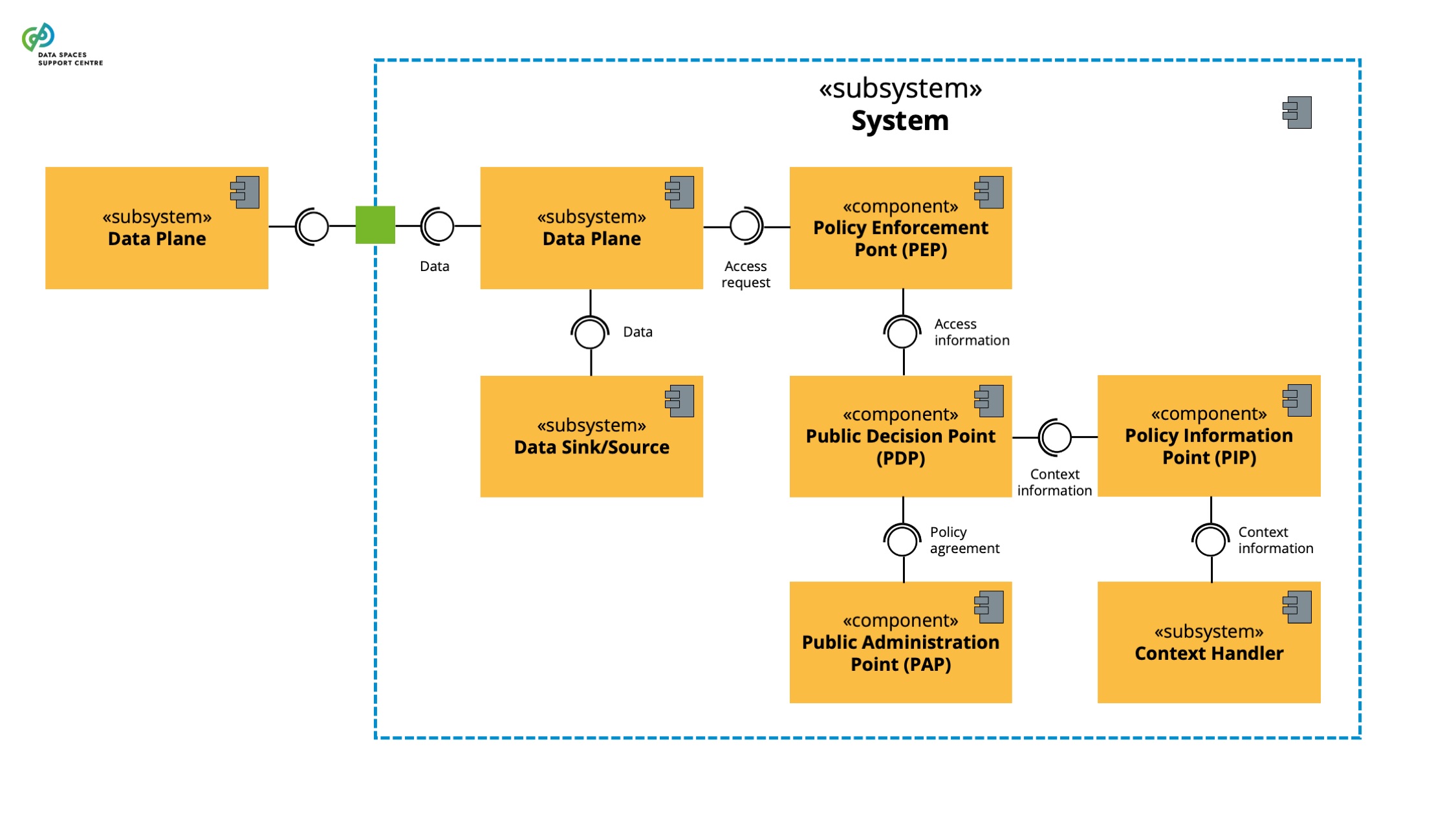

| Access Control

Sources (vsn) :

- Access & Usage Policies Enforcement (bv20)

- Access & Usage Policies Enforcement (bv30)

|

Systems and policies that regulate who can access specific data resources and under what conditions. |

| Accreditation

Sources (vsn) :

- Identity & Attestation Management (bv30)

- Trust Framework (bv30)

|

third-party attestation related to a conformity assessment body, conveying formal demonstration of its competence, impartiality and consistent operation in performing specific conformity assessment activities (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

| Accreditation Body

Source (vsn) : Trust Framework (bv30)

|

Authoritative body that performs accreditation (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

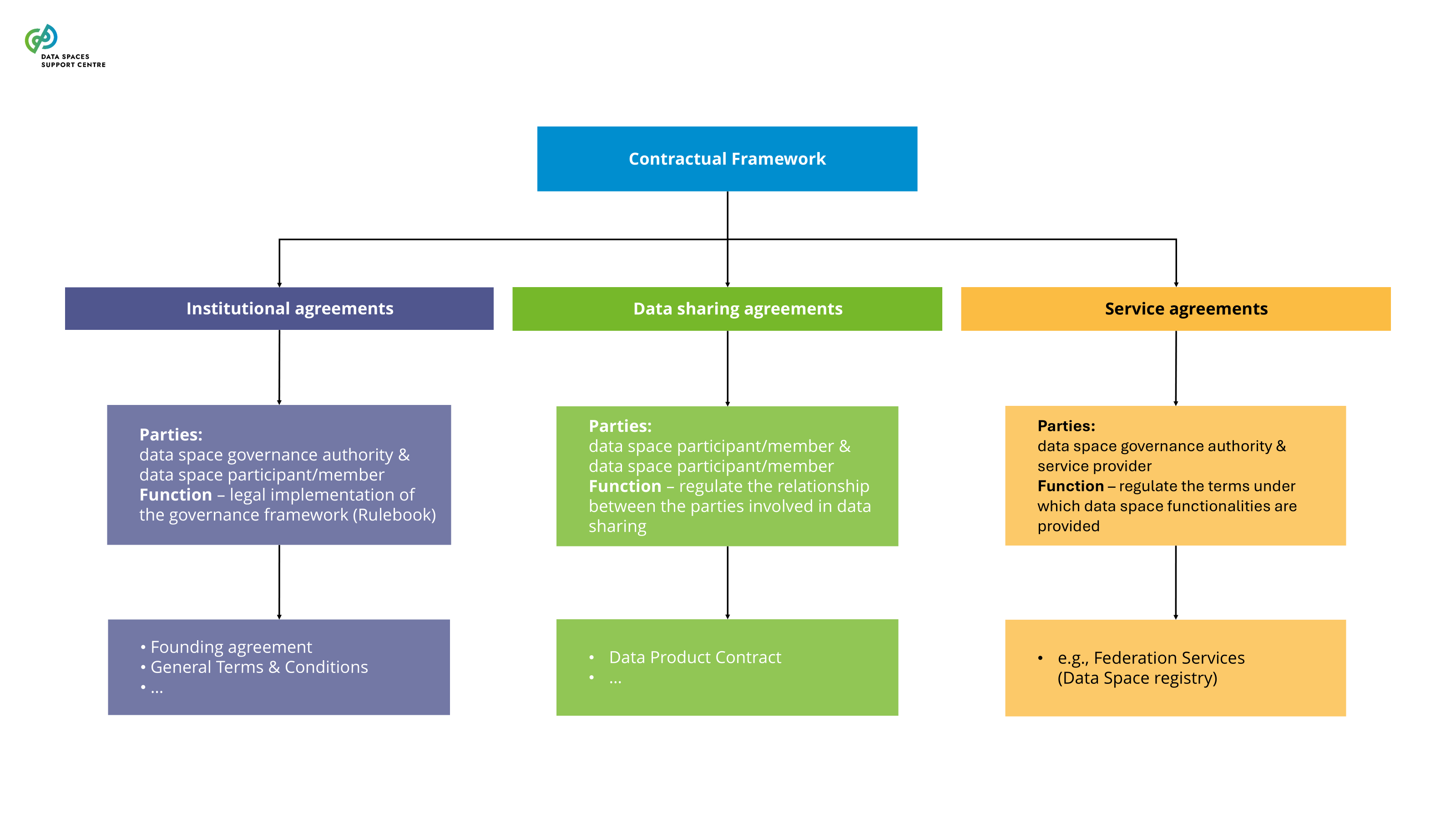

| Agreement

Source (vsn) : Contractual Framework (bv30)

|

A contract that states the rights and duties (obligations) of parties that have committed to (signed) it in the context of a particular data space. These rights and duties pertain to the data space and/or other such parties.

Note : This term was automatically generated as a synonym for: data-space-agreement

|

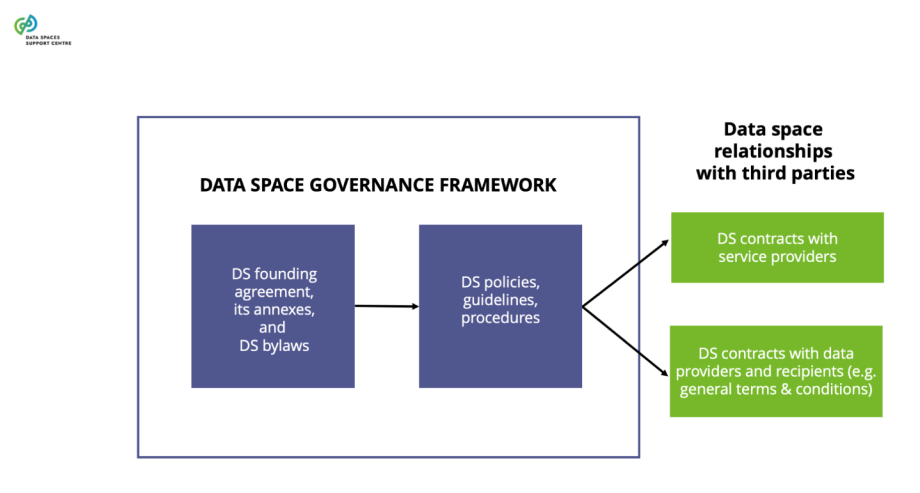

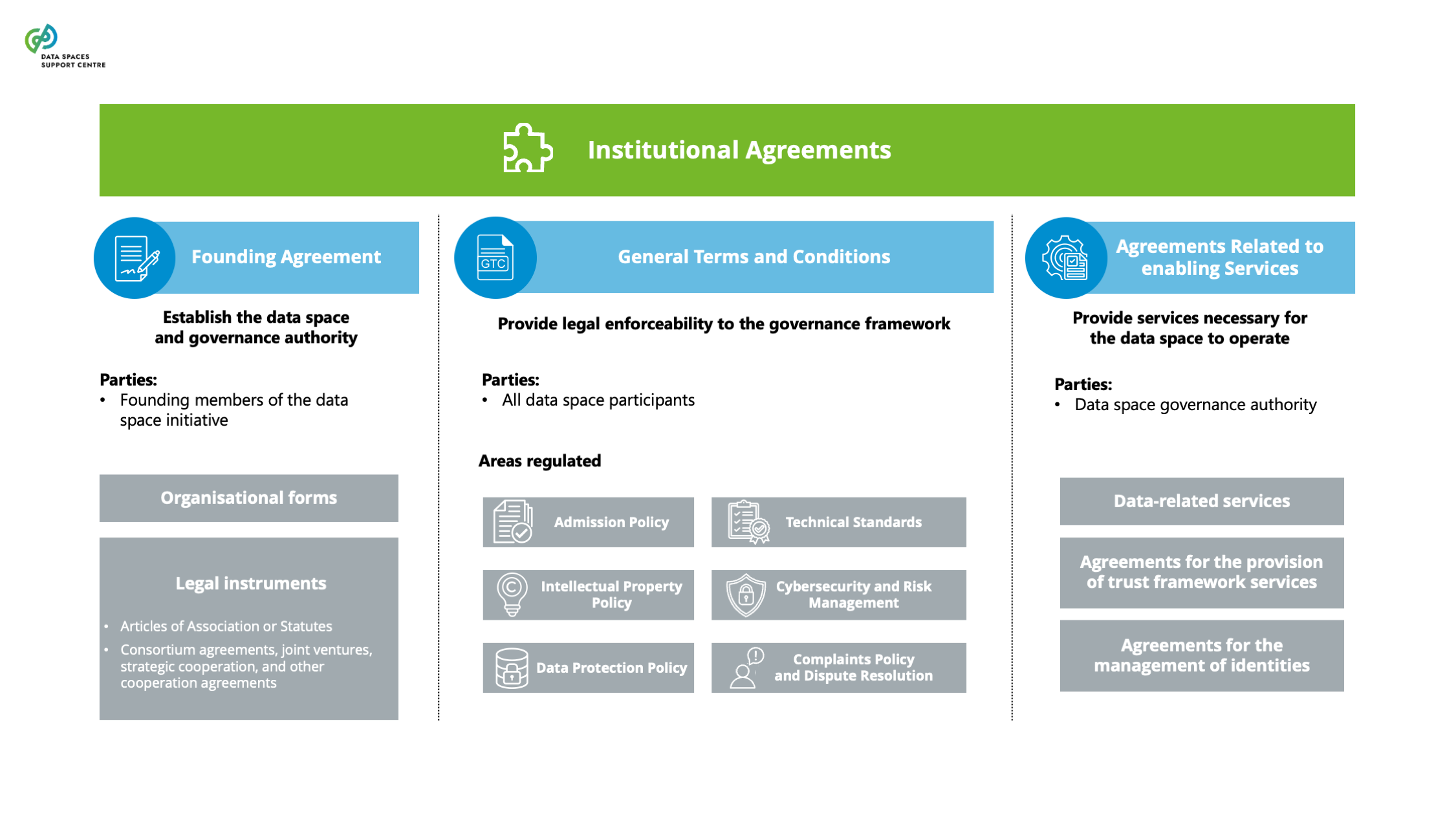

| Agreements Related To Enabling Services

Source (vsn) : Contractual Framework (bv20)

|

These agreements are entered into by the data space governance authority to provide the services necessary for the data space to operate. They can be classified as data-related services, agreements for the provision of trust framework services, and agreements for the management of identities. |

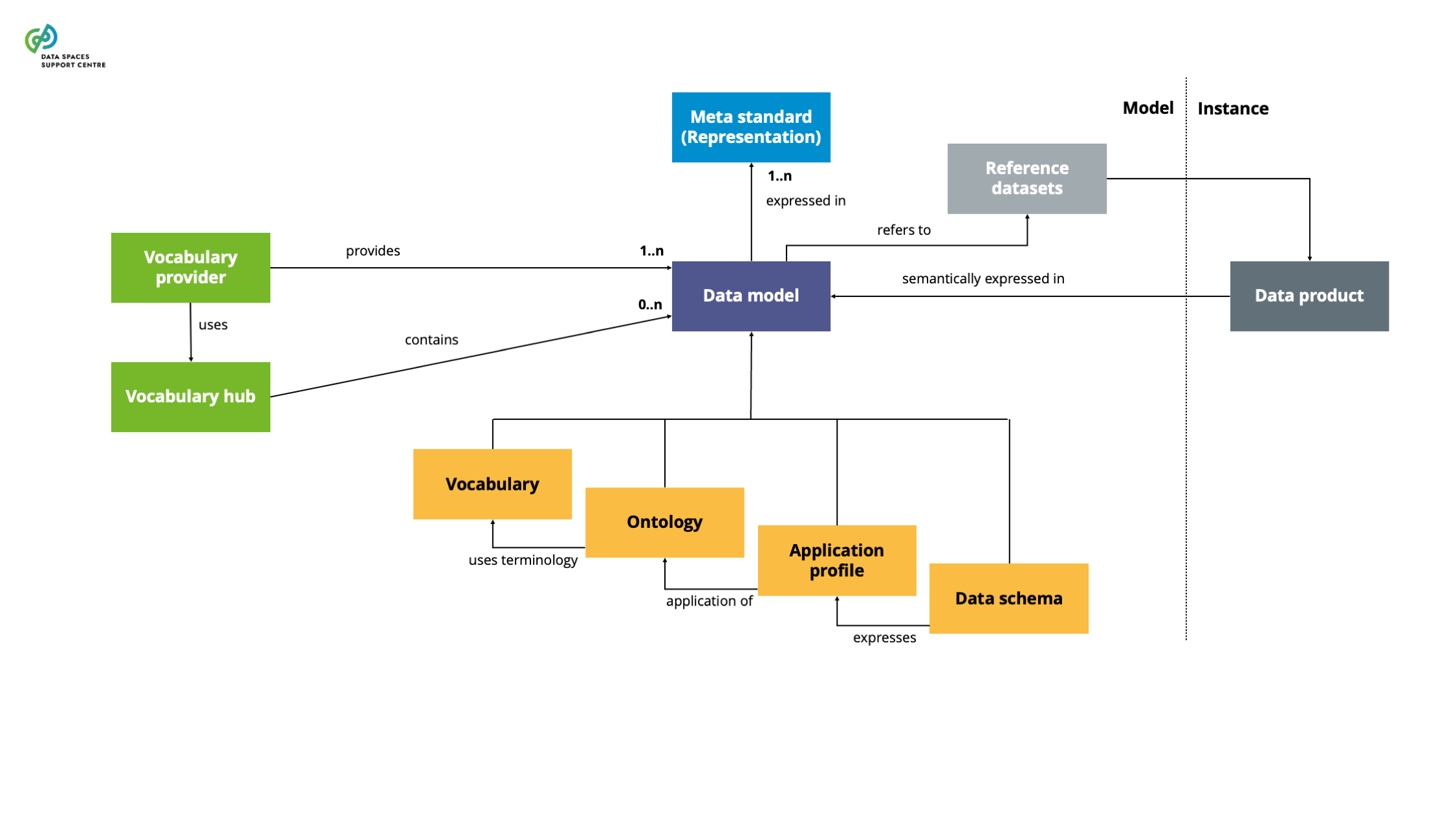

| Application Profile

Sources (vsn) :

- Data Models (bv20)

- Data Models (bv30)

|

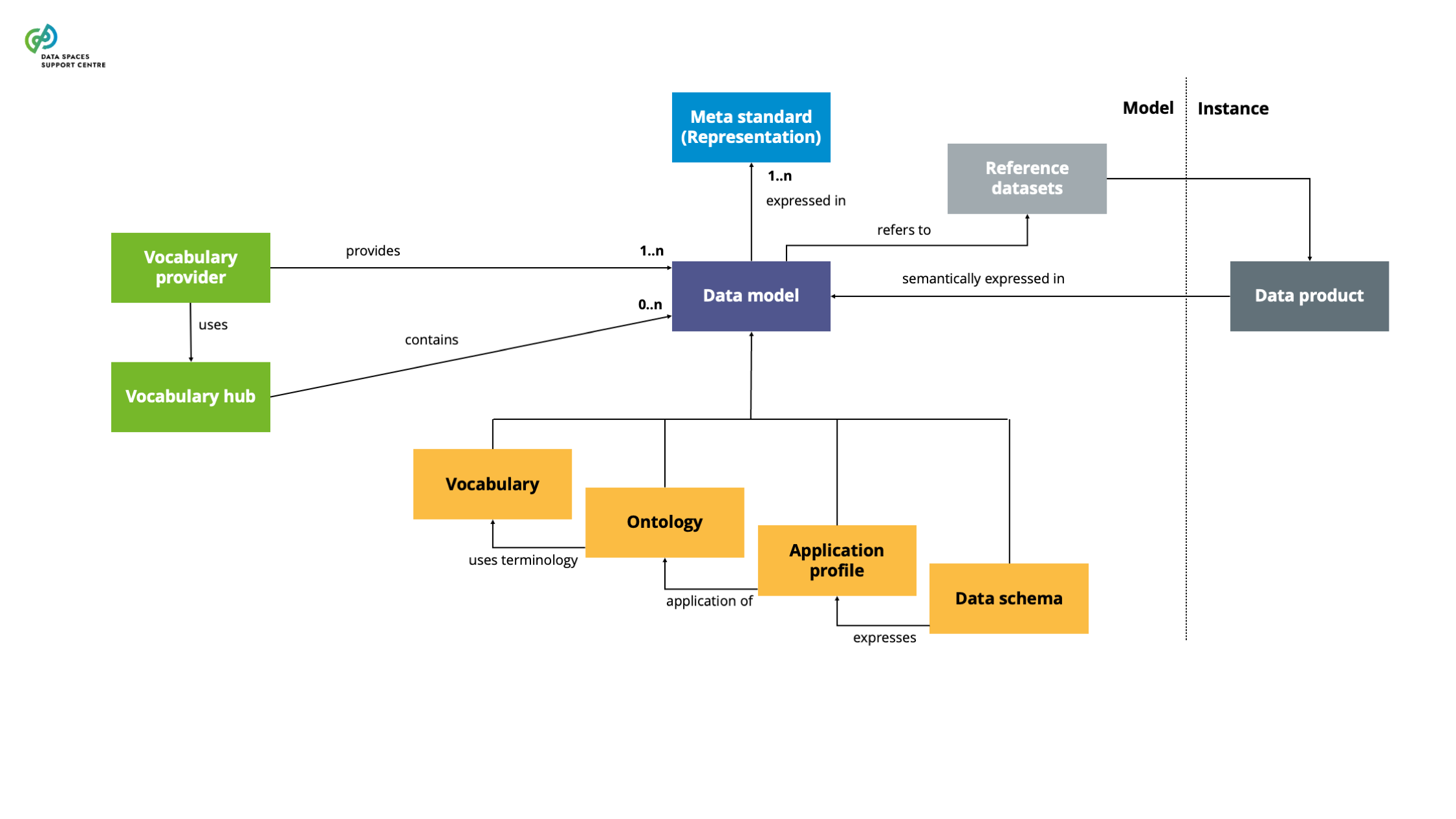

A data model that specifies the usage of information in a particular application or domain, often customised from existing data models (e.g., ontologies) to address specific application needs and domain requirements. |

| Assurance

Source (vsn) : 8 Identity and Trust (bv20)

|

An artefact that helps parties make trust decisions, such as certificates, commitments, contracts, warranties, etc. |

| Assurance

Source (vsn) : 8 Identity and Trust (bv30)

|

An artefact that helps parties make trust decisions about a claim, such as certificates, commitments, contracts, warranties, etc.

Explanatory Text : Also defined as: grounds for justified confidence that a claim has been or will be achieved [ISO/IEC/IEEE 15026-1:2019, 3.1.1]

|

| Attestation

Sources (vsn) :

- 8 Identity and Trust (bv30)

- Identity & Attestation Management (bv30)

- Trust Framework (bv30)

|

Issue of a statement, based on a decision, that fulfilment of specified requirements has been demonstrated (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

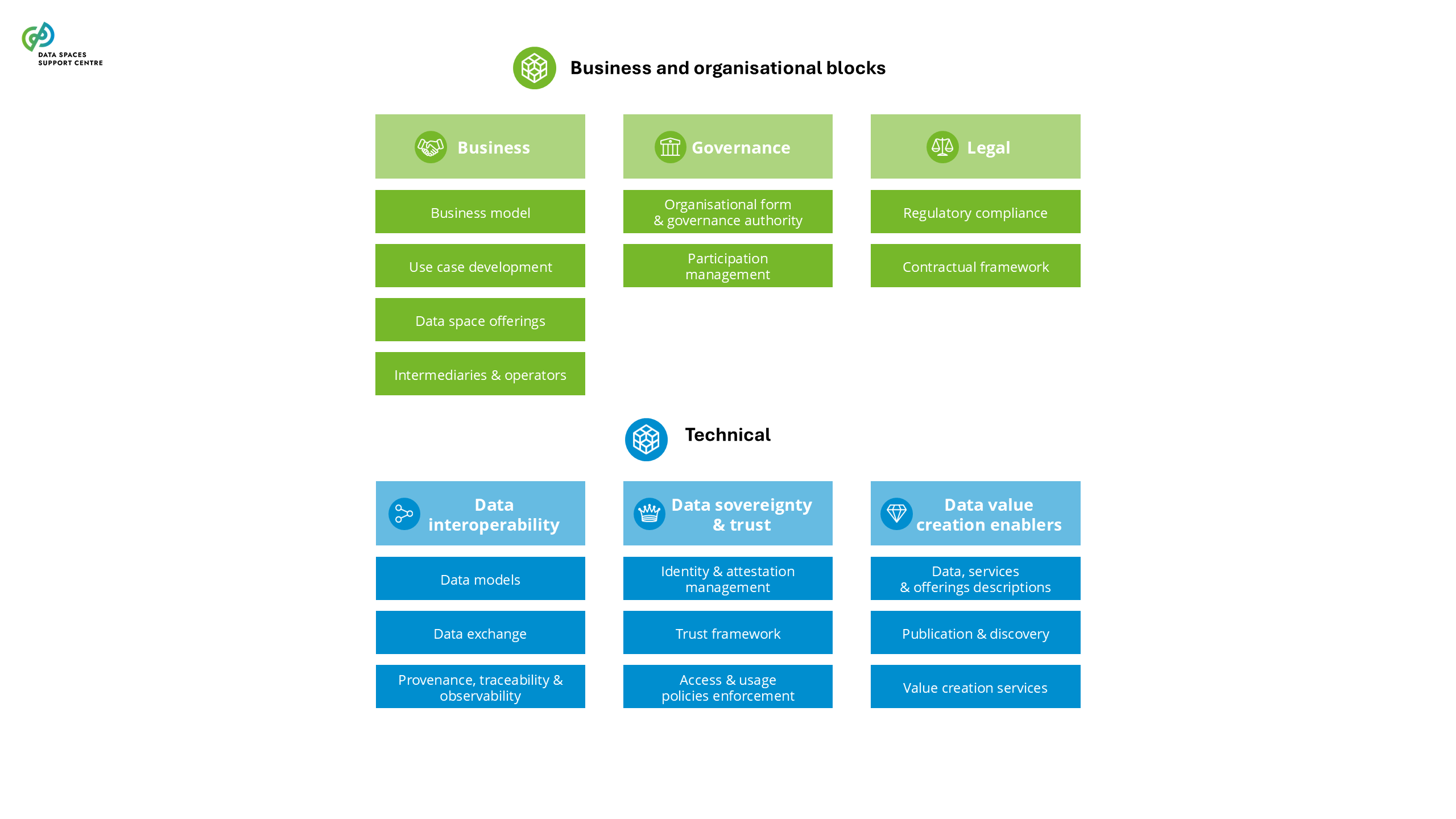

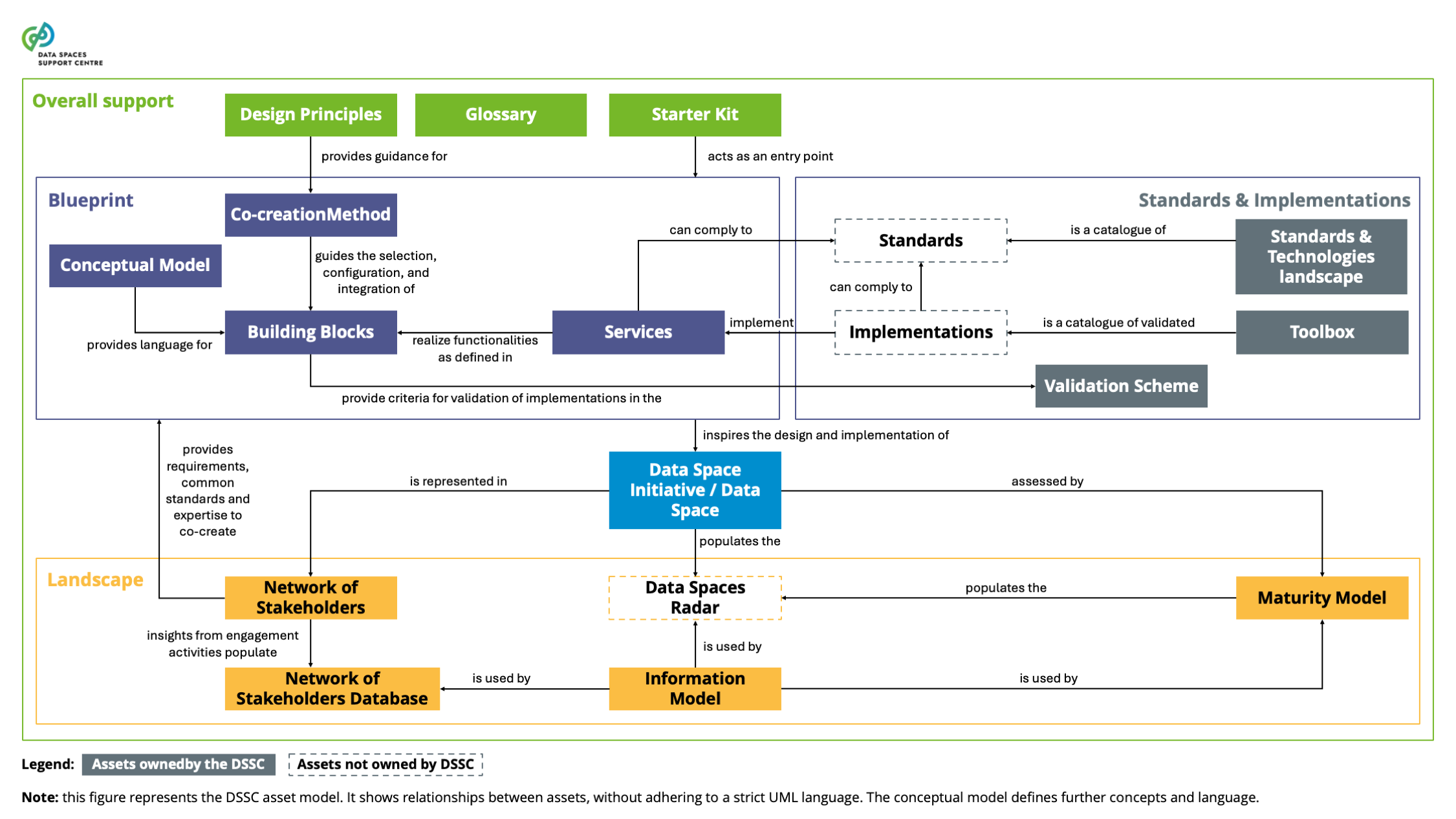

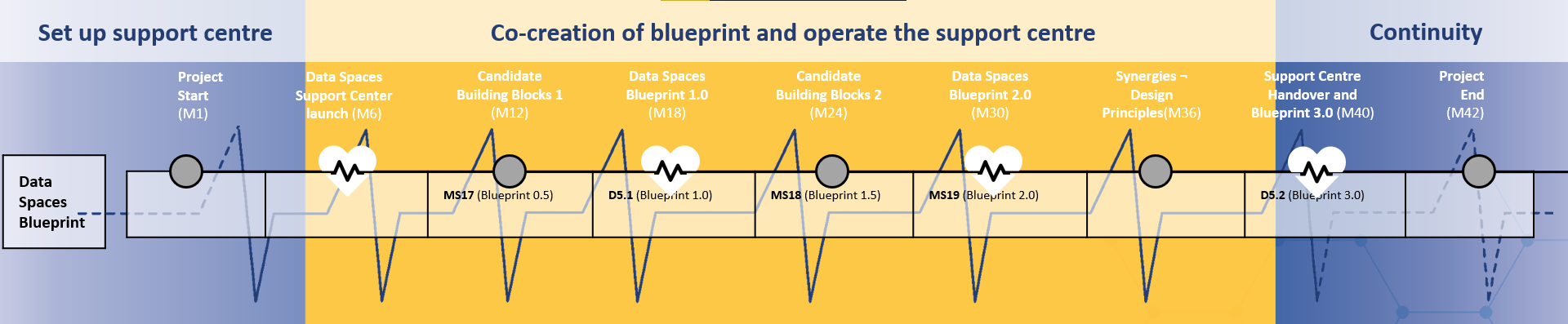

| Blueprint

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

A consistent, coherent and comprehensive set of guidelines to support the implementation, deployment and maintenance of data spaces.

Explanatory Text : The blueprint contains the conceptual model of data space, data space building blocks, and recommended selection of standards, specifications and reference implementations identified in the data spaces technology landscape.

Note : This term was automatically generated as a synonym for: data-spaces-blueprint

|

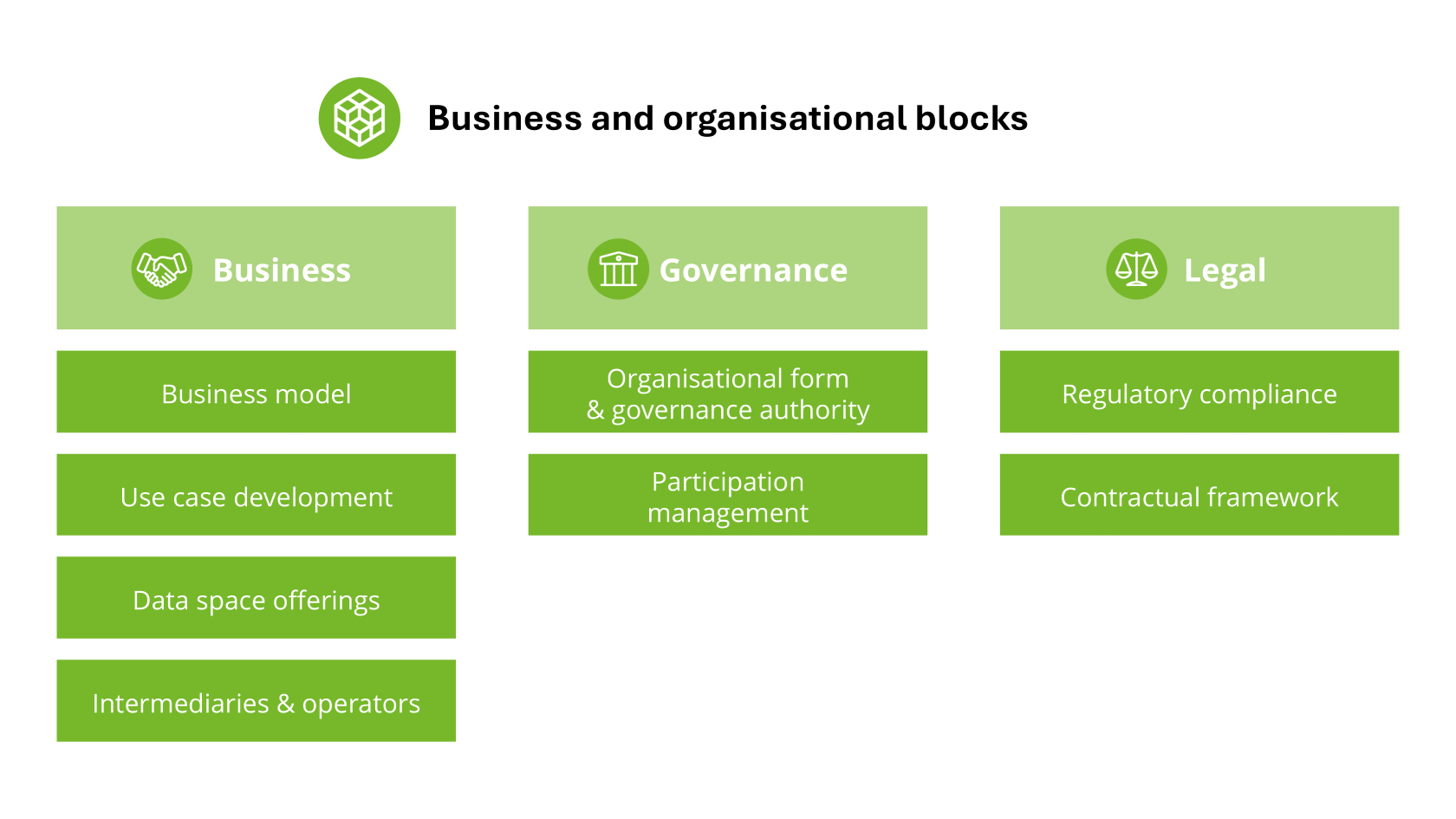

| Building Block

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

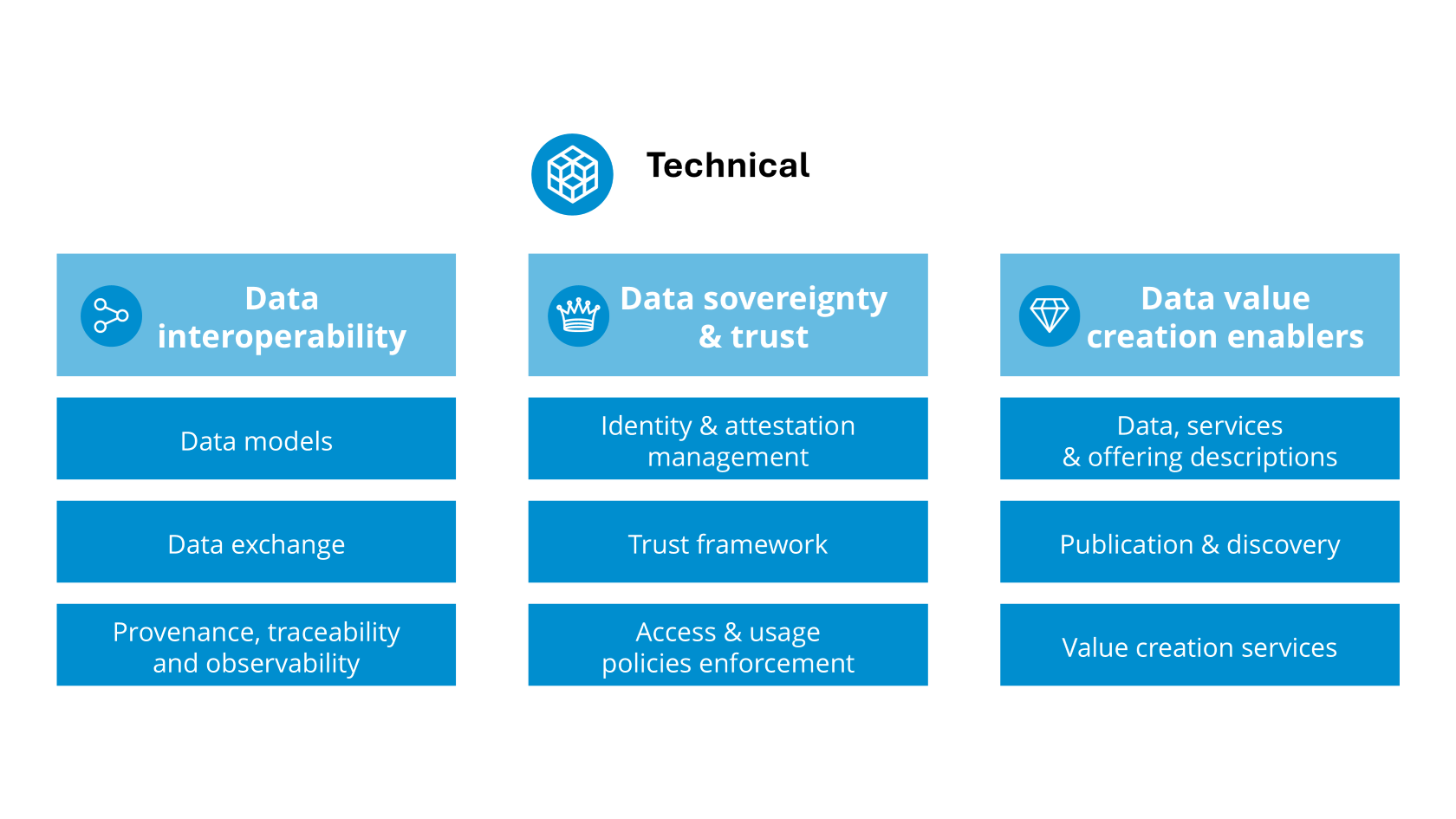

A description of related functionalities and/or capabilities that can be realised and combined with other building blocks to achieve the overall functionality of a data space.

Explanatory Texts :

- In the data space blueprint the building blocks are divided into organisational and business building blocks and technical building blocks.

- In many cases, the functionalities are implemented by Services

Note : This term was automatically generated as a synonym for: data-space-building-block

|

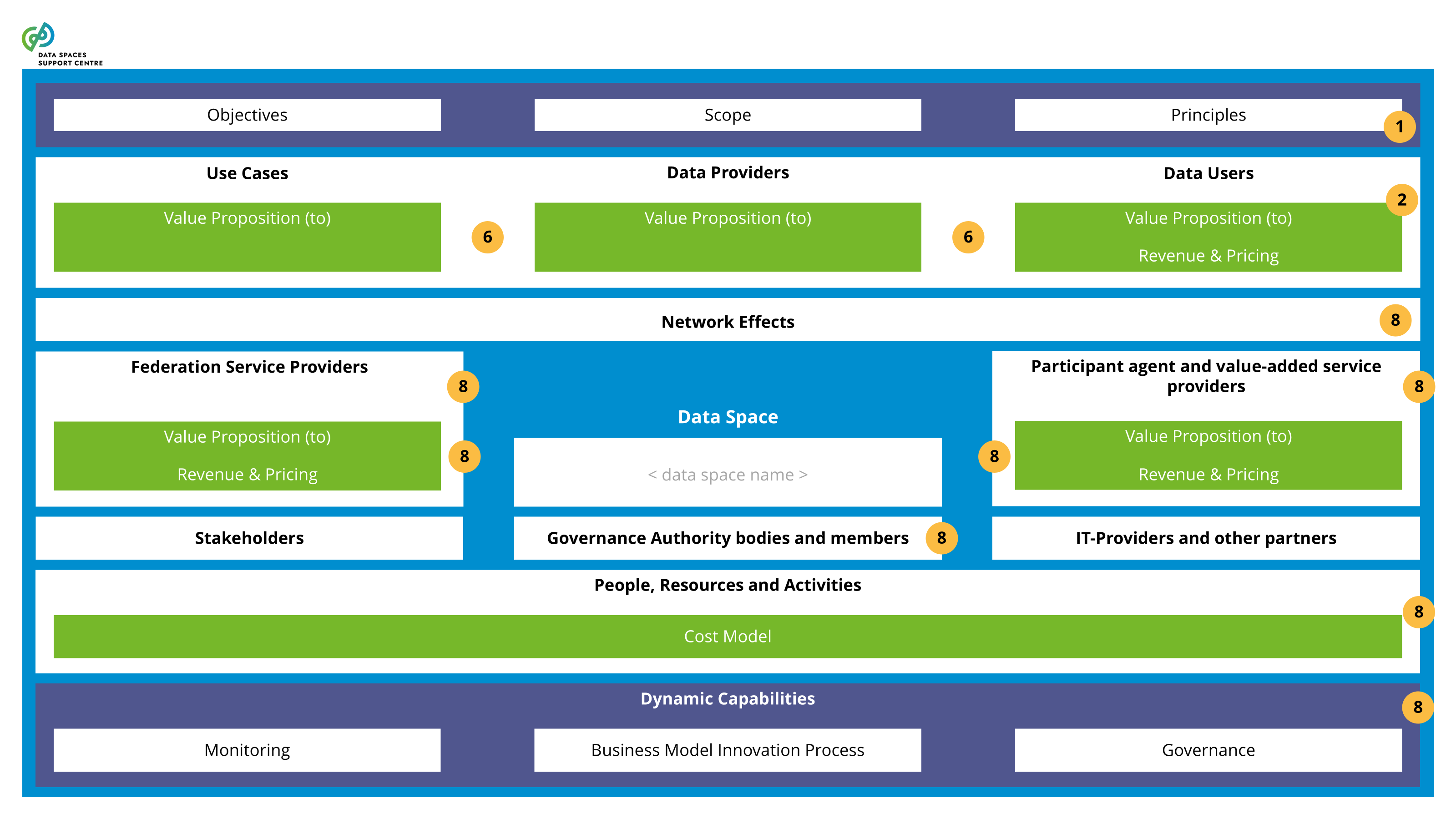

| Business Model

Source (vsn) : Business Model (bv30)

|

A description of the way an organisation creates, delivers, and captures value. Such a description typically includes for whom value is created (customer) and what the value proposition is.Typically, a tool called business model canvas is used to describe or design a business model, but alternatives that are more suitable for specific situations, such as data spaces, are available. |

| Candidate

Source (vsn) : Participation Management (bv30)

|

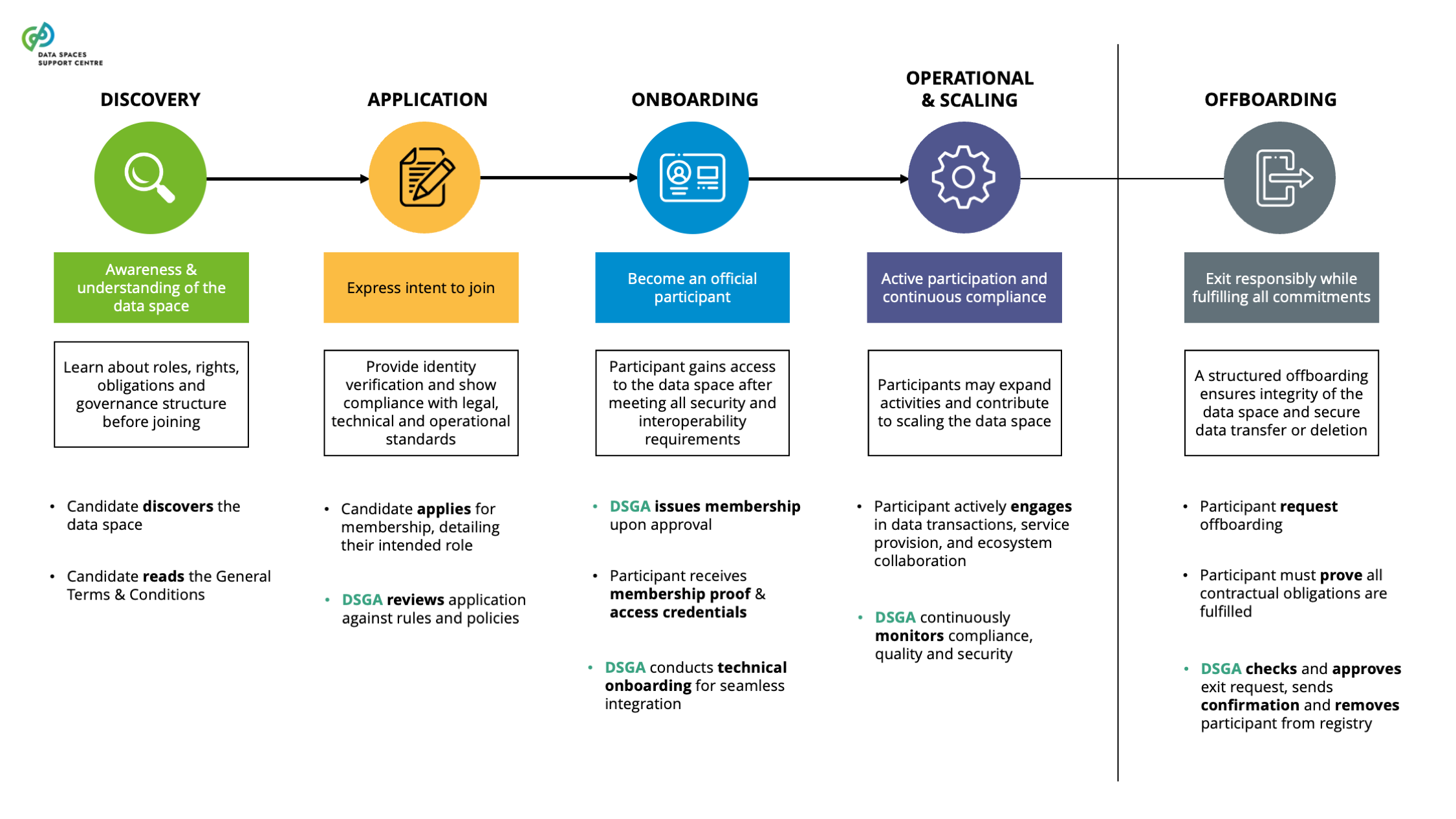

A party interested in joining a data space as a participant. |

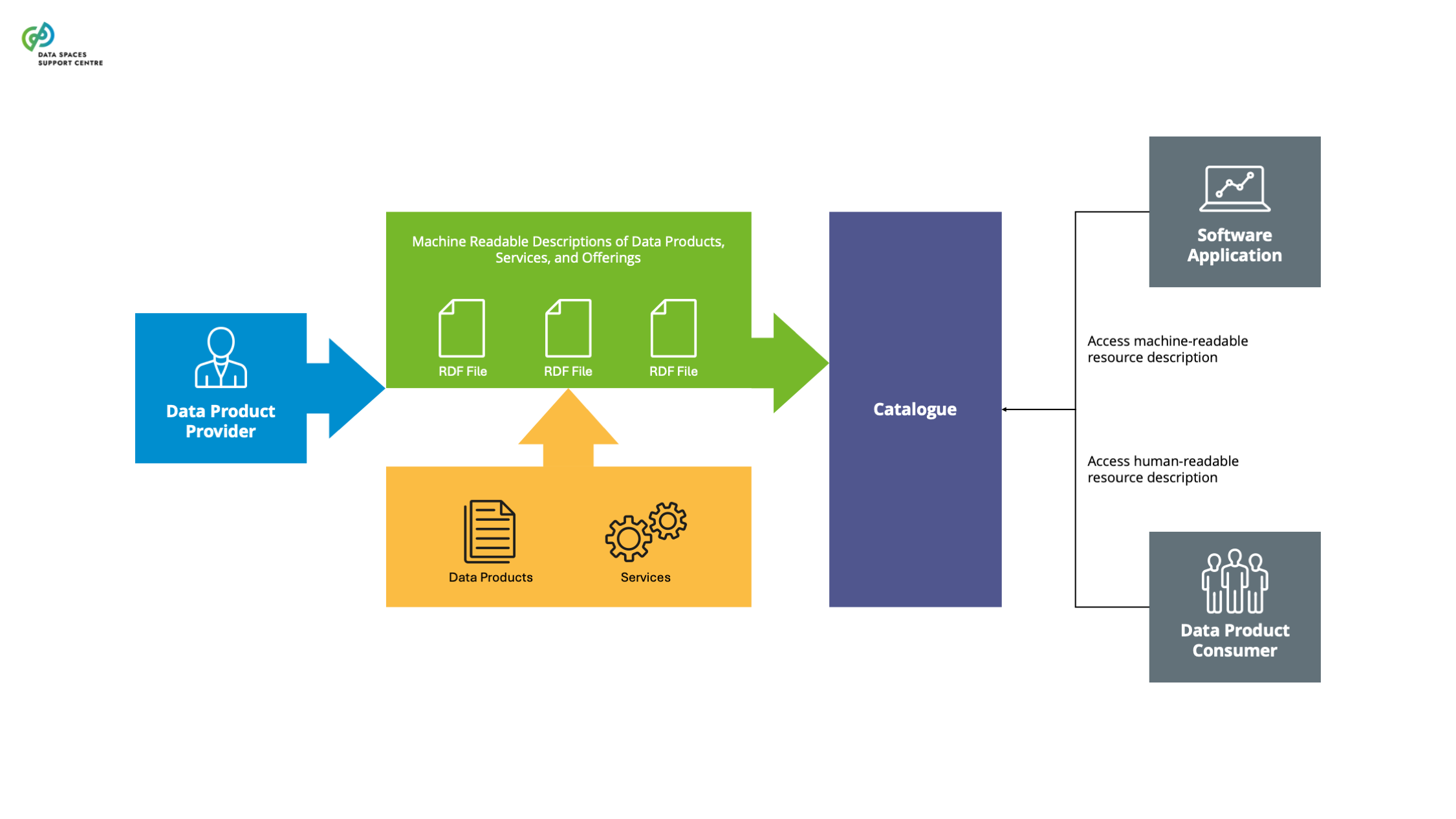

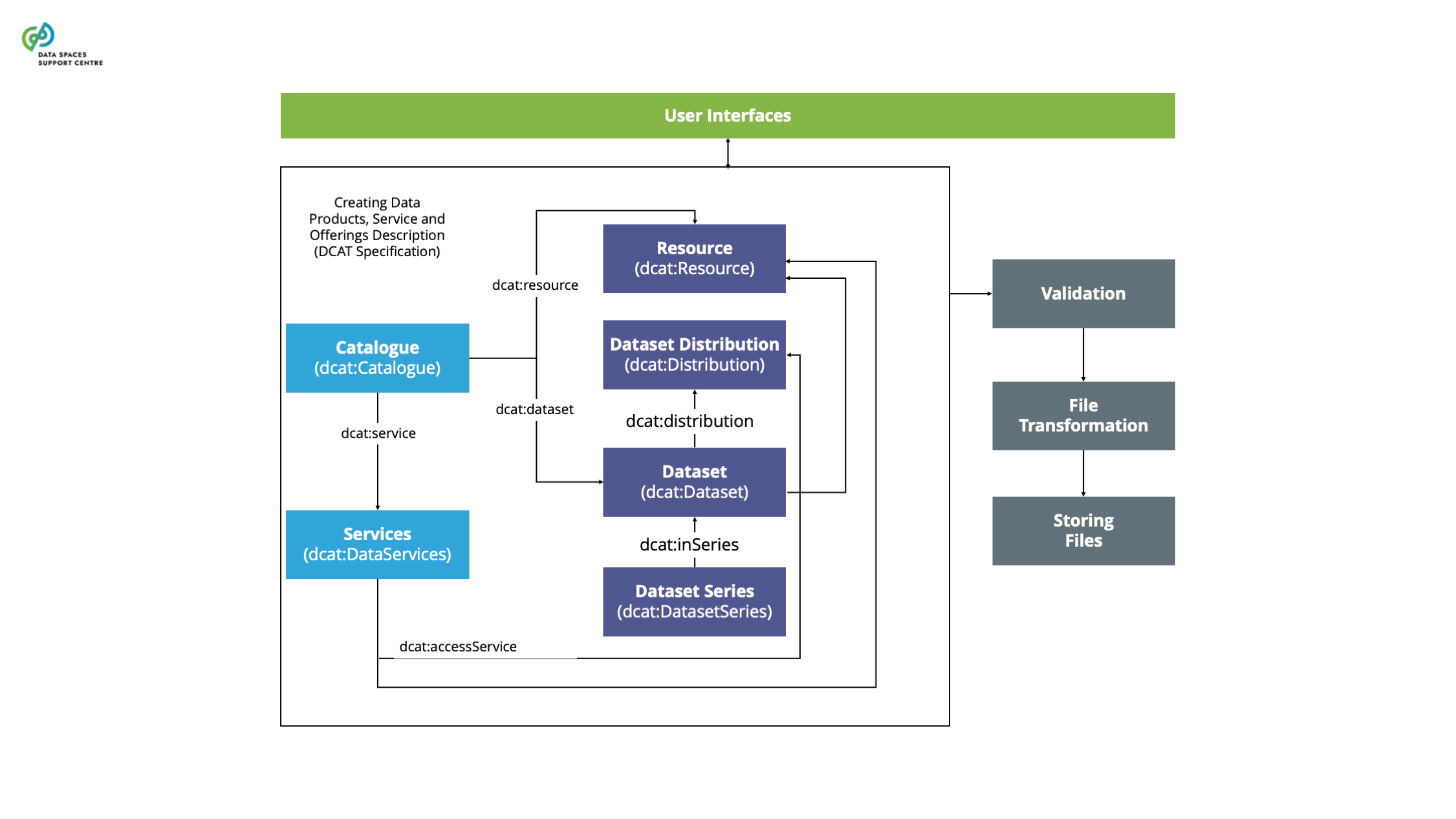

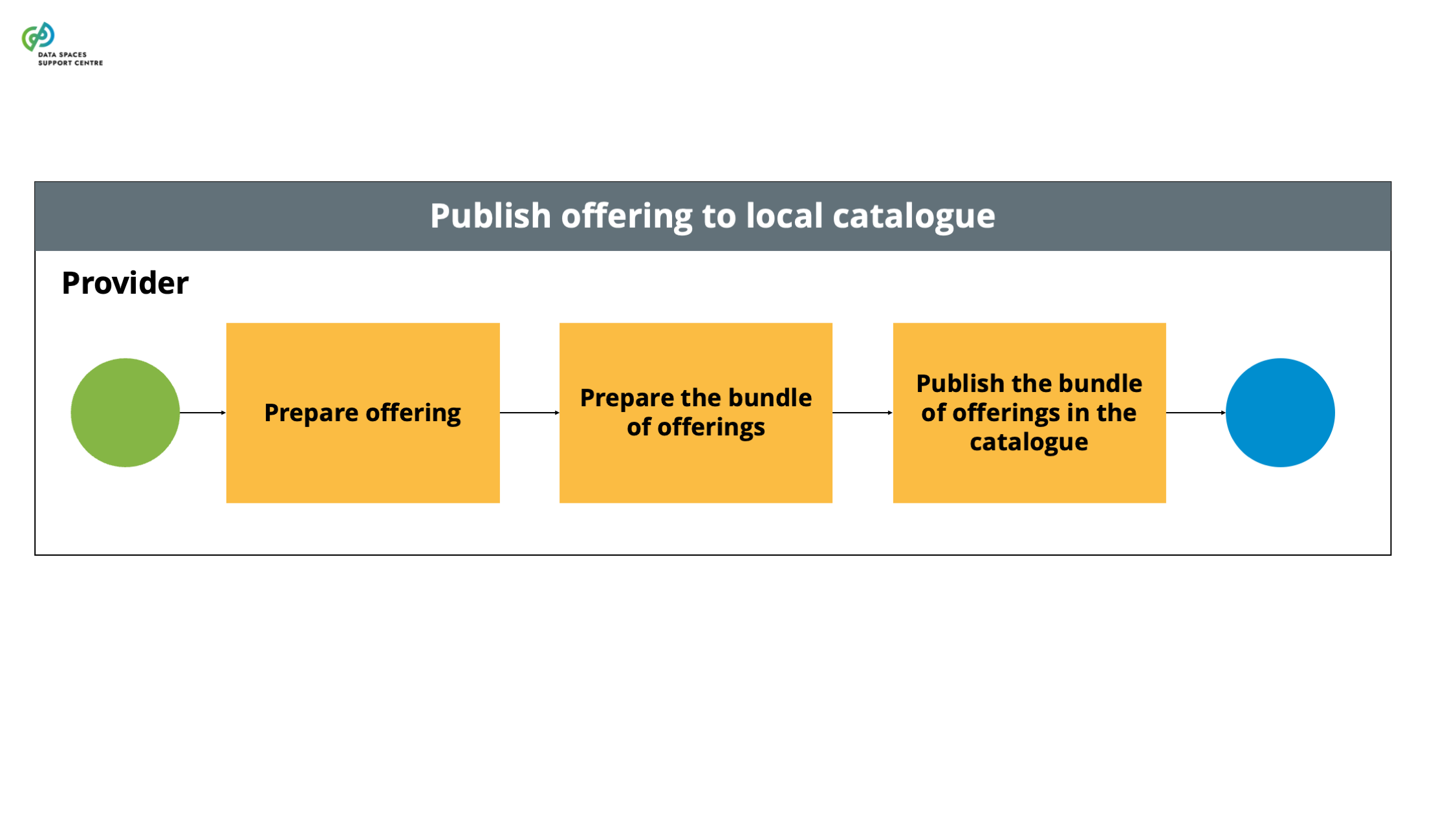

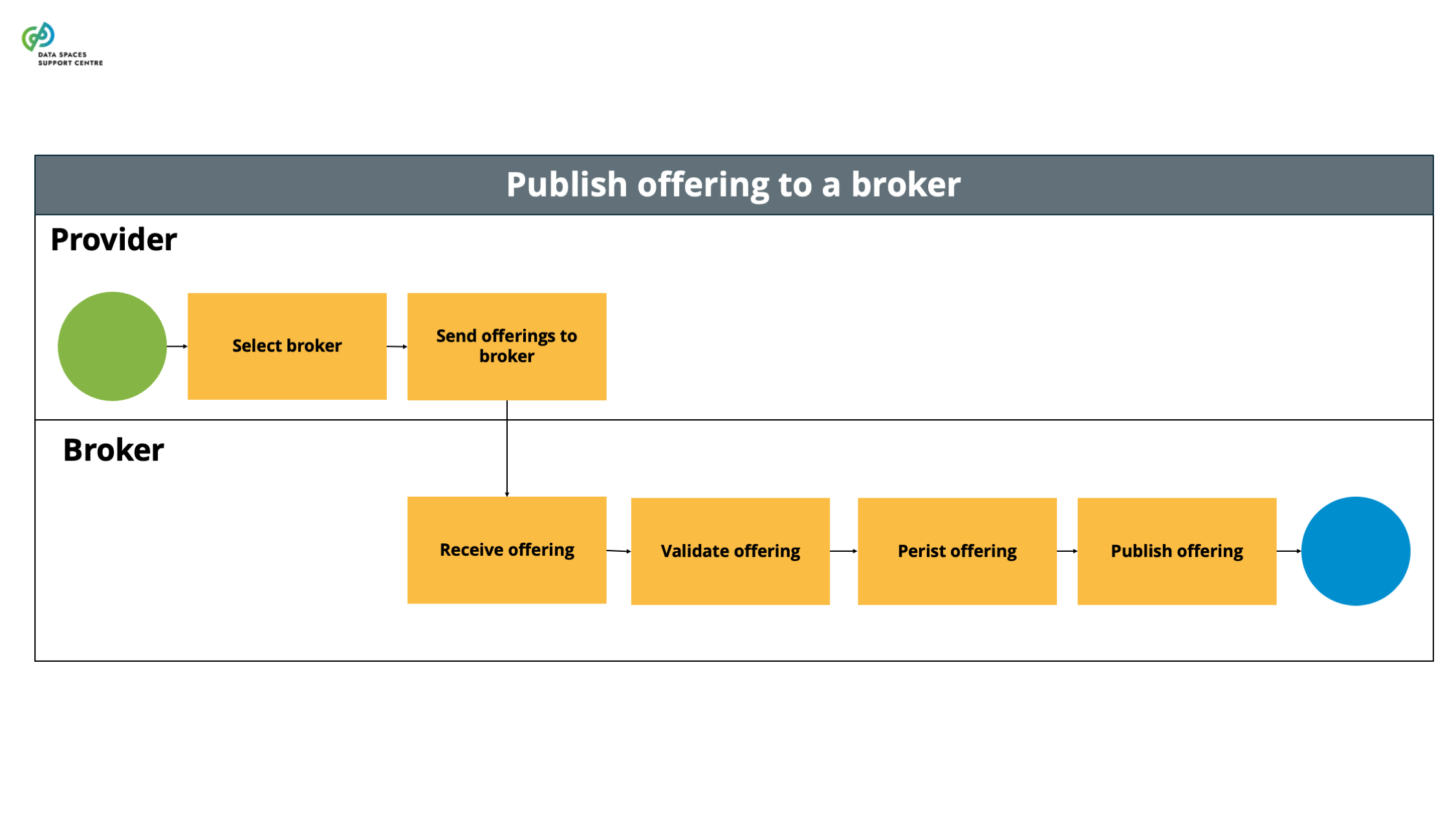

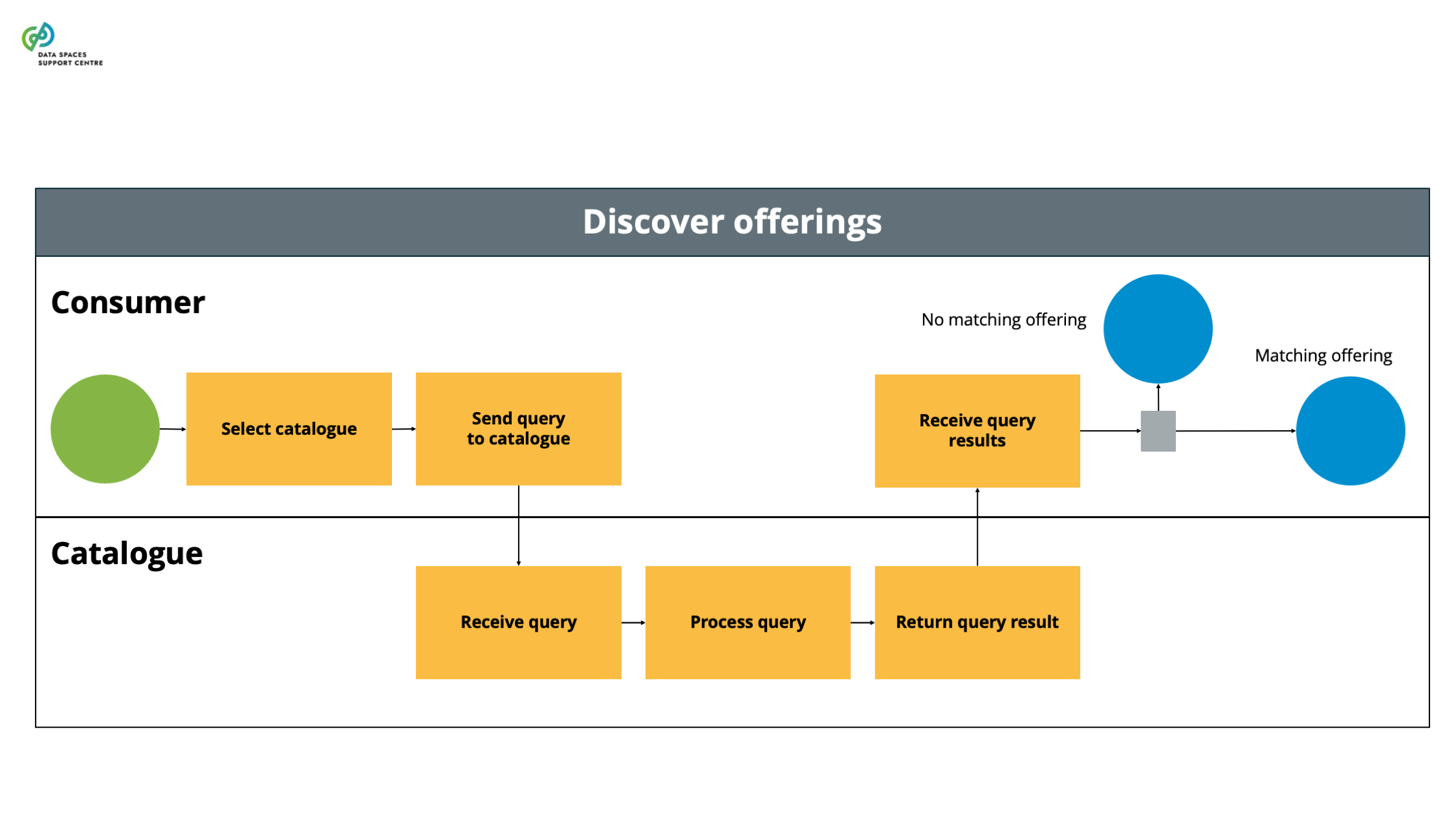

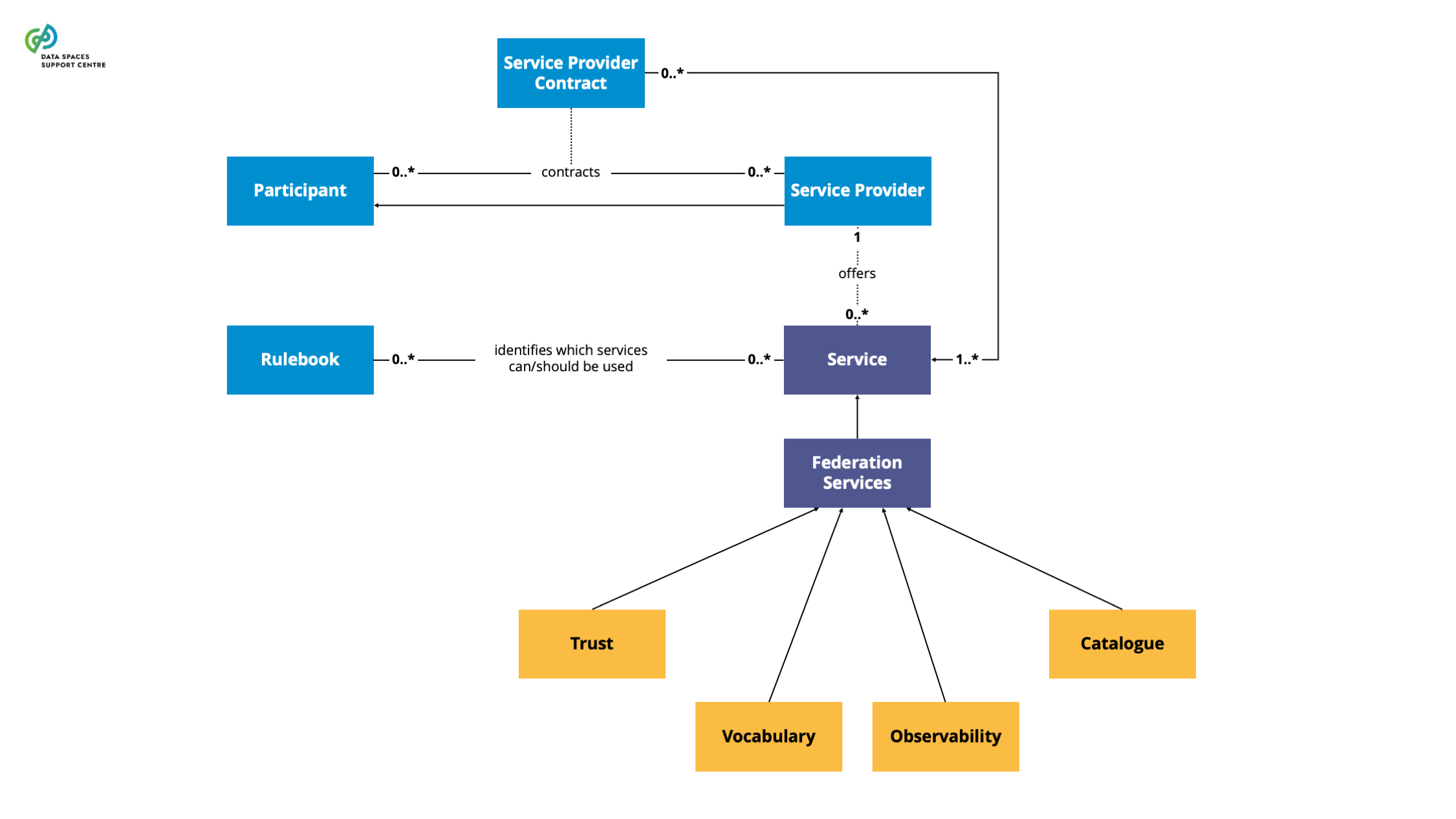

| Catalogue

Source (vsn) : Publication and Discovery (bv30)

|

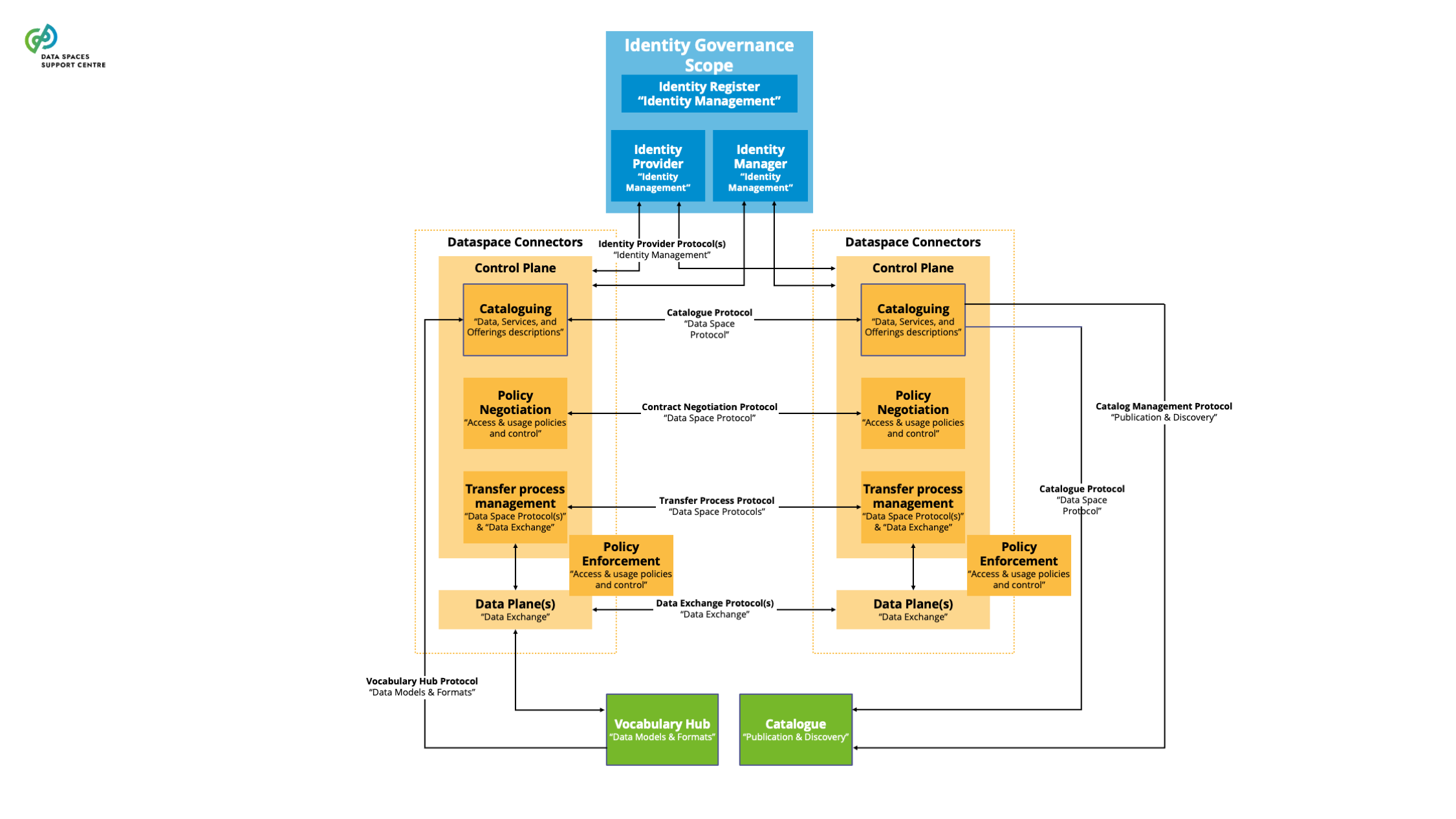

A functional component to provision and discover offerings of data and services in a data space. |

| Certification

Source (vsn) : Identity & Attestation Management (bv30)

|

third-party attestation related to an object of conformity assessment, with the exception of accreditation (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

| Claim

Sources (vsn) :

- 8 Identity and Trust (bv30)

- Identity & Attestation Management (bv30)

- Trust Framework (bv30)

|

an assertion made about a subject . (ref. Verifiable Credentials Data Model v2.0 (w3.org) |

| Collaborative Business Model

Source (vsn) : Business Model (bv30)

|

A description of the way multiple organizations collectively create, deliver and capture value. Typically, this level of value creation would not be achievable by a single organization.A collaborative business model is better suited to express a value creation system consisting of multiple actors. Intangible values such as sovereignty, solidarity, and security cannot be expressed through a transactional approach (which is common in firm-centric business models) but require a system perspective. |

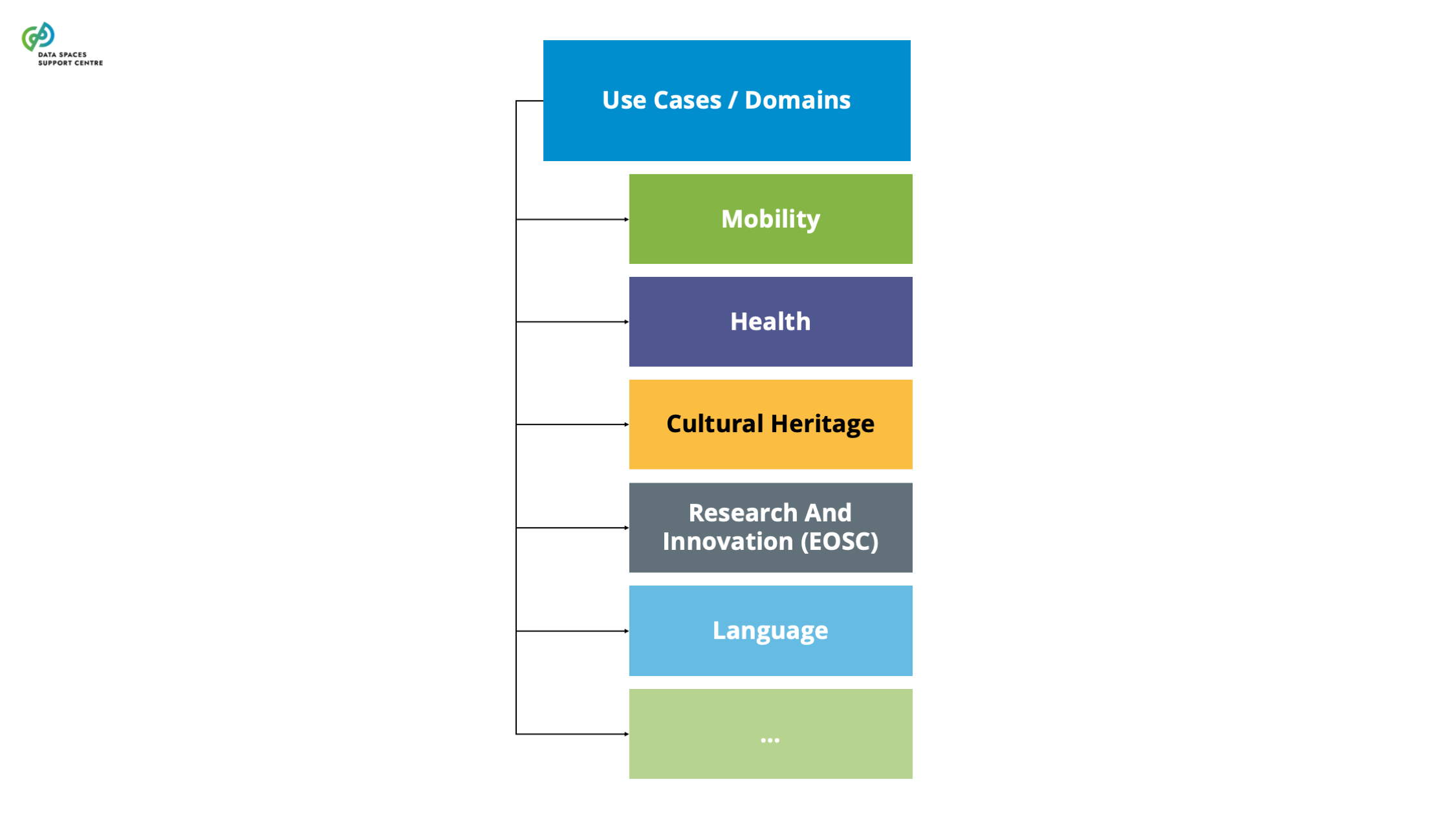

| Common European Data Spaces

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

Sectoral/domain-specific data spaces established in the European single market with a clear EU-wide scope that adheres to European rules and values.

Explanatory Texts :

- Common European Data Spaces are mentioned in the EU data strategy and introduced in the EC Staff Working Document on Common European Data Spaces and on this site: https://digital-strategy.ec.europa.eu/en/policies/data-spaces.

- It is furthermore referenced in the Data Governance Act with the following description: Purpose-, sector-specific or cross-sectoral interoperable frameworks of common standards and practices to share or jointly process data for, inter alia, development of new products and services, scientific research or civil society initiatives.

- Such sectoral/domain-specific Common European Data Spaces are being supported through EU-funding programmes, e.g. DIGITAL, Horizon Europe. In some domains (Health, Procurement) specific regulations towards the establishment of such data spaces are forthcoming.

|

| Community Heartbeat

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

The regular release of the data spaces blueprint, data spaces building blocks, other DSSC assets and supporting activities, as outlined in a public roadmap. The regular releases aim to engage the community of practice into a rhythm of communication, co-learning and continuous improvement. |

| Community Of Practice

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

The set of existing and emerging data space initiatives in all sectors and the set of (potential) data space component implementers. DSSC prioritises the data space projects funded by the Digital Europe Programme and other relevant programmes and will later expand to additional data space initiatives. |

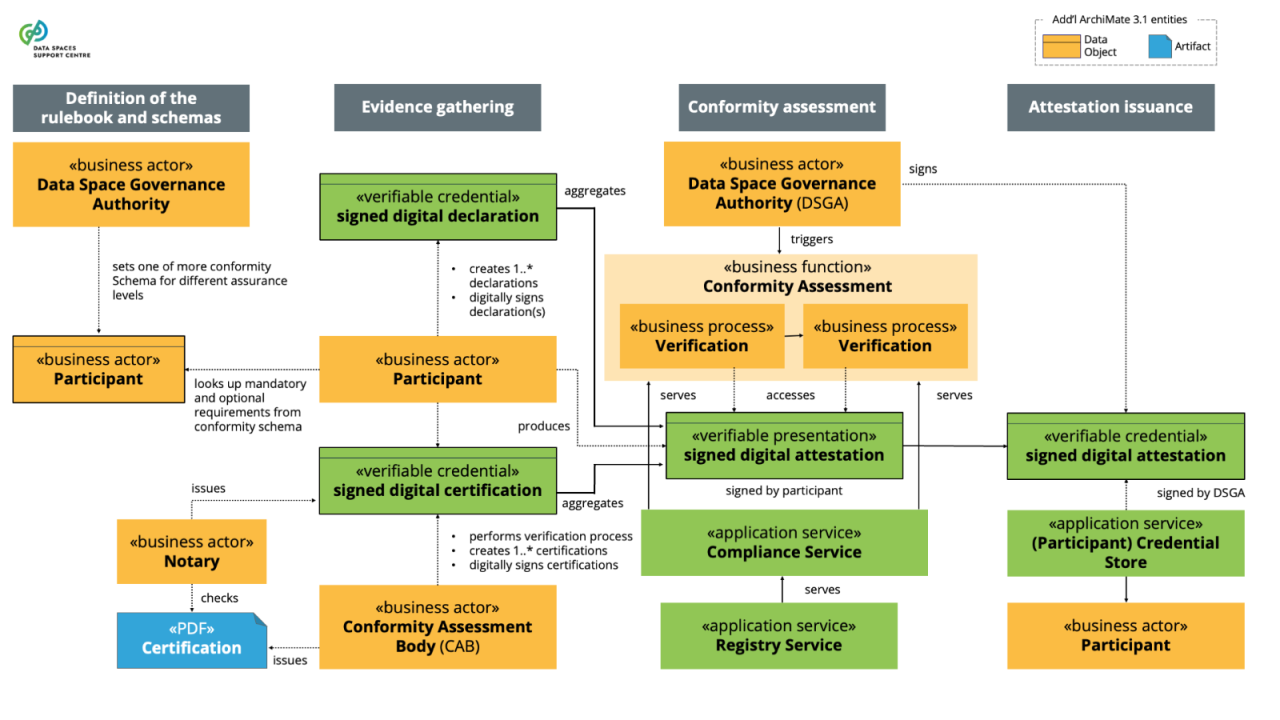

| Compliance Service

Source (vsn) : Identity & Attestation Management (bv30)

|

Service taking as input the Verifiable Credentials provided by the participants, checking them against the SHACL Shapes available in the Data Space Registry and performing other consistency checks based on rules in the data space conformity assessment scheme. |

| Component

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

A specification for a software or other artefact that realises one service or a set of services that fulfil functionalities described by one or more building blocks.

Explanatory Text : For technical components, that would typically be software, but for business components, this could consist of processes, templates or other artefacts.

Note : This term was automatically generated as a synonym for: data-space-component

|

| Component Architecture

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

An overview of all the data space components and their interactions, providing a high-level structure of how these components are organised and interact within data spaces.

Note : This term was automatically generated as a synonym for: data-space-component-architecture

|

| Conceptual Model Of Data Space

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

A consistent, coherent and comprehensive description of the concepts and their relationships that can be used to unambiguously explain what data spaces and data space initiatives are about. |

| Conformity Assessment

Source (vsn) : Identity & Attestation Management (bv30)

|

demonstration that specified requirements are fulfilled (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

| Conformity Assessment Body

Sources (vsn) :

- Identity & Attestation Management (bv30)

- Trust Framework (bv30)

|

A body that performs conformity assessment activities, excluding accreditation (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

| Conformity Assessment Scheme

Source (vsn) : Identity & Attestation Management (bv30)

|

set of rules and procedures that describe the objects of conformity assessment, identifies the specified requirements and provides the methodology for performing conformity assessment. (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ). |

| Connected Product

Source (vsn) : Regulatory Compliance (bv20)

|

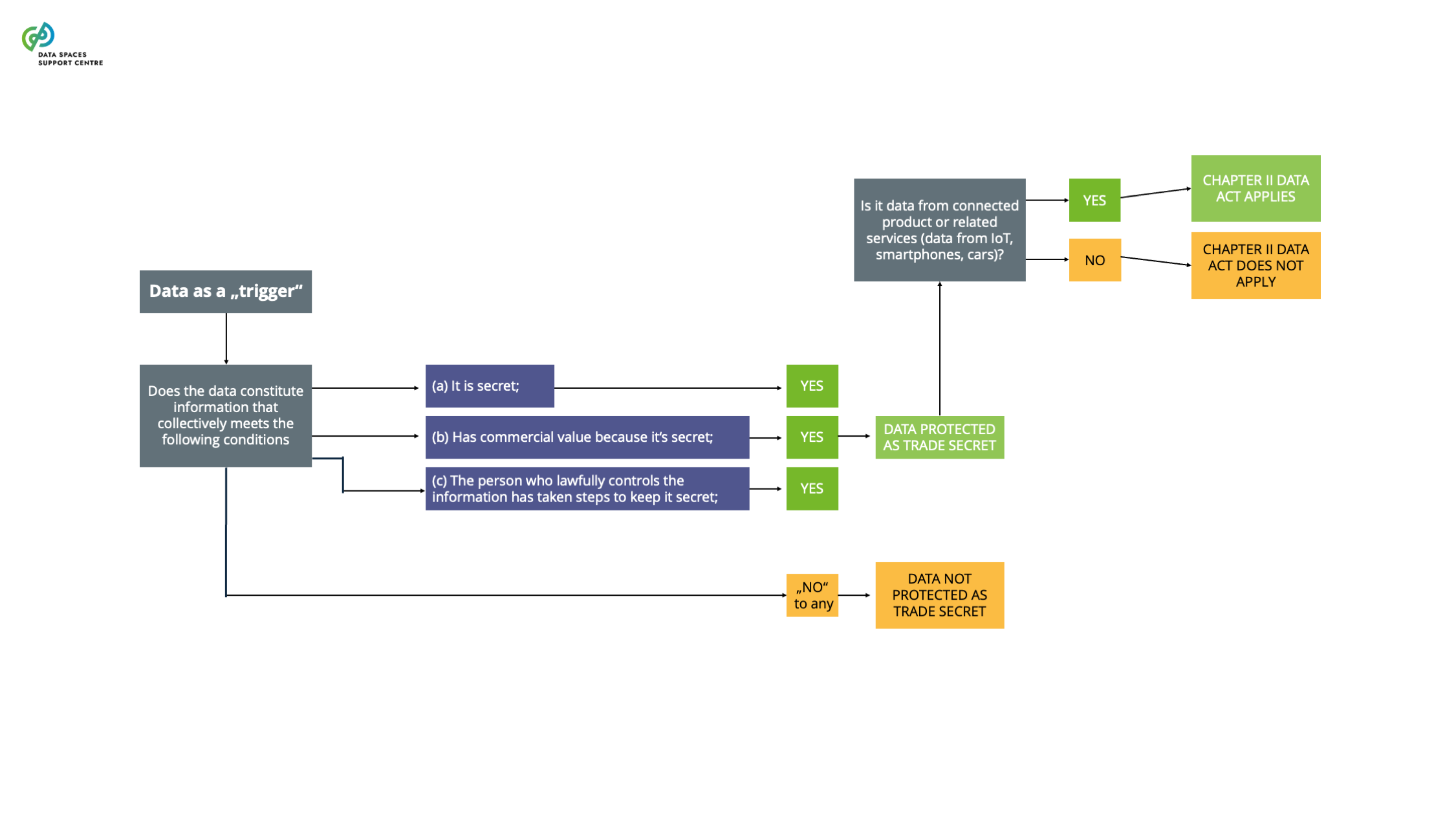

an item that obtains, generates or collects data concerning its use or environment and that is able to communicate product data via an electronic communications service, physical connection or on-device access, and whose primary function is not the storing, processing or transmission of data on behalf of any party other than the user (art. 2 (5) DA).

Explanatory Text : This term is defined as per the Data Act. This clarification ensures that the definition is understood within the specific regulatory context of the Data Act while allowing the same term to be used in other contexts with different meanings.

|

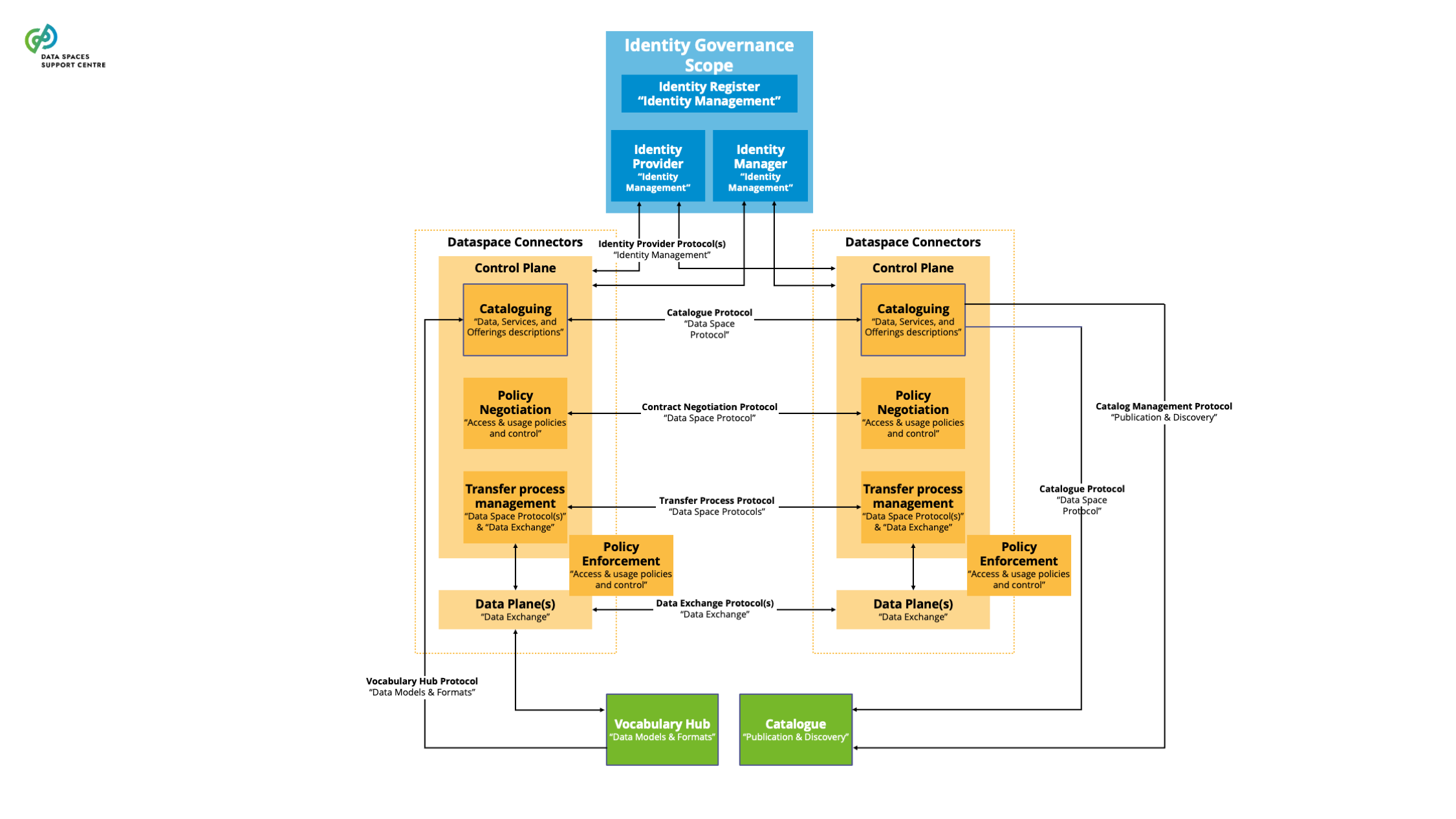

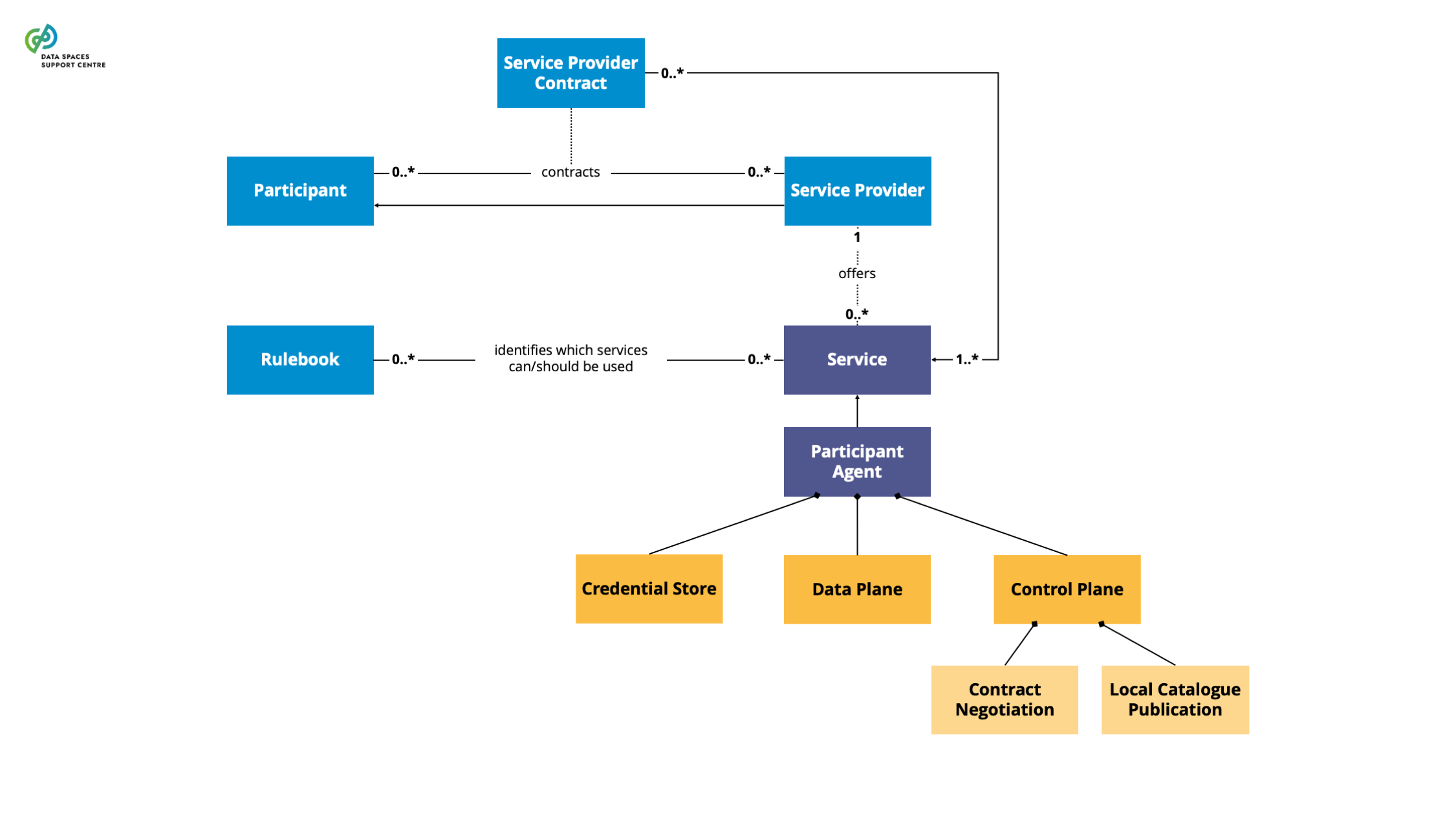

| Connector

Source (vsn) : 4 Data Space Services (bv20)

|

A technical component that is run by (or on behalf of) a participant and that provides participant agent services, with similar components run by (or on behalf of) other participants.

Explanatory Text : A connector can provide more functionality than is strictly related to connectivity. The connector can offer technical modules that implement data interoperability functions, authentication interfacing with trust services and authorisation, data product self-description, contract negotiation, etc. We use “participant agent services” as the broader term to define these services.

Note : This term was automatically generated as a synonym for: data-space-connector

|

| Consensus Protocol

Sources (vsn) :

- Data Exchange (bv20)

- Data Exchange_ (bv30)

|

The data exchange protocol that is globally accepted in a domain

Explanatory Text : In some domains the data exchange protocols are ‘de facto’ standards (e.g. NGSI for smart cities).

|

| Consent

Source (vsn) : Regulatory Compliance (bv20)

|

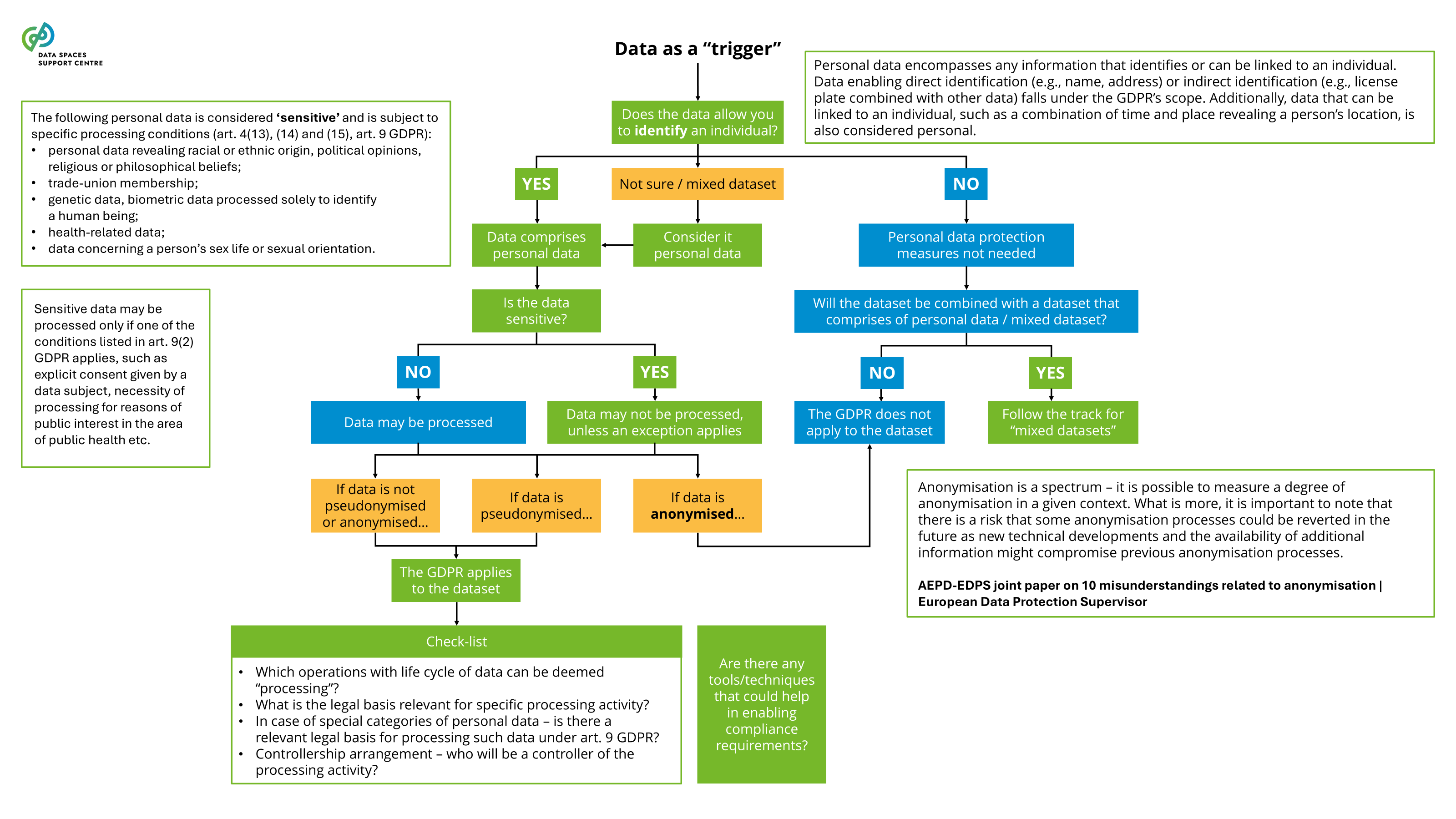

any freely given, specific, informed and unambiguous indication of the data subject's wishes by which he or she, by a statement or by a clear affirmative action, signifies agreement to the processing of personal data relating to him or her;( GDPR Article 4(11) ) |

| Consent Management

Source (vsn) : Access & Usage Policies Enforcement (bv20)

|

The process of obtaining, recording, managing, and enforcing user consent for data processing and sharing. |

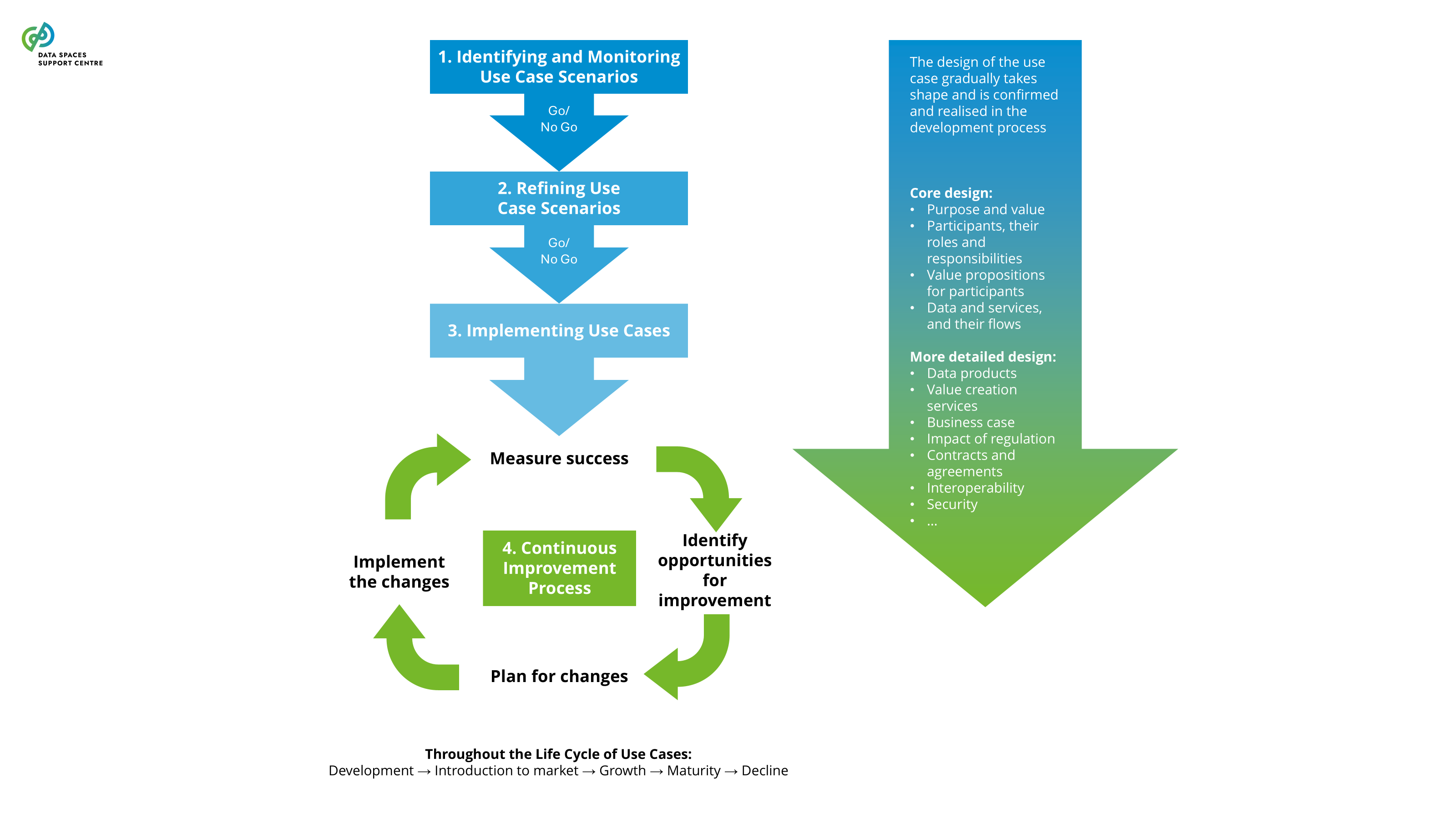

| Continuous Improvement Process

Sources (vsn) :

- Use Case Development (bv20)

- Use Case Development (bv30)

|

Continuously analyzing the performance of use cases, identifying improvement opportunities, and planning and implementing changes in a systematic and managed way throughout the life cycle of a use case. |

| Contract

Source (vsn) : Contractual Framework (bv30)

|

A formal, legally binding agreement between two or more parties with a common interest in mind that creates mutual rights and obligations enforceable by law. |

| Contractual Framework

Source (vsn) : Contractual Framework (bv30)

|

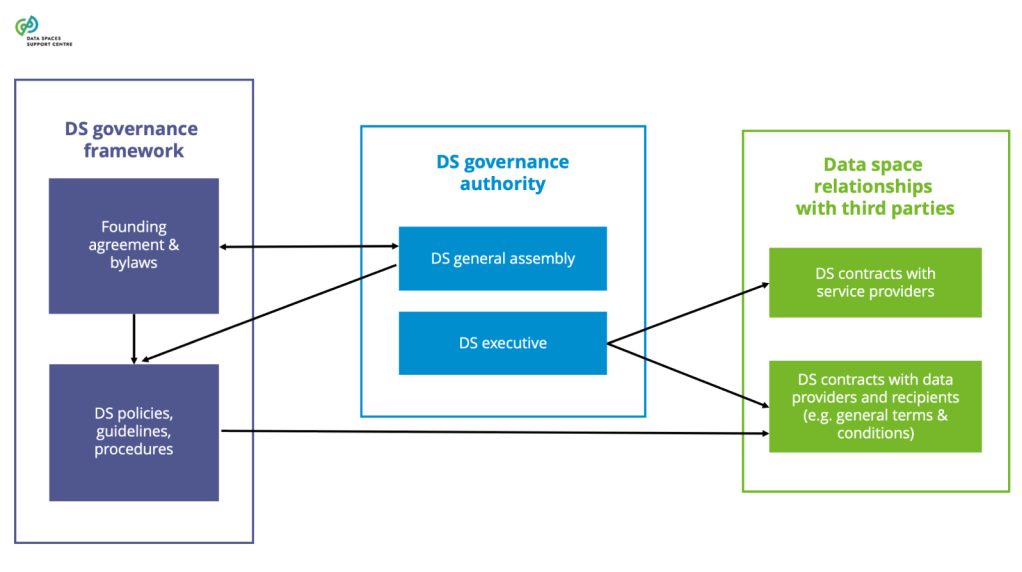

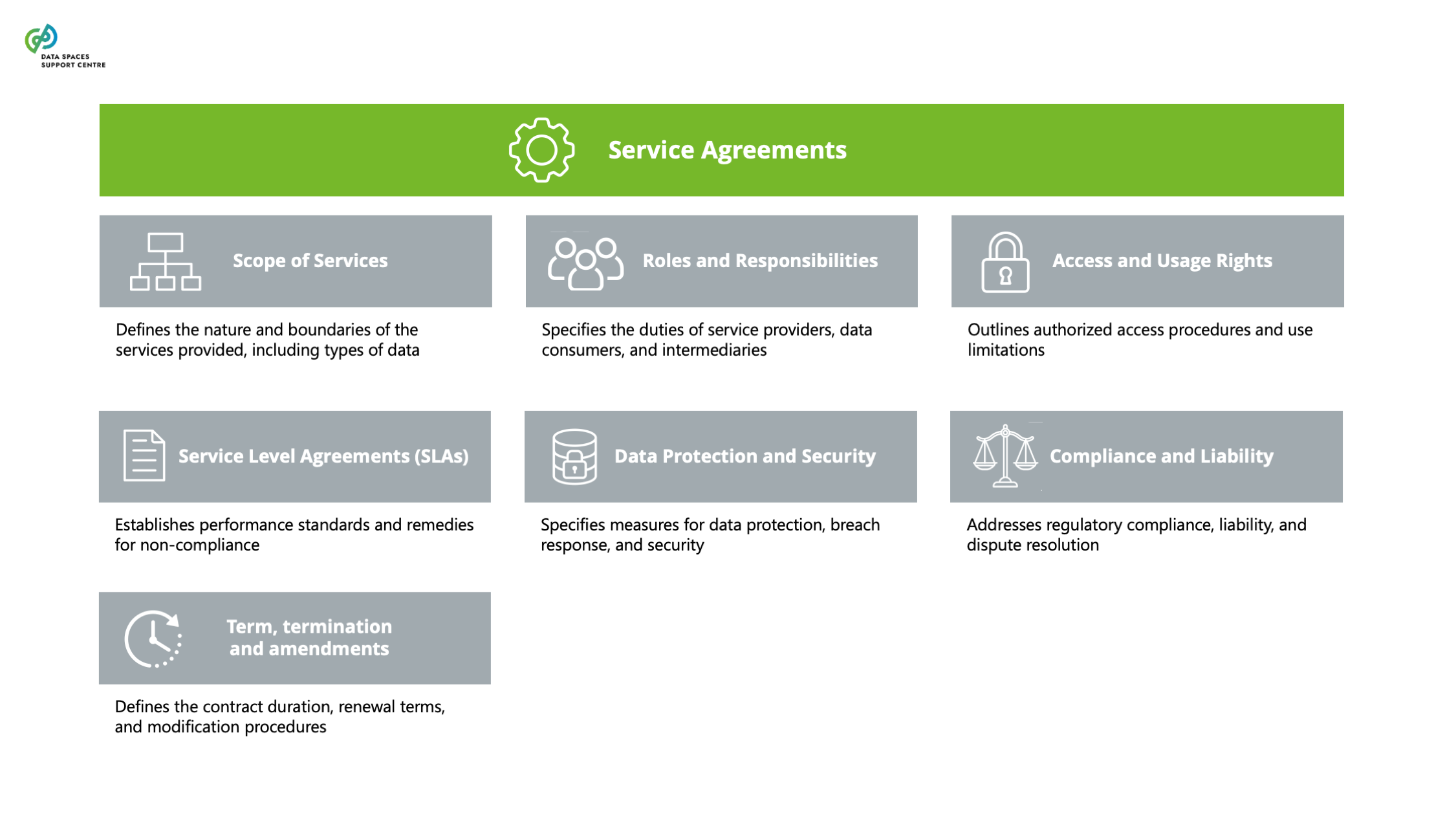

The set of legally binding agreements that regulates the relationship between data space participants and the data space (institutional agreements), transactions between data space participants (data-sharing agreements) and the provision of services (service agreements) within the context of a single data space. |

| Core Platform Service

Source (vsn) : Regulatory Compliance (bv20)

|

a service that means any of the following:

online intermediation services; online search engines; online social networking services; video-sharing platform services; number-independent interpersonal communications services; (f) operating systems;web browsers; virtual assistants; cloud computing services; online advertising services, including any advertising networks, advertising exchanges and any other advertising intermediation services, provided by an undertaking that provides any of the core platform services listed in points (1) to (9);

|

| Credential Schema

Source (vsn) : Identity & Attestation Management (bv30)

|

In the W3C Verifiable Credentials Data Model v2.0. the value of the “credentialSchema” property must be one or more data schemas that provide verifiers with enough information to determine whether the provided data conforms to the provided schema(s). (ref: https://www.w3.org/TR/vc-data-model-2.0/#data-schemas ) |

| Credential Store

Source (vsn) : Identity & Attestation Management (bv30)

|

A service used to issue, store, manage, and present Verifiable Credentials. |

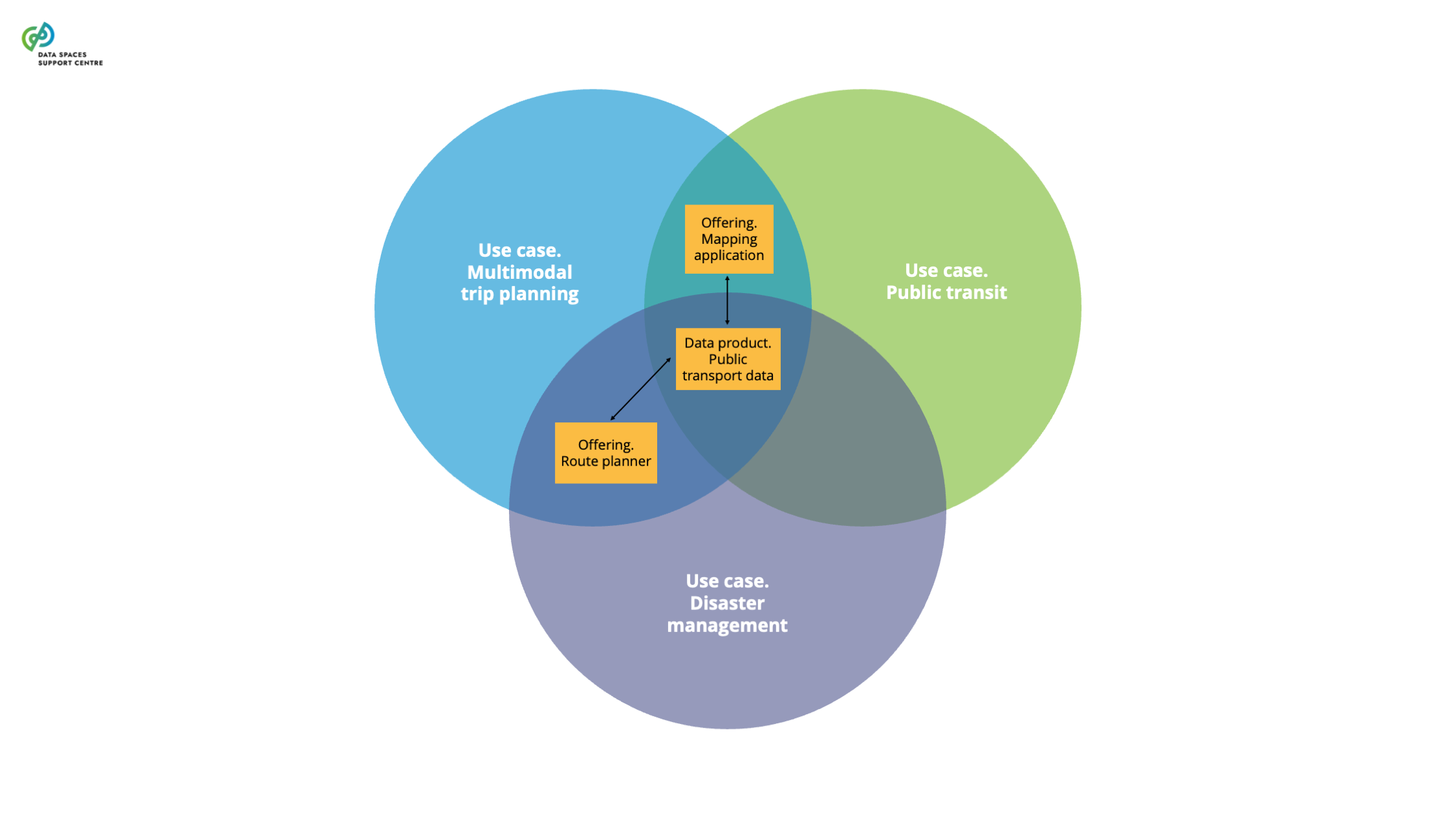

| Cross Data Space Use Case

Source (vsn) : 2 Data Space Use Cases and Business Model (bv30)

|

A specific setting in which participants of multiple data spaces create value (business, societal or environmental) from sharing data across these data spaces. |

| Cross Use Case

Source (vsn) : 2 Data Space Use Cases and Business Model (bv30)

|

A specific setting in which participants of multiple data spaces create value (business, societal or environmental) from sharing data across these data spaces.

Note : This term was automatically generated as a synonym for: cross-data-space-use-case

|

| Cross- Interoperability

Source (vsn) : 7 Interoperability (bv30)

|

The ability of participants to seamlessly access and/or exchange data across two or more data spaces. Cross-data spaces interoperability addresses the governance, business and technical frameworks to interconnect multiple data space instances seamlessly.

Explanatory Text : Inter-data space interoperability is an alternative term and both terms can be used interchangeably.

Note : This term was automatically generated as a synonym for: cross-data-space-interoperability

|

| Cross-data Space Interoperability

Source (vsn) : 7 Interoperability (bv30)

|

The ability of participants to seamlessly access and/or exchange data across two or more data spaces. Cross-data spaces interoperability addresses the governance, business and technical frameworks to interconnect multiple data space instances seamlessly.

Explanatory Text : Inter-data space interoperability is an alternative term and both terms can be used interchangeably.

|

| Data

Source (vsn) : Regulatory Compliance (bv20)

|

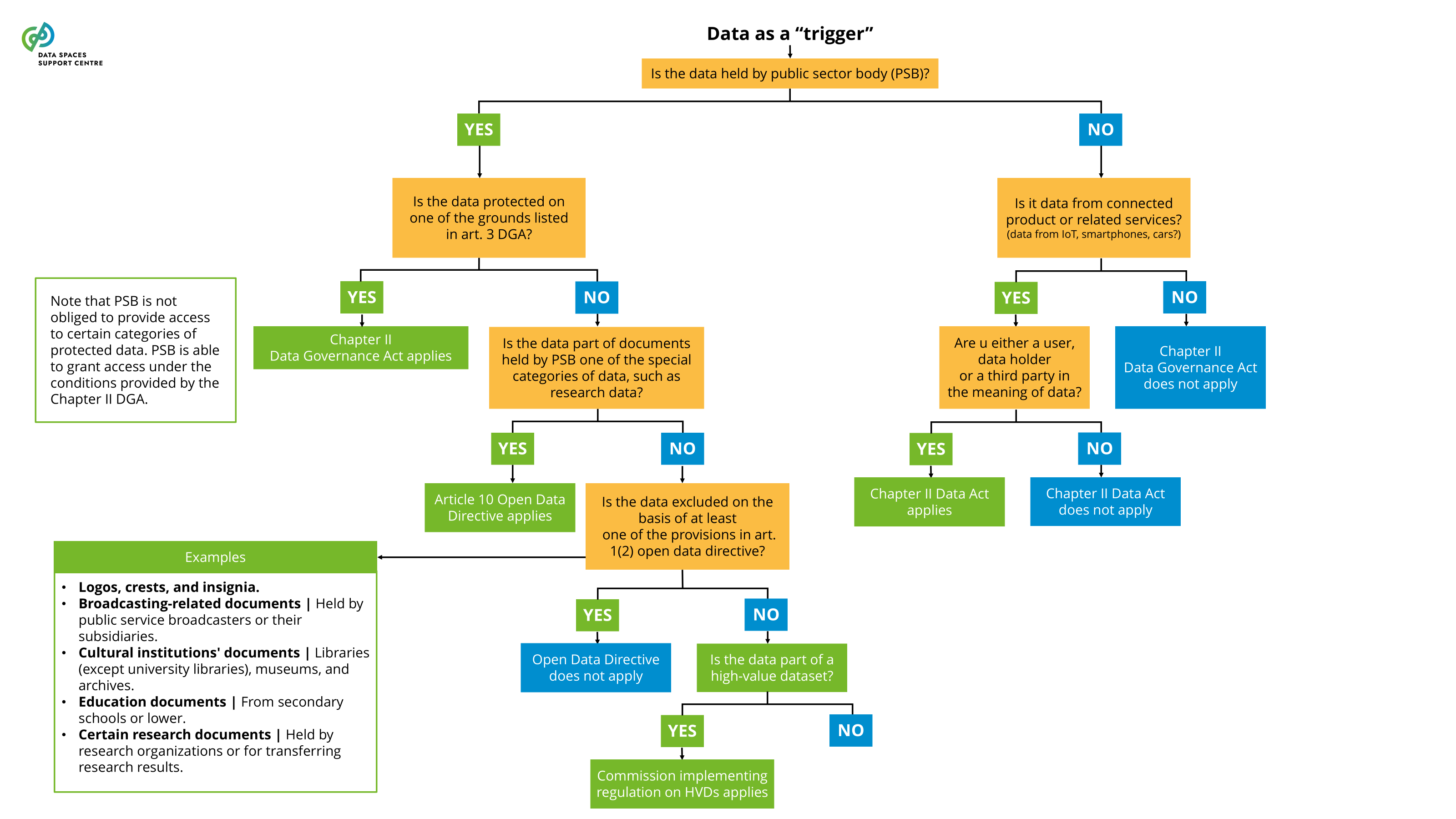

any digital representation of acts, facts or information and any compilation of such acts, facts or information, including in the form of sound, visual or audiovisual recording;( DGA Article 2(1) )

Explanatory Text : The definition is the same in the Open Data Directive and the Data Act.

|

| Data Access Policy

Source (vsn) : 6 Data Policies and Contracts (bv30)

|

A specific data policy defined by the data rights holder for accessing their shared data in a data space.

Explanatory Text : A data access policy that provides operational guidance to a data provider for deciding whether to process or reject a request for providing access to specific data. Data access policies are created and maintained by the data rights holders.

|

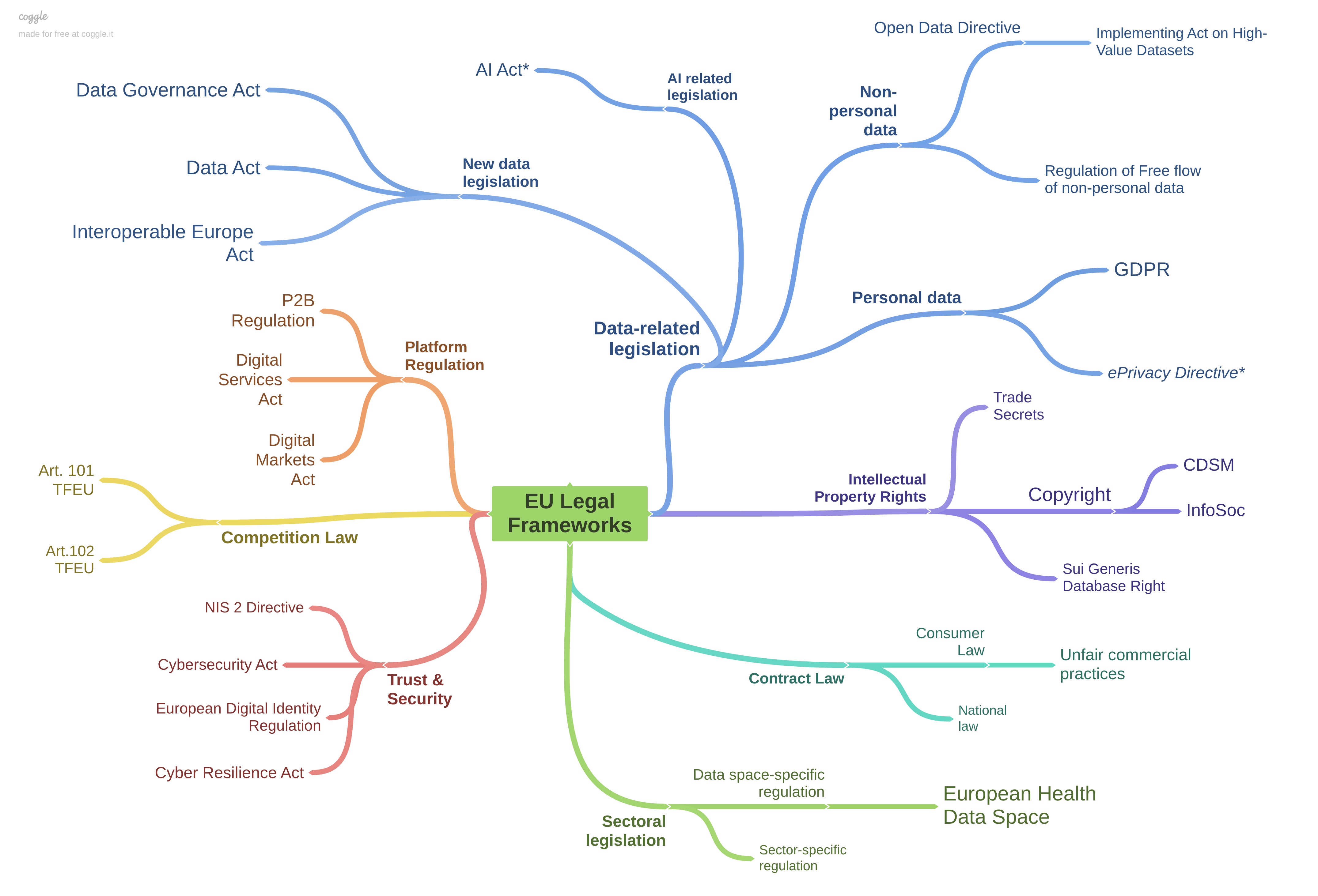

| Data Act

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

A European regulation that establishes EU-wide rules for access to the product or related service data to the user of that connected product or service. The regulation also includes essential requirements for the interoperability of data spaces (Article 33) and essential requirements for smart contracts to implement data sharing agreements (Article 36).

Explanatory Text : More info can be found here: Data Act explained | Shaping Europe’s digital future and the Data Act Frequently-asked-questions.

|

| Data Altruism

Source (vsn) : Regulatory Compliance (bv20)

|

the voluntary sharing of data on the basis of the consent of data subjects to process personal data pertaining to them, or permissions of data holders to allow the use of their non-personal data without seeking or receiving a reward that goes beyond compensation related to the costs that they incur where they make their data available for objectives of general interest as provided for in national law, where applicable, such as healthcare, combating climate change, improving mobility, facilitating the development, production and dissemination of official statistics, improving the provision of public services, public policy making or scientific research purposes in the general interest;( DGA Article 2(16) ) |

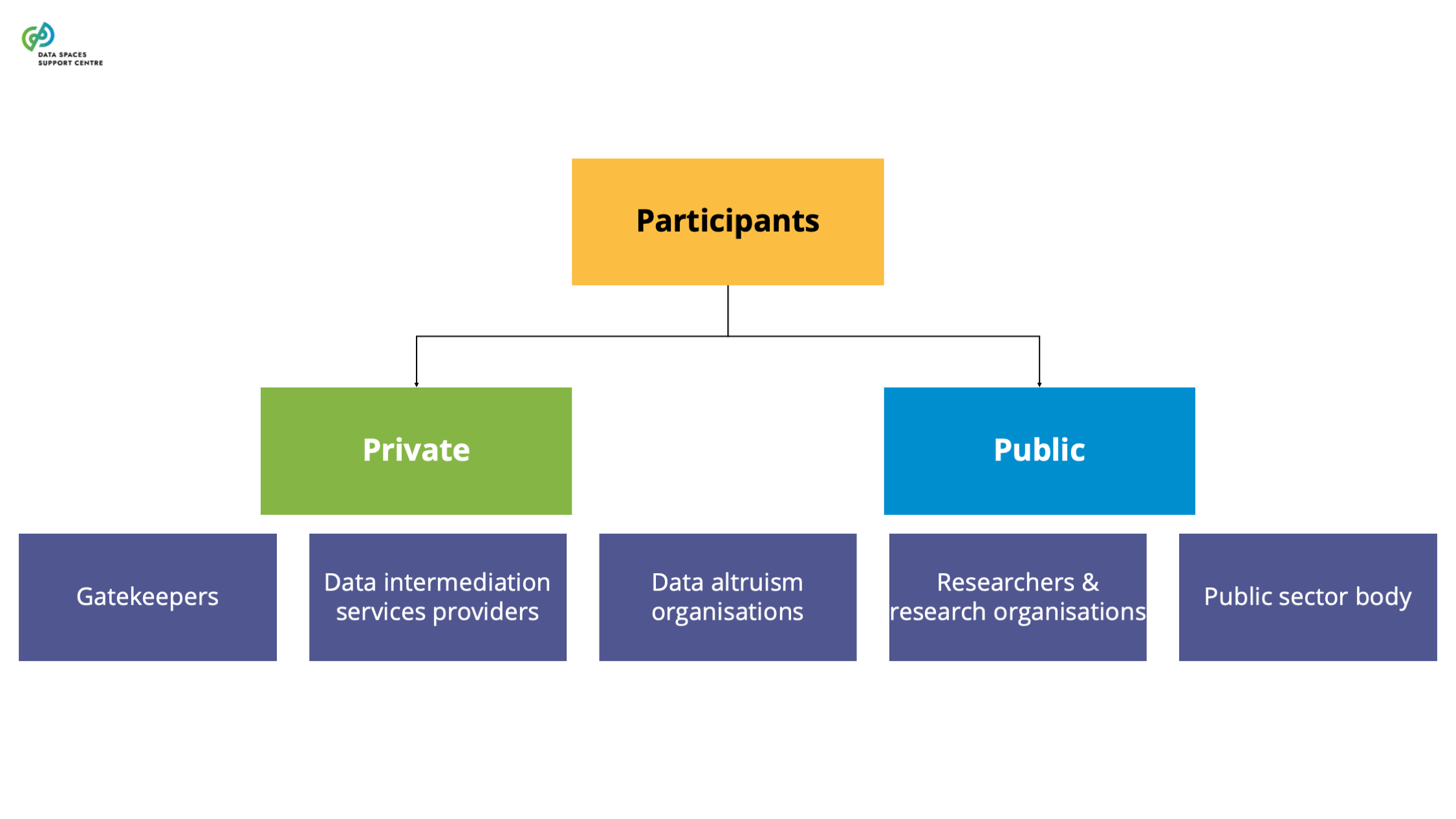

| Data Altruism Organisations (DAOs)

Source (vsn) : Types of Participants (Participant as a Trigger) (bv20)

|

In the context of data spaces, DAOs can take on a variety of roles as data space participants. They can be data providers, transaction participants, and data space intermediaries (e.g., personal data intermediaries). It is important to address their participation in the data space, especially regarding the value distribution aspects and their potential sponsoring by the data space . |

| Data Consumer

Source (vsn) : 5 Data Products and Transactions (bv30)

|

A synonym of data product consumer |

| Data Consumer

Source (vsn) : Publication and Discovery (bv30)

|

A consumer of data or service. |

| Data Element

Source (vsn) : Data Models (bv30)

|

the smallest units of data that carry a specific meaning within a dataset. Each data element has a name, a defined data type (such as text, number, or date), and often a description that explains what it represents. |

| Data Governance

Source (vsn) : Access & Usage Policies Enforcement (bv20)

|

framework of policies, processes, and standards that ensure effective management, quality, security, and proper use of data within an organization. |

| Data Governance Act

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

A European regulation that aims to create a framework to facilitate European data spaces and increase trust between actors in the data market. The DGA entered into force in June 2022 and applies from Sept 2023. The DGA defines the European Data Innovation Board (EDIB). |

| Data Holder

Source (vsn) : Regulatory Compliance (bv20)

|

a legal person, including public sector bodies and international organisations, or a natural person who is not a data subject with respect to the specific data in question, which, in accordance with applicable Union or national law, has the right to grant access to or to share certain personal data or non-personal data;( DGA Article 2(8) ) a natural or legal person that has the right or obligation, in accordance with this Regulation, applicable Union law or national legislation adopted in accordance with Union law, to use and make available data, including, where contractually agreed, product data or related service data which it has retrieved or generated during the provision of a related service;( DA Article 2(13))

Explanatory Text : In general context we use data holder within the meaning of DGA Art. 2(8) and in case the word data holder is used in the context of DA (IoT data), we identify it with DA at the end of the term. Please note that data rights holder has a specific and different meaning than data holder and is used especially in data transactions.

|

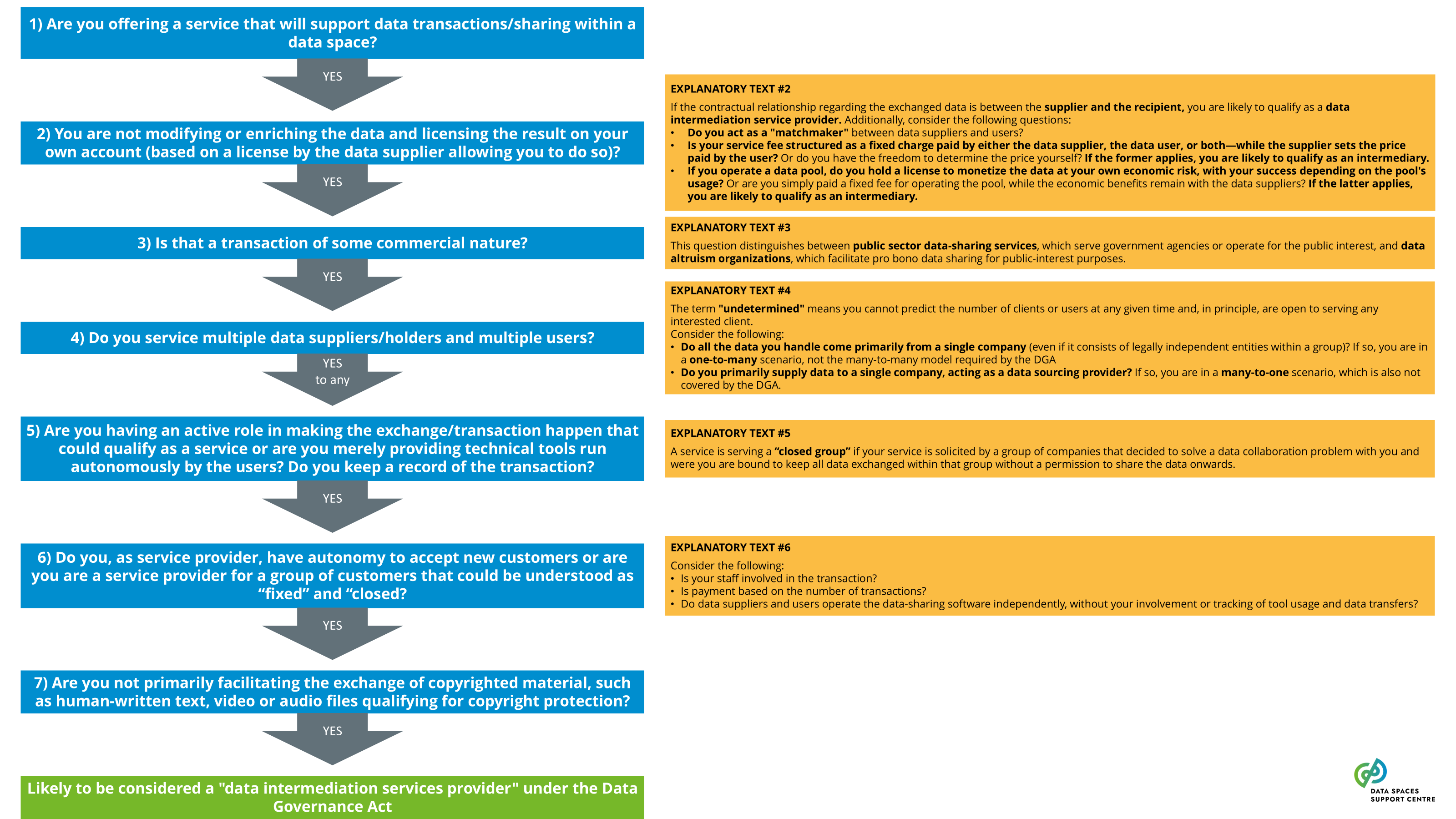

| Data Intermediation Service

Source (vsn) : Regulatory Compliance (bv20)

|

a service that is legally defined in the Data Governance Act and enforced by national agencies. An operator in a data space may qualify as a data intermediation service provider, depending on the scope of the services.DGA definition (simplified): ‘Data intermediation service’ means a service which aims to establish commercial relationships for the purposes of data sharing between an undetermined number of data subjects and data holders on the one hand and data users on the other through technical, legal or other means, including for the purpose of exercising the rights of data subjects in relation to personal data.( DGA Article 2 (11) )

Explanatory Text : Services that fall within this definition will be bound to comply with a range of obligations. The most important two are: (1) Service providers cannot use the data for other purposes than facilitating the exchange between the users of the service; (2) Services of intermediation (e.g. catalogue services, app stores) have to be separate from services providing applications. Both rules are meant to provide for a framework of truly neutral data intermediaries.

|

| Data Intermediation Service Providers (DISPs)

Source (vsn) : Types of Participants (Participant as a Trigger) (bv20)

|

The recent Data Governance Act (DGA) sets out specific requirements for providing data intermediation services. Certain service functions in data spaces are likely to qualify as data intermediation services (see: Data Intermediation Service Provider Flowchart ). A data space governance authority should also evaluate to what extent it organises any services that may qualify as data intermediation services under the DGA. If this is the case, it will need to ensure compliance with the provisions of the DGA.Data Intermediation Service under the Data Governance ActUnder the Data Governance Act, a “data intermediation service” (“DIS”) is defined as a service aiming to establish commercial relationships for the purposes of data sharing between an undetermined number of data subjects and data holders on the one hand and data users on the other.Data intermediation service providers intending to provide data intermediation services are required under the DGA to submit a notification to the competent national authority for data intermediation services. The provision of data intermediation services is subject to a range of conditions, including a limitation on the use by the provider of the data for which it provides data intermediation services.The European Commission hosts a register of data intermediation services recognised in the European Union: https://digital-strategy.ec.europa.eu/en/policies/data-intermediary-services Providers of data intermediation services and data space intermediaries/operatorsIntermediary services are covered more broadly in “ Data Space Intermediaries and Operators ”. The term “data space intermediary” refers to “a data space participant that provides one or more enabling services while not directly participating in the data transactions”. Enabling services refers to “a service that implements a data space functionality that enables data transactions for the transaction participants and/or operational processes for the governance authority.” (see the DSSC Glossary for more information)It is important to note that not all data space intermediaries would be subject to the provisions of the DGA by default. First of all, some of the potential enabling services they provide may not be aimed at establishing commercial relationships for the purposes of data sharing. It may also be the case that, in the circumstances at hand, the services may not result in commercial relationships between an undetermined number of data subjects and data holders on the one hand and data users on the other.Data intermediation services and personal dataThe services of data intermediation service providers may also relate to personal data. In such cases, it is important to appropriately consider the different roles and responsibilities under the DGA and the GDPR. For a transaction facilitated by a provider of data intermediation services, it is difficult to establish who is acting as the controller, whether there are multiple controllers acting as joint controllers, whether there is a processor and whether data users are data recipients. It may be important to clarify the respective responsibilities of particular data space participants by offering guidance to help ensure overall compliance with obligations under the DGA and the GDPR. |

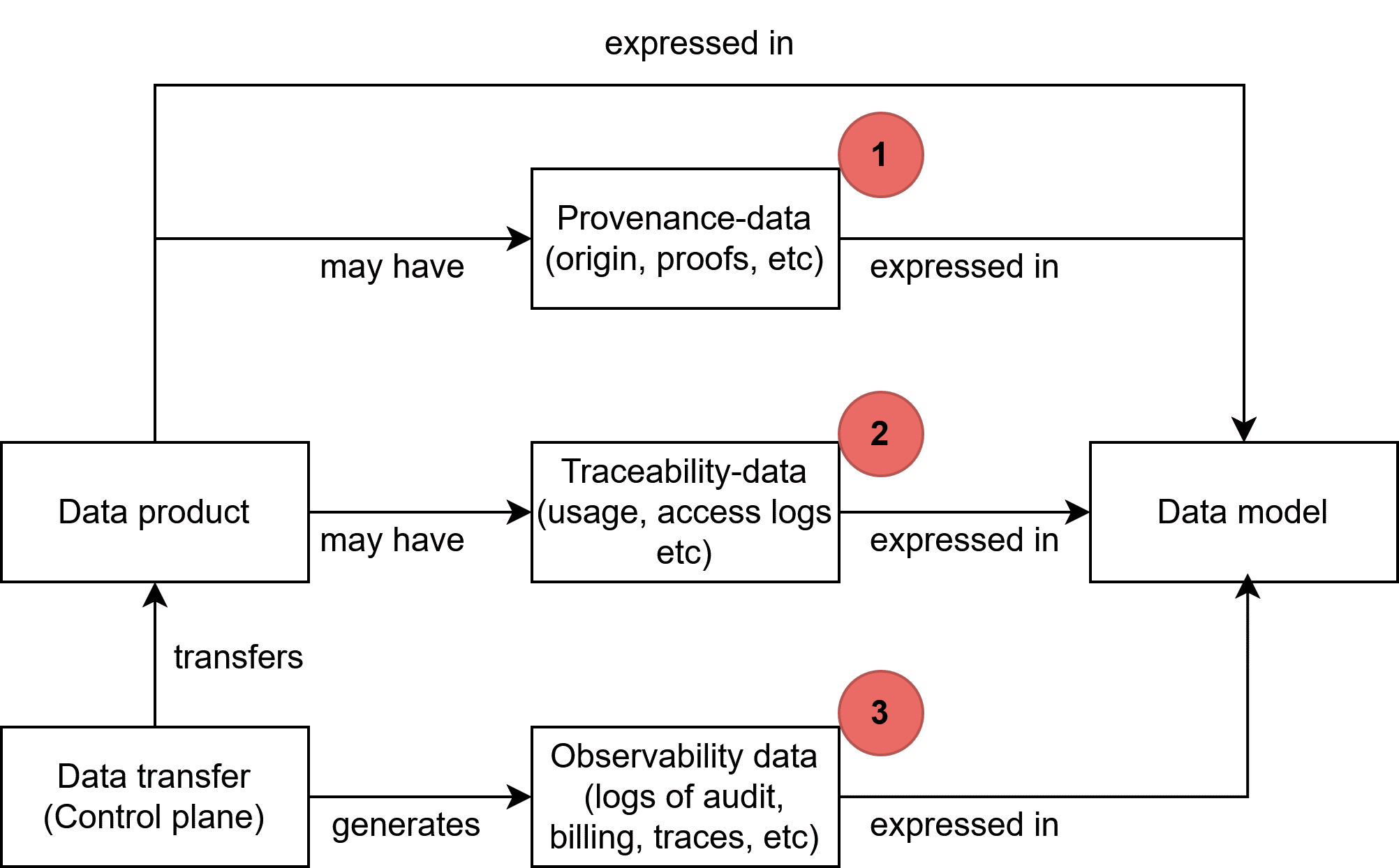

| Data Model

Sources (vsn) :

- Data Models (bv20)

- Data Models (bv30)

|

A structured representation of data elements and relationships used to facilitate semantic interoperability within and across domains, encompassing vocabularies, ontologies, application profiles and schema specifications for annotating and describing data sets and services.These abstraction levels may not need to be hierarchical; they can exist independently. |

| Data Model Provider

Sources (vsn) :

- Data Models (bv20)

- Data Models (bv30)

|

An entity responsible for creating, publishing, and maintaining data models within data spaces. This entity facilitates the management process of vocabulary creation, management, and updates. |

| Data Policy

Source (vsn) : 6 Data Policies and Contracts (bv30)

|

A set of rules, working instructions, preferences and other guidance to ensure that data is obtained, stored, retrieved, and manipulated consistently with the standards set by the governance framework and/or data rights holders.

Explanatory Text : Data policies govern aspects of data management within or between data spaces, such as access, usage, security, and hosting.

|

| Data Policy Enforcement

Source (vsn) : 6 Data Policies and Contracts (bv30)

|

A set of measures and mechanisms to monitor, control and ensure adherence to established data policies.

Explanatory Text : Policies can be enforced by technology or organisational manners.

|

| Data Policy Negotiation

Source (vsn) : 6 Data Policies and Contracts (bv30)

|

The process of reaching agreements on data policies between a data rights holder, a data provider and a data recipient. The negotiation can be fully machine-processable and immediate or involve human activities as part of the workflow. |

| Data Processing

Source (vsn) : Access & Usage Policies Enforcement (bv20)

|

Collection, manipulation, and transformation of raw data into meaningful information or results. |

| Data Product

Sources (vsn) :

- 1 Key Concept Definitions (bv30)

- 5 Data Products and Transactions (bv30)

- Data Space Offering (bv20)

|

Data sharing units, packaging data and metadata, and any associated license terms.

Explanatory Texts :

- We borrow this definition from the CEN Workshop Agreement Trusted Data Transactions.

- The data product may include, for example, the data products' allowed purposes of use, quality and other requirements the data product fulfils, access and control rights, pricing and billing information, etc.

|

| Data Product Consumer

Sources (vsn) :

- 5 Data Products and Transactions (bv30)

- Data Space Offering (bv20)

|

A party that commits to a data product contract concerning one or more data products.

Explanatory Texts :

- A data product consumer is a data space participant.

- The data product consumer can be referred to as the data user. In principle, the data product consumer could be a different party than the eventual data user, but in many cases these parties are the same and the terms are used exchangeably.

|

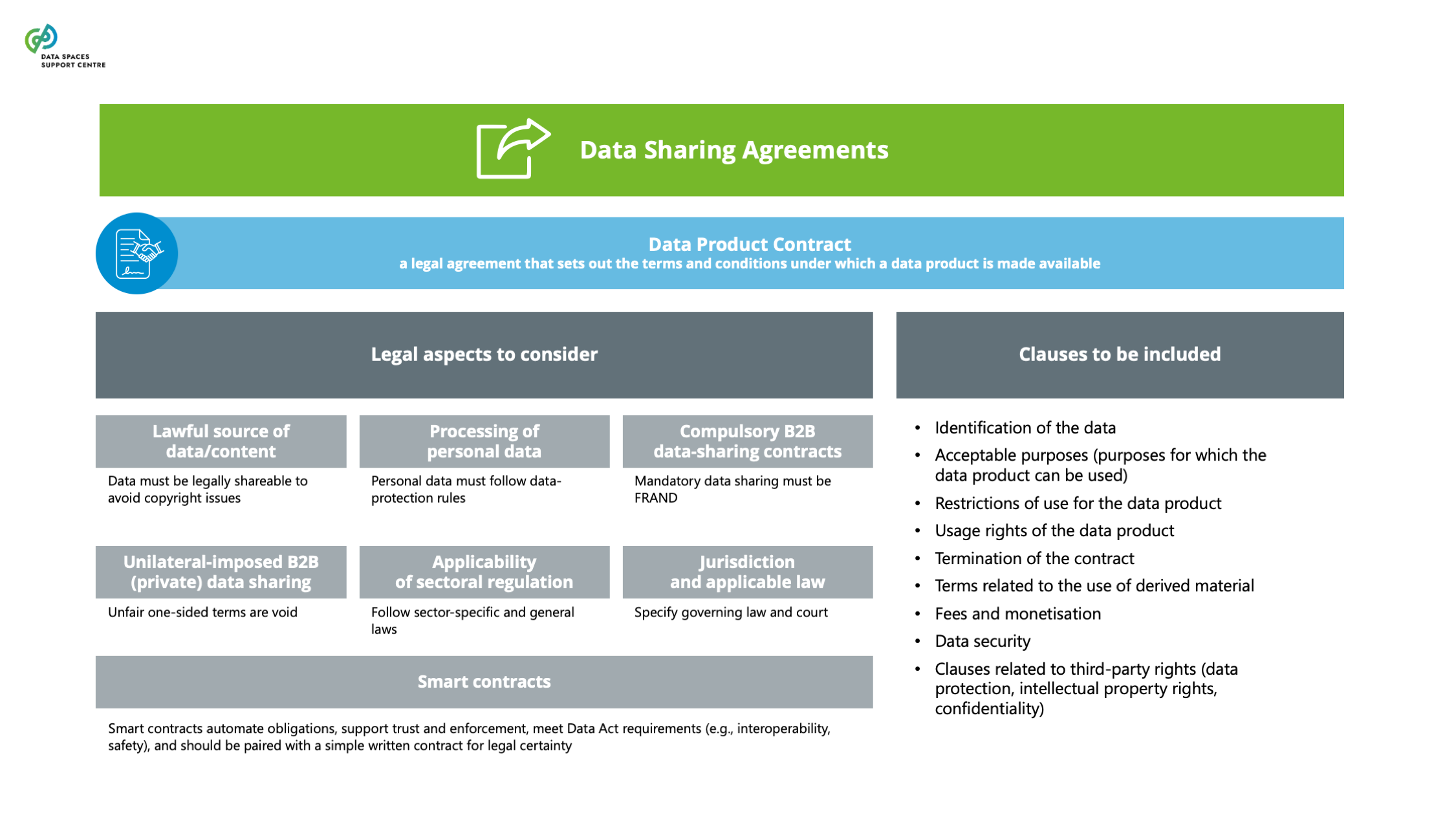

| Data Product Contract

Sources (vsn) :

- 5 Data Products and Transactions (bv30)

- Contractual Framework (bv30)

- Data Space Offering (bv20)

|

A legal contract that specifies a set of rights and duties for the respective parties that will perform the roles of the data product provider and data product consumer. |

| Data Product Owner

Sources (vsn) :

- 5 Data Products and Transactions (bv30)

- Data Space Offering (bv20)

|

A party that develops, manages and maintains a data product.

Explanatory Texts :

- The data product owner can be the same party as the data rights holder, or it can receive the right to process the data from the data rights holder (the right can be obtained through a permission, consent or license and may be ruled by a legal obligation or a contract).

- A data product owner is not necessarily a data space participant.

|

| Data Product Provider

Sources (vsn) :

- 5 Data Products and Transactions (bv30)

- Data Space Offering (bv20)

|

A party that acts on behalf of a data product owner in providing, managing and maintaining a data product in a data space.

Explanatory Texts :

- The data product provider can be referred to as the data provider. In general use that is fine, but in specific cases the data product provider might be a different party than the data rights holder and different than the data product owner.

- A data product provider is a data space participant.

- For reference, the definition from the CEN Workshop Agreement Trusted Data Transactions: a party that has the right or duty to make data available to data users through data products. Note: a data provider carries out several activities, ie.: - non-technical, on behalf of a data rights holder, including the description of the data products, data licence terms, the publishing of data products in a data product catalogue, the negotiation with the data users, and the conclusion of contracts, - technical, with the provision of the data products to the data users.

|

| Data Provider

Source (vsn) : 5 Data Products and Transactions (bv30)

|

A synonym of data product provider |

| Data Provider

Source (vsn) : Publication and Discovery (bv30)

|

A provider of data or service. |

| Data Recipient (Data Act)

Source (vsn) : Regulatory Compliance (bv20)

|

a natural or legal person, acting for purposes which are related to that person’s trade, business, craft or profession, other than the user of a connected product or related service, to whom the data holder makes data available, including a third party following a request by the user to the data holder or in accordance with a legal obligation under Union law or national legislation adopted in accordance with Union law;( DA Article 2(14) )

Explanatory Texts :

- Data recipient has a broader (not limited to IoT) meaning in the context of data transactions enabled by data space: ''A transaction participant to whom data is, or is to be technically supplied by a data provider in the context of a specific data transaction''.

- When we use data recipient in the meaning of DA (IoT data), we specify it with DA at the end of the word.

|

| Data Rights Holder

Sources (vsn) :

- 5 Data Products and Transactions (bv30)

- Access & Usage Policies Enforcement (bv20)

|

A party with legitimate interests to exercise rights under Union law affecting the use of data or imposing obligations on other parties in relation to the data.

Explanatory Texts :

- This party can be a data space participant, but not necessarily, when the party provides permission/consent for a data provider or data product provider to act on its behalf and participate in the actual data sharing.

- Previous definition (for reference): A party that has legal rights and/or obligations to use, grant access to or share certain personal or non-personal data. Data rights holders may transfer such rights to others.

- For reference, the definition from the CEN Workshop Agreement Trusted Data Transactions: a natural or legal person that has legal rights or obligations to use, grant access to or share certain data, or transfer such rights to others

|

| Data Schema

Sources (vsn) :

- Data Models (bv20)

- Data Models (bv30)

|

A data model that defines the structure, data types, and constraints. Such a schema includes the technical details of the data structure for the data exchange, usually expressed in metamodel standards like JSON or XML Schema. |

| Data Service

Sources (vsn) :

- 4 Data Space Services (bv30)

- 5 Data Products and Transactions (bv20)

|

A collection of operations that provides access to one or more datasets or data processing functions. For example, data selection, extraction, data delivery. |

| Data Sharing

Source (vsn) : Regulatory Compliance (bv20)

|

the provision of data by a data subject or a data holder to a data user for the purpose of the joint or individual use of such data, based on voluntary agreements or Union or national law, directly or through an intermediary, for example under open or commercial licences subject to a fee or free of charge;( DGA Article 2(10) )

Explanatory Text : In the context of data spaces, data sharing refers to a full spectrum of practices related to sharing any kind of data, including all relevant technical, financial, legal, and organisational requirements.

|

| Data Sovereignty

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

The ability of individuals, organisations, and governments to have control over their data and exercise their rights on the data, including its collection, storage, sharing, and use by others.

Explanatory Text : Data sovereignty is a central concept in the European data strategy and recent European laws and regulations are expanding upon these rights and controls. EU law applies to data collected in the EU and/or about data subjects in the EU.

|

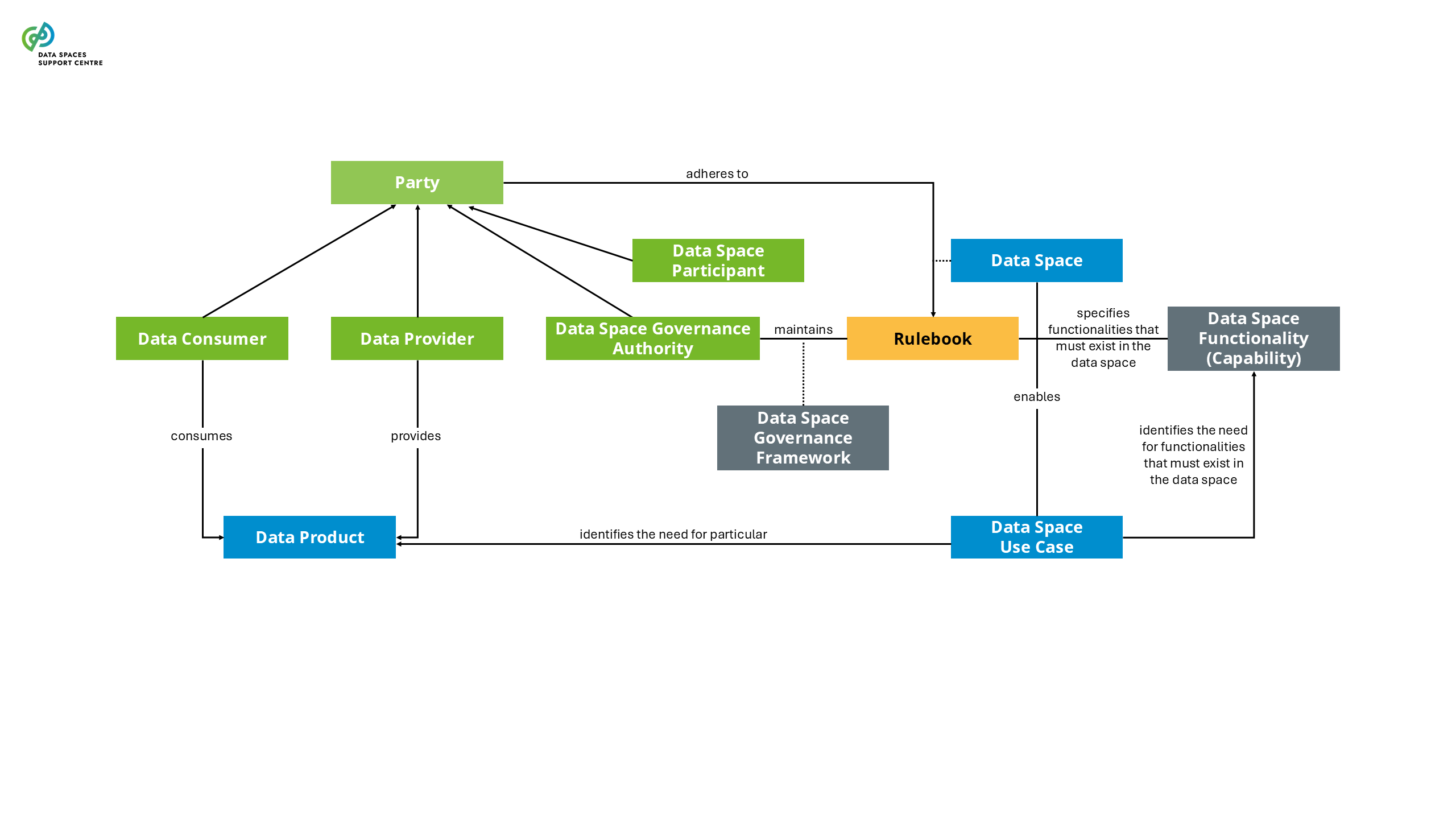

| Data Space

Sources (vsn) :

- 1 Key Concept Definitions (bv30)

- Data, Services, and Offerings Descriptions (bv20)

- Data, Services, and Offerings Descriptions (bv30)

- Participation Management (bv30)

|

Interoperable framework, based on common governance principles, standards, practices and enabling services, that enables trusted data transactions between participants.

Explanatory Texts :

- Note for users of V0.5 and V1.0 of this blueprint: we have adopted this new definition from CEN Workshop Agreement Trusted Data Transactions, in an attempt to converge with ongoing standardisation efforts. Please note that further evolution might occur in future versions. For reference, the previous definition was: “Distributed system defined by a governance framework that enables secure and trustworthy data transactions between participants while supporting trust and data sovereignty. A data space is implemented by one or more infrastructures and enables one or more use cases.”

- Note: some parties write dataspace in a single word. We prefer data space in two words and consider that both terms mean exactly the same.

|

| Data Space Agreement

Source (vsn) : Contractual Framework (bv30)

|

A contract that states the rights and duties (obligations) of parties that have committed to (signed) it in the context of a particular data space. These rights and duties pertain to the data space and/or other such parties. |

| Data Space Building Block

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

A description of related functionalities and/or capabilities that can be realised and combined with other building blocks to achieve the overall functionality of a data space.

Explanatory Texts :

- In the data space blueprint the building blocks are divided into organisational and business building blocks and technical building blocks.

- In many cases, the functionalities are implemented by Services

|

| Data Space Component

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

A specification for a software or other artefact that realises one service or a set of services that fulfil functionalities described by one or more building blocks.

Explanatory Text : For technical components, that would typically be software, but for business components, this could consist of processes, templates or other artefacts.

|

| Data Space Component Architecture

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

An overview of all the data space components and their interactions, providing a high-level structure of how these components are organised and interact within data spaces. |

| Data Space Connector

Source (vsn) : 4 Data Space Services (bv20)

|

A technical component that is run by (or on behalf of) a participant and that provides participant agent services, with similar components run by (or on behalf of) other participants.

Explanatory Text : A connector can provide more functionality than is strictly related to connectivity. The connector can offer technical modules that implement data interoperability functions, authentication interfacing with trust services and authorisation, data product self-description, contract negotiation, etc. We use “participant agent services” as the broader term to define these services.

|

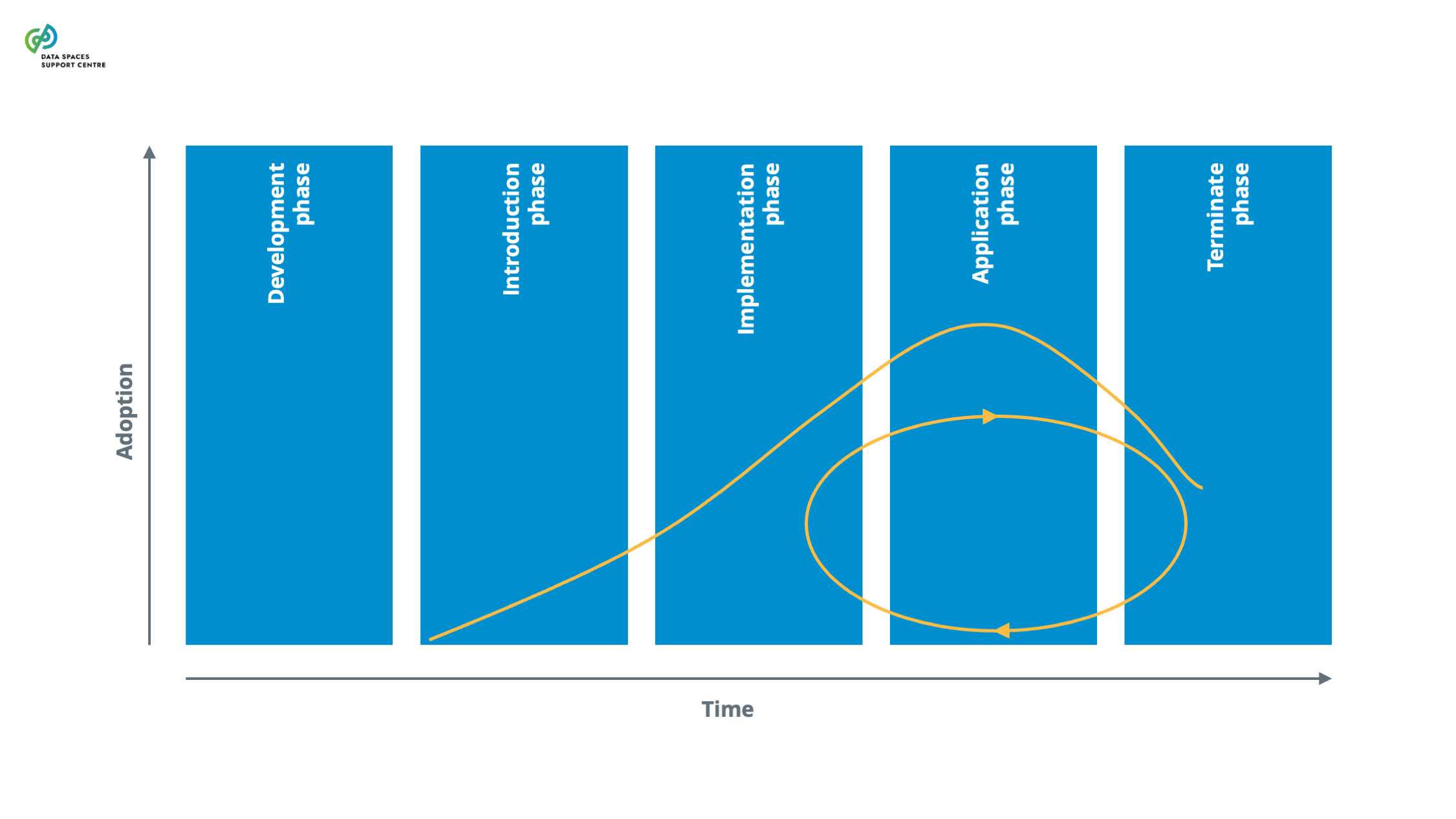

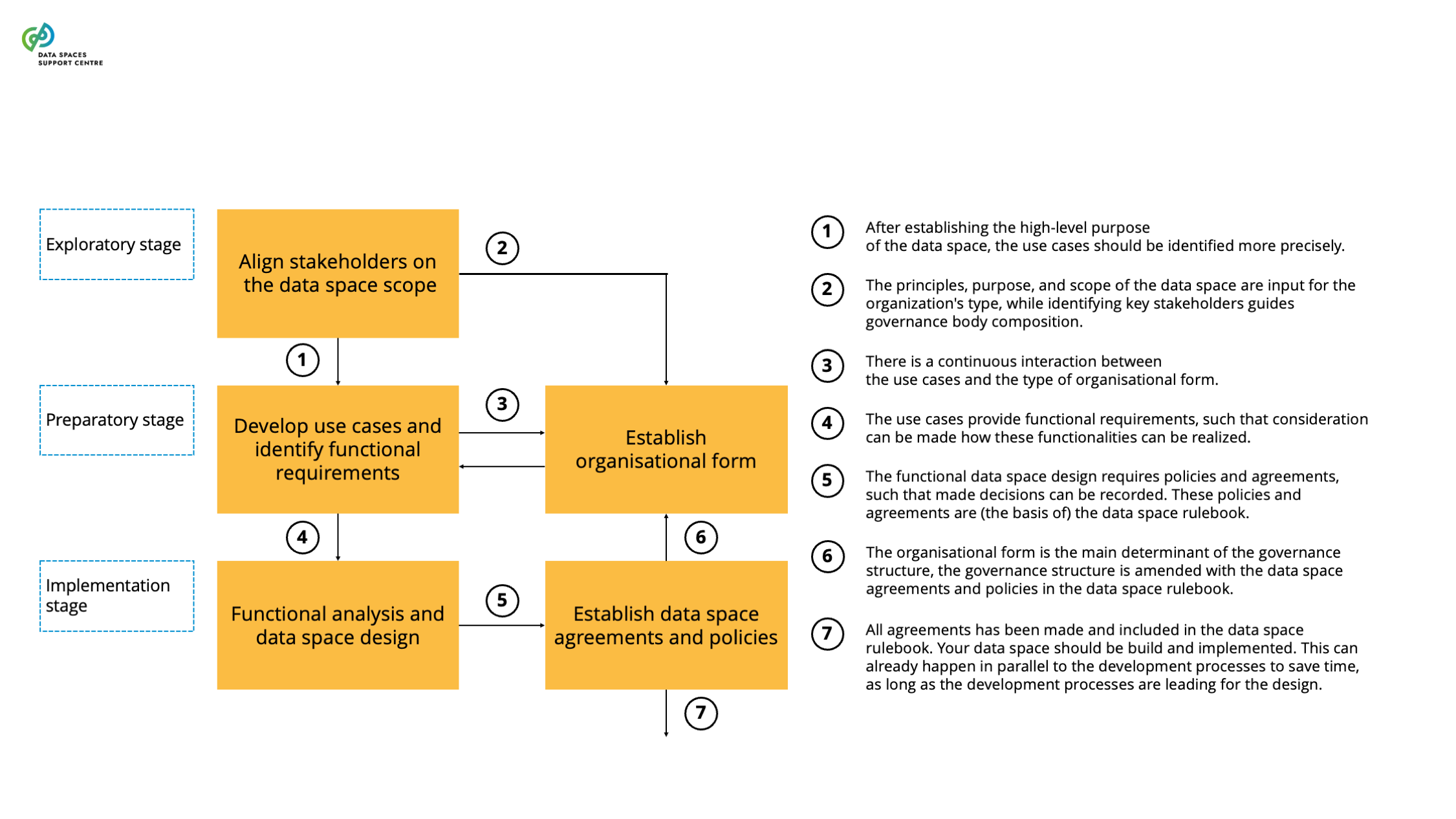

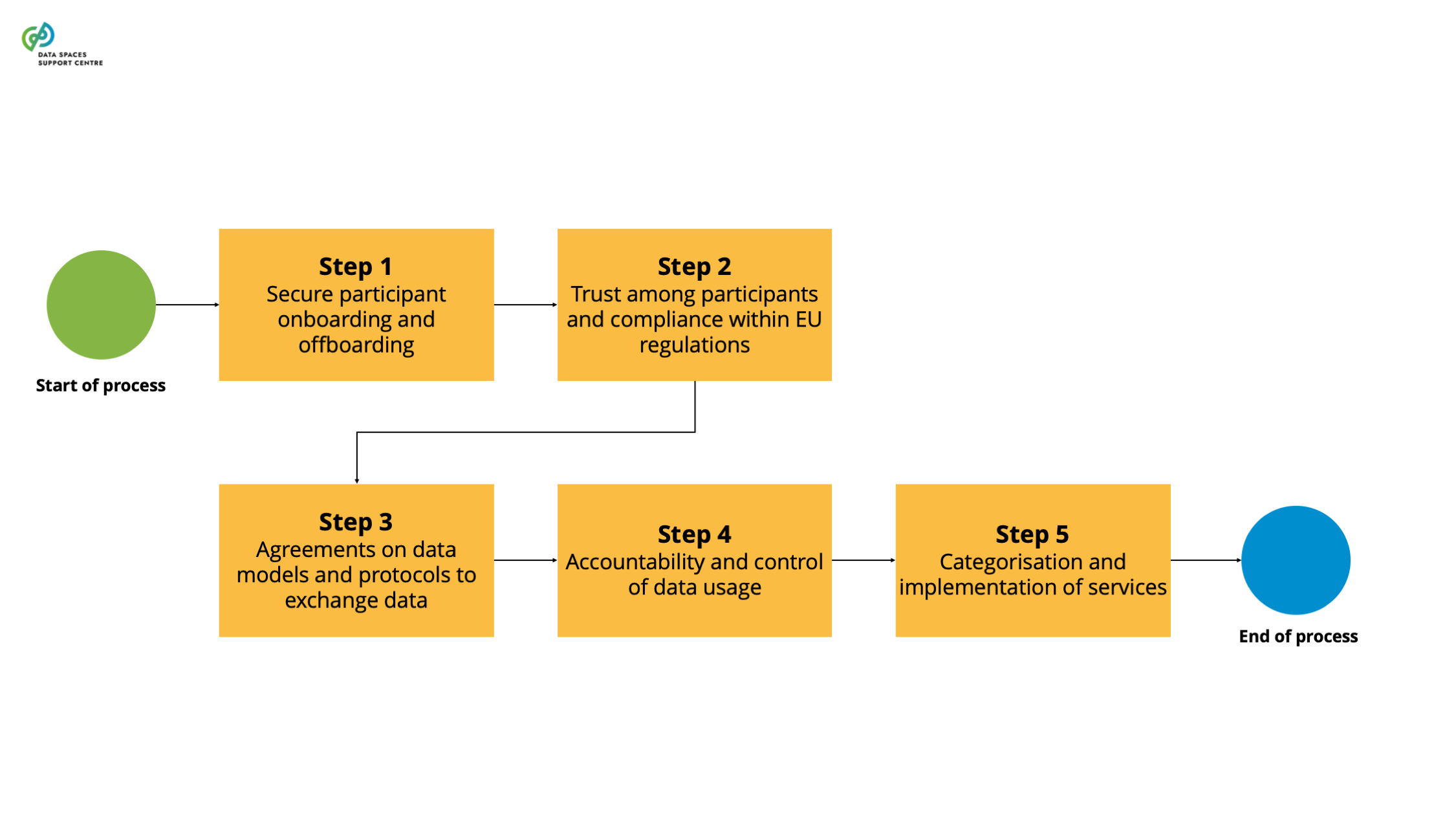

| Data Space Development Processes

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A set of essential processes the stakeholders of a data space initiative conduct to establish and continuously develop a data space throughout its development cycle. |

| Data Space Functionality

Source (vsn) : 1 Key Concept Definitions (bv30)

|

A specified set of tasks that are critical for operating a data space and that can be associated with one or more data space roles.

Explanatory Text : The data space governance framework specifies the data space functionalities and associated roles. Each functionality and associated role consist of rights and duties for performing tasks related to that functionality.

|

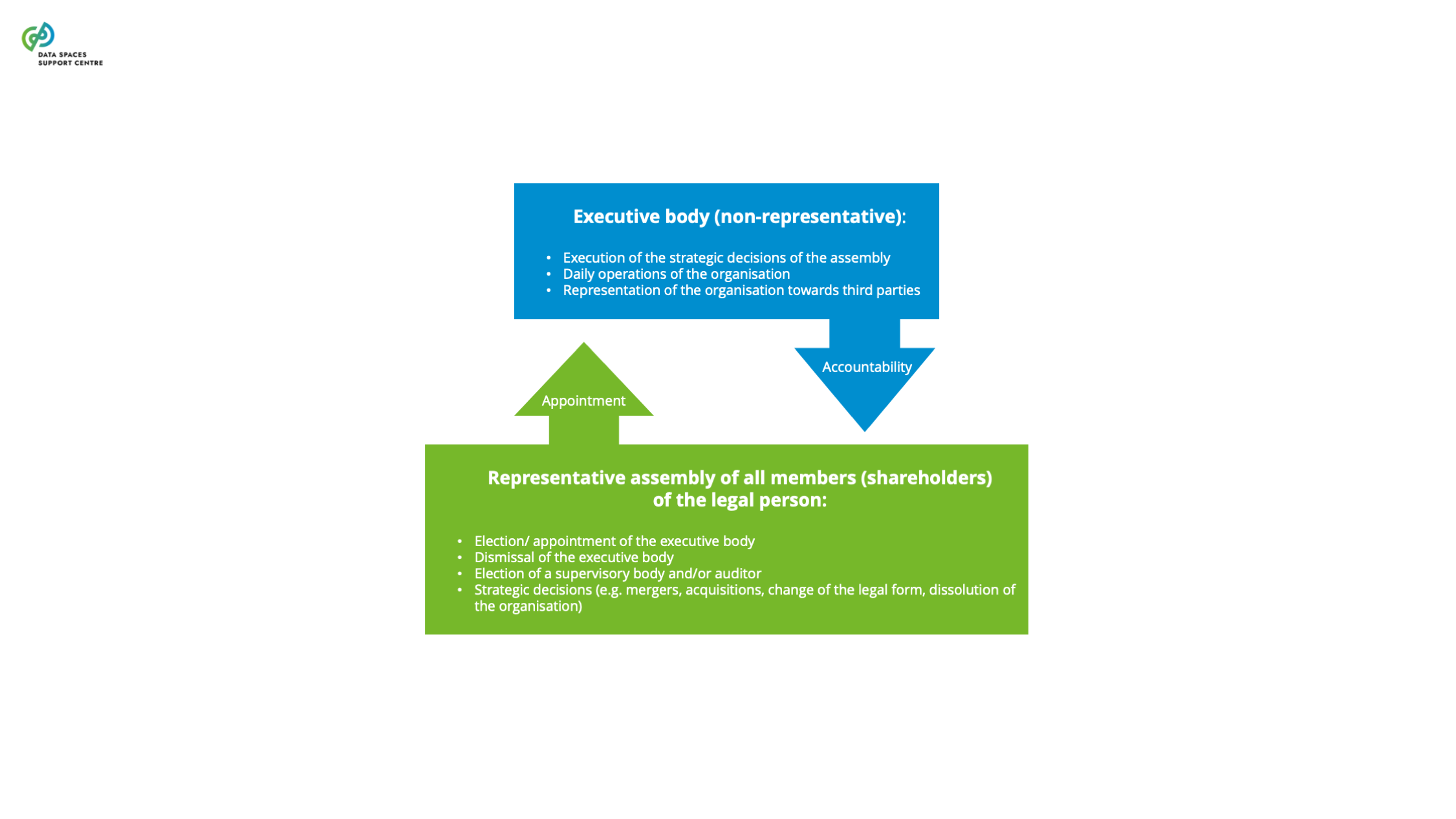

| Data Space Governance Authority

Source (vsn) : 1 Key Concept Definitions (bv30)

|

The body of a particular data space, consisting of participants that is committed to the governance framework for the data space, and is responsible for developing, maintaining, operating and enforcing the governance framework.

Explanatory Texts :

- A ‘body’ is a group of parties that govern, manage, or act on behalf of a specific purpose, entity, or area of law. This term, which has legal origins, can encompass entities like legislative bodies, governing bodies, or corporate bodies.

- The data space governance authority does not replace the role of public regulation and enforcement authorities.

- Establishing the initial data space governance framework is outside the governance authority's scope and needs to be defined by a group of data space initiating parties.

- After establishment, the governance authority performs the governing function (developing and maintaining the governance framework) and the executive function (operating and enforcing the governance framework).

- Depending on the legal form and the size of the data space, the governance and executive functions of a data space governance authority may or may not be performed by the same party.

|

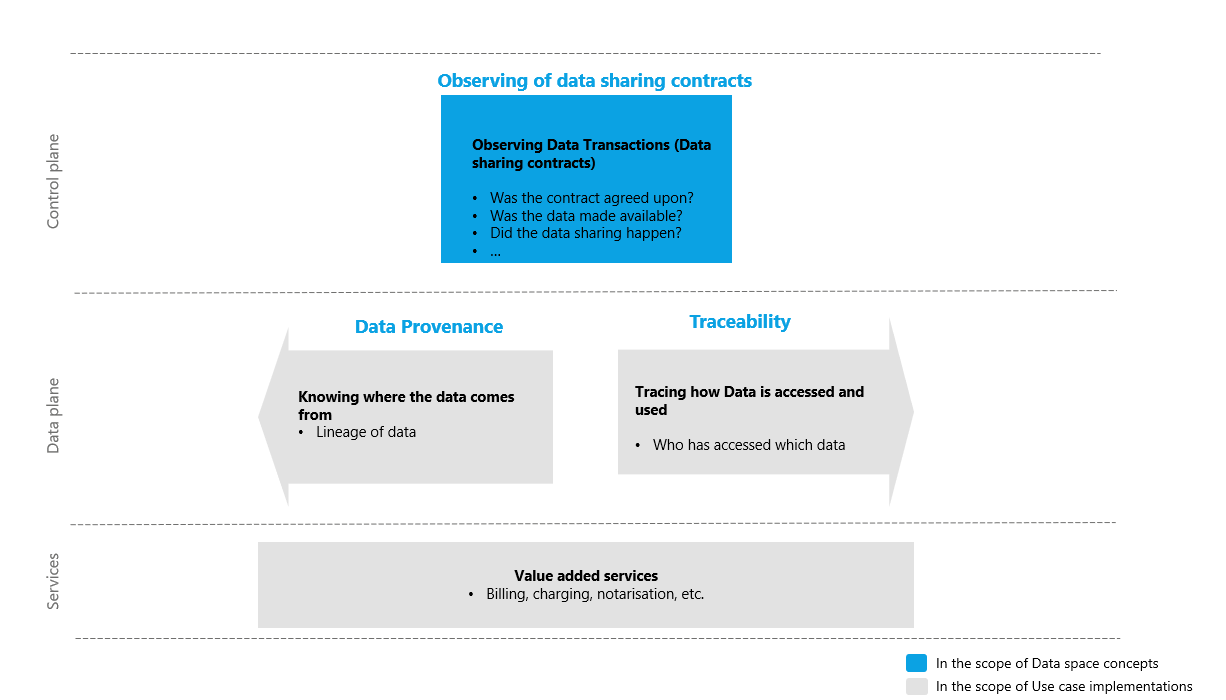

| Data Space Governance Authority

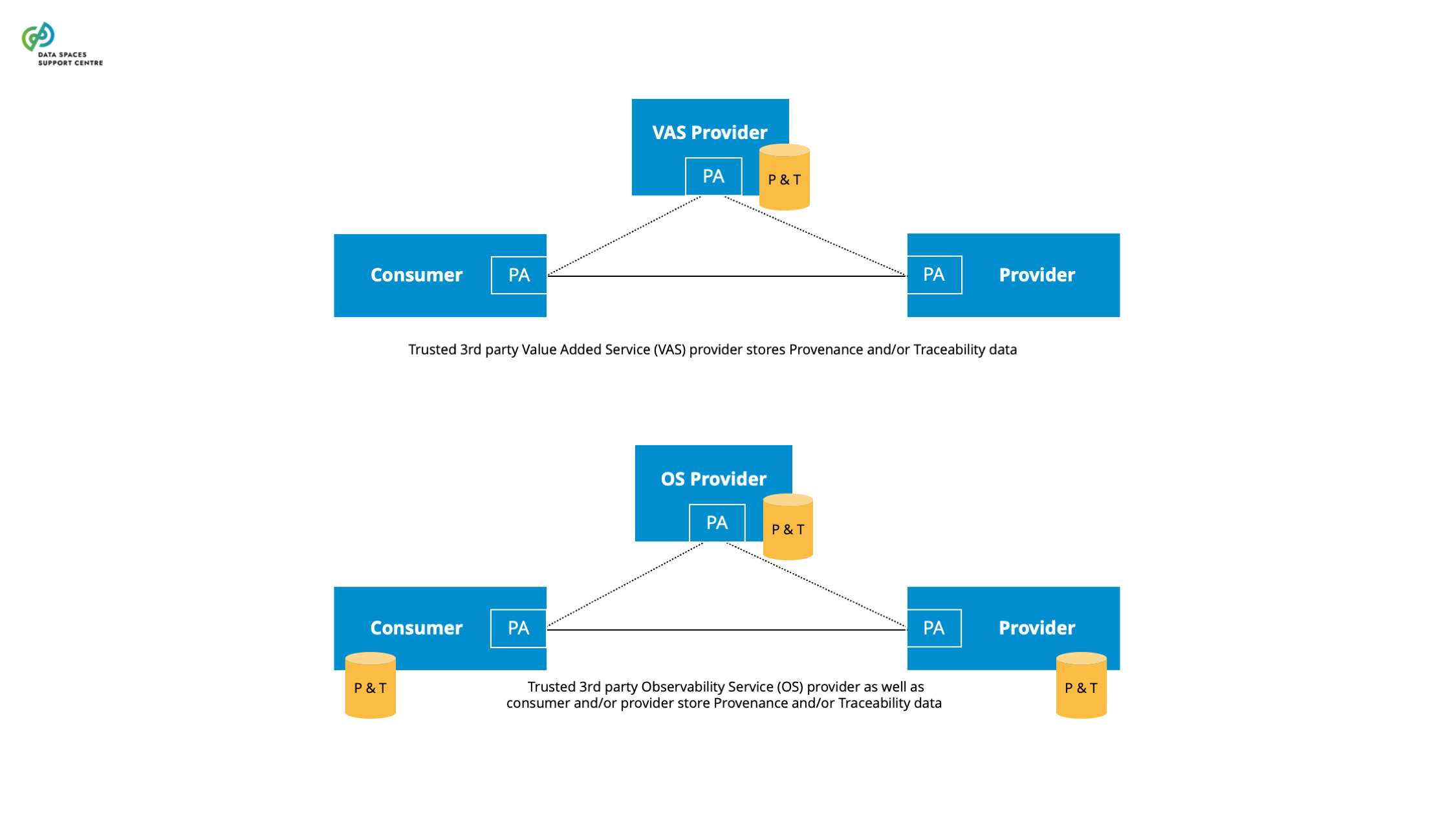

Source (vsn) : Provenance, Traceability & Observability (bv30)

|

A governance authority refers to bodies of a data space that are composed of and by data space participants responsible for developing and maintaining as well as operating and enforcing the internal rules. |

| Data Space Governance Authority

Sources (vsn) :

- Identity & Attestation Management (bv30)

- Trust Framework (bv30)

|

The body of a particular data space, consisting of participants that are committed to the governance framework for the data space, and is responsible for developing, maintaining, operating and enforcing the governance framework. |

| Data Space Governance Authority (DSGA)

Source (vsn) : Participation Management (bv30)

|

The body of a particular data space, consisting of participants that is committed to the governance framework for the data space, and is responsible for developing, maintaining, operating and enforcing the governance framework. |

| Data Space Governance Framework

Source (vsn) : 1 Key Concept Definitions (bv30)

|

The structured set of principles, processes, standards, protocols, rules and practices that guide and regulate the governance, management and operations within a data space to ensure effective and responsible leadership, control, and oversight. It defines the functionalities the data space provides and the associated data space roles, including the data space governance authority and participants.

Explanatory Texts :

- Functionalities include, e.g., the maintenance of the governance framework the functioning of the data space governance authority and the engagement of the participants.

- The responsibilities covered in the governance framework include assigning the governance authority and formalising the decision-making powers of participants.

- The data space governance framework specifies the procedures for enforcing the governance framework and conflict resolution.

- The operations may also include business and technology aspects.

|

| Data Space Infrastructure (deprecated Term)

Source (vsn) : 1 Key Concept Definitions (bv30)

|

A technical, legal, procedural and organisational set of components and services that together enable data transactions to be performed in the context of one or more data spaces.

Explanatory Text : This term was used in the data space definition in previous versions of the Blueprint. It is replaced by the governance framework and enabling services, and may be deprecated from this glossary in a future version.

|

| Data Space Initiative

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A collaborative project of a consortium or network of committed partners to initiate, develop and maintain a data space. |

| Data Space Intermediary

Sources (vsn) :

- Data, Services, and Offerings Descriptions (bv20)

- Data, Services, and Offerings Descriptions (bv30)

|

A data space intermediary is a service provider who provides an enabling service or services in a data space. In common usage interchangeable with ‘operator'. |

| Data Space Interoperability

Source (vsn) : 7 Interoperability (bv30)

|

The ability of participants to seamlessly exchange and use data within a data space or between two or more data spaces.

Explanatory Texts :

- Interoperability generally refers to the ability of different systems to work in conjunction with each other and for devices, applications or products to connect and communicate in a coordinated way without effort from the users of the systems. On a high-level, there are four layers of interoperability: legal, organisational, semantic and technical (see the European Interoperability Framework [EIF]).

- Legal interoperability: Ensuring that organisations operating under different legal frameworks, policies and strategies are able to work together.

- Organisational interoperability: The alignment of processes, communications flows and policies that allow different organisations to use the exchanged data meaningfully in their processes to reach commonly agreed and mutually beneficial goals.

- Semantic interoperability: The ability of different systems to have a common understanding of the data being exchanged.

- Technical interoperability: The ability of different systems to communicate and exchange data.

- Also the ISO/IEC 19941:2017 standard [20] is relevant here.

- Note: As per Art. 2 r.40 of the Data Act: ‘interoperability’ means the ability of two or more data spaces or communication networks, systems, connected products, applications, data processing services or components to exchange and use data in order to perform their functions. We describe this wider term as cross-data space interoperability.

|

| Data Space Maturity Model

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

Set of indicators and a self-assessment tool allowing data space initiatives to understand their stage in the development cycle, their performance indicators and their technical, functional, operational, business and legal capabilities in absolute terms and in relation to peers. |

| Data Space Offering

Source (vsn) : Data Space Offering (bv20)

|

The set of offerings provided through the data space that aim to bring value to participants. |

| Data Space Operational Processes

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A set of essential processes a potential or actual data space participant goes through when engaging with a functioning data space that is in the operational stage or scaling stage. The operational processes include attracting and onboarding participants, publishing and matching use cases, data products and data requests and eventually data transactions. |

| Data Space Participant

Sources (vsn) :

- 1 Key Concept Definitions (bv30)

- Data, Services, and Offerings Descriptions (bv20)

- Data, Services, and Offerings Descriptions (bv30)

- Participation Management (bv30)

|

A party committed to the governance framework of a particular data space and having a set of rights and obligations stemming from this framework.

Explanatory Text : Depending on the scope of the said rights and obligations, participants may perform in (multiple) different roles, such as: data space members, data space users, data space service providers and others as described in this glossary.

|

| Data Space Pilot

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A planned and resourced implementation of one or more use cases within the context of a data space initiative. A data space pilot aims to validate the approach for a full data space deployment and showcase the benefits of participating in the data space. |

| Data Space Role

Sources (vsn) :

- 1 Key Concept Definitions (bv30)

- Participation Management (bv30)

|

A distinct and logically consistent set of rights and duties (responsibilities) within a data space, that are required to perform specific tasks related to a data space functionality, and that are designed to be performed by one or more participants.

Explanatory Texts :

- The governance framework of a data space defines the data space roles.

- Parties can perform (be assigned, or simply ‘be’) multiple roles, such as data provider, transaction participant, data space intermediary, etc.. In some cases, a prerequisite for performing a particular role is that the party can already perform one or more other roles. For example, the data provider must also be a data space participant.

|

| Data Space Rulebook

Source (vsn) : 1 Key Concept Definitions (bv30)

|

The documentation of the data space governance framework for operational use.

Explanatory Text : The rulebook can be expressed in human-readable and machine-readable formats.

|

| Data Space Service Offering Credential

Source (vsn) : Identity & Attestation Management (bv30)

|

A service description that follows the schemas defined by the Data Space Governance Authority and whose claims are validated by the Data Space Compliance Service. |

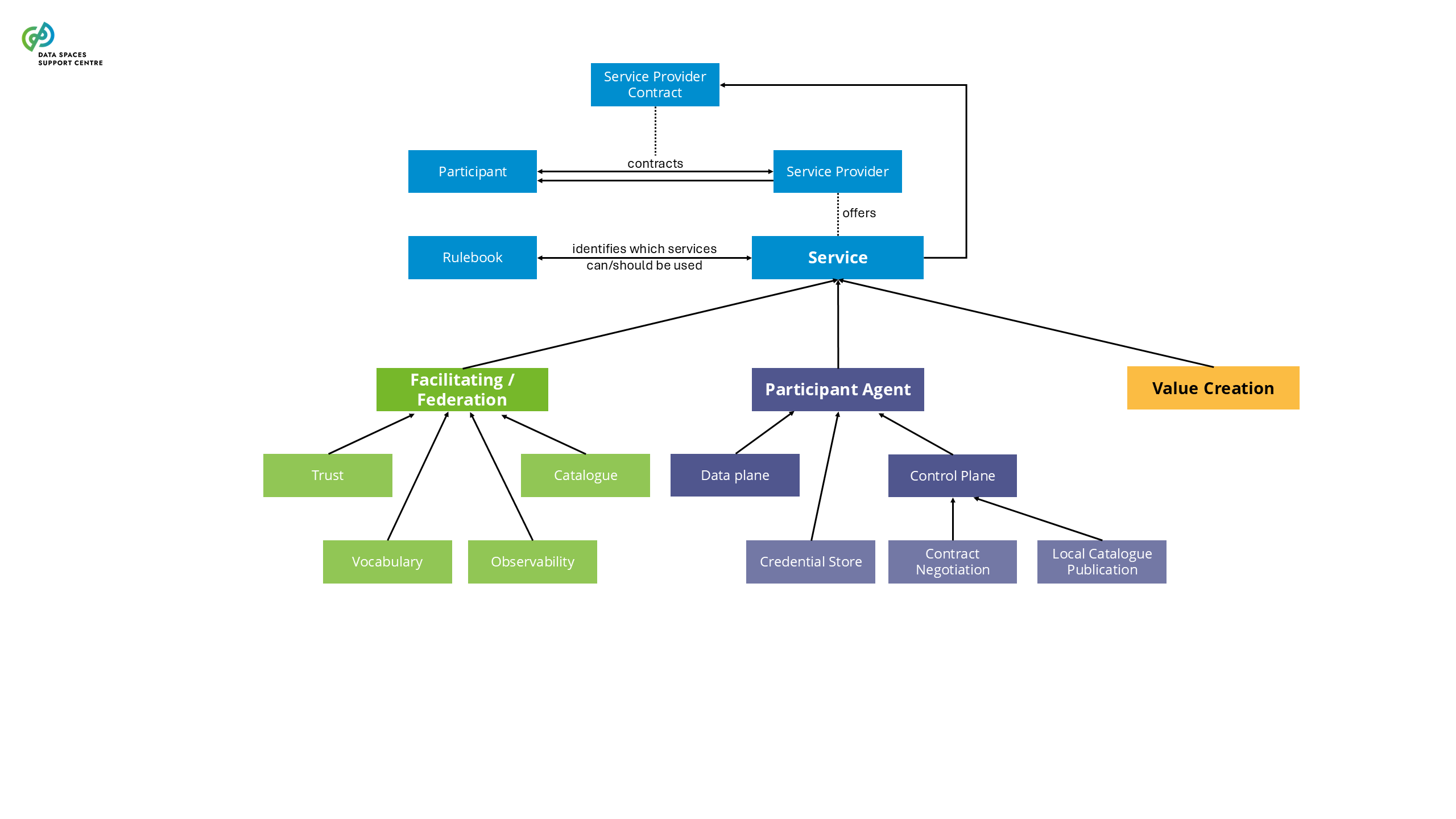

| Data Space Services

Sources (vsn) :

- 1 Key Concept Definitions (bv30)

- 4 Data Space Services (bv30)

|

Functionalities for implementing data space capabilities, offered to participants of data spaces.

Explanatory Texts :

- We distinguish three classes of technical services which are further defined in section 4 of this glossary: Participant Agent Services, Facilitating Services, Value-Creation Services. Technical (software) components are needed to implement these services.

- Also on the business and organisational side, services may exist to support participants and orchestrators of data spaces, further defined in section 4 of this glossary and discussed in the Business and Organisational building blocks introduction.

- Please note that a Data Service is a specific type of service related to the data space offering, providing access to one or more datasets or data processing functions.

|

| Data Space Support Organisation

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

An organisation, consortium, or collaboration network that specifies architectures and frameworks to support data space initiatives. Examples include Gaia-X, IDSA, FIWARE, iSHARE, MyData, BDVA, and more. |

| Data Space Use Case

Source (vsn) : 1 Key Concept Definitions (bv30)

|

A specific setting in which two or more participants use a data space to create value (business, societal or environmental) from data sharing.

Explanatory Texts :

- By definition, a data space use case is operational. When referring to a planned or envisioned setting that is not yet operational we can use the term use case scenario.

- Use case scenario is a potential use case envisaged to solve societal, environmental or business challenges and create value. The same use case scenario, or variations of it, can be implemented as a use case multiple times in one or more data spaces.

|

| Data Space Value

Source (vsn) : 2 Data Space Use Cases and Business Model (bv30)

|

The cumulative value generated from all the data transactions and use cases within a data space as data space participants collaboratively use it.

Explanatory Text : The definition of “data space value” is agnostic to value sharing and value capture. It just states where the value is created (in the use cases). The use case orchestrator should establish a value-sharing mechanism within the use case to make all participants happy. Furthermore, to avoid the free rider problem, the data space governance authority may also want to establish a value capture mechanism (for example, a data space usage fee) to get its part from the value created in the use cases.

|

| Data Spaces Blueprint

Sources (vsn) :

- 9 Building Blocks and Implementations (bv20)

- 9 Building Blocks and Implementations (bv30)

|

A consistent, coherent and comprehensive set of guidelines to support the implementation, deployment and maintenance of data spaces.

Explanatory Text : The blueprint contains the conceptual model of data space, data space building blocks, and recommended selection of standards, specifications and reference implementations identified in the data spaces technology landscape.

|

| Data Spaces Information Model

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A classification scheme used to describe, analyse and organise a data space initiative according to a defined set of questions. |

| Data Spaces Radar

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A publicly accessible tool to provide an overview of the data space initiatives, their sectors, locations and approximate development stages. |

| Data Spaces Starter Kit

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

A document that helps organisations and individuals in the network of stakeholders to understand the requirements for creating a data space. |

| Data Spaces Support Centre

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

The virtual organisation and EU-funded project which supports the deployment of common European data spaces and promotes the reuse of data across sectors. |

| Data Spaces Technology Landscape

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

A repository of standards (de facto and de jure), specifications and open-source reference implementations available for deploying data spaces. The Data Space Support Centre curates the repository and publishes it with the blueprint. |

| Data Storage

Source (vsn) : Access & Usage Policies Enforcement (bv20)

|

Process of saving digital data in a physical or virtual location for future retrieval and use. |

| Data Subject

Source (vsn) : Regulatory Compliance (bv20)

|

an identified or identifiable natural person that personal data relates to.( GDPR Article 4(1) )

Explanatory Text : Data subject is implicitly defined in the definition of ‘personal data’. In the context of data spaces we use the broader term data rights holder, to refer to the party that has (legal) rights and/or obligations to use, grant access to or share certain personal or non-personal data. For personal data, this would equal the data subject.

|

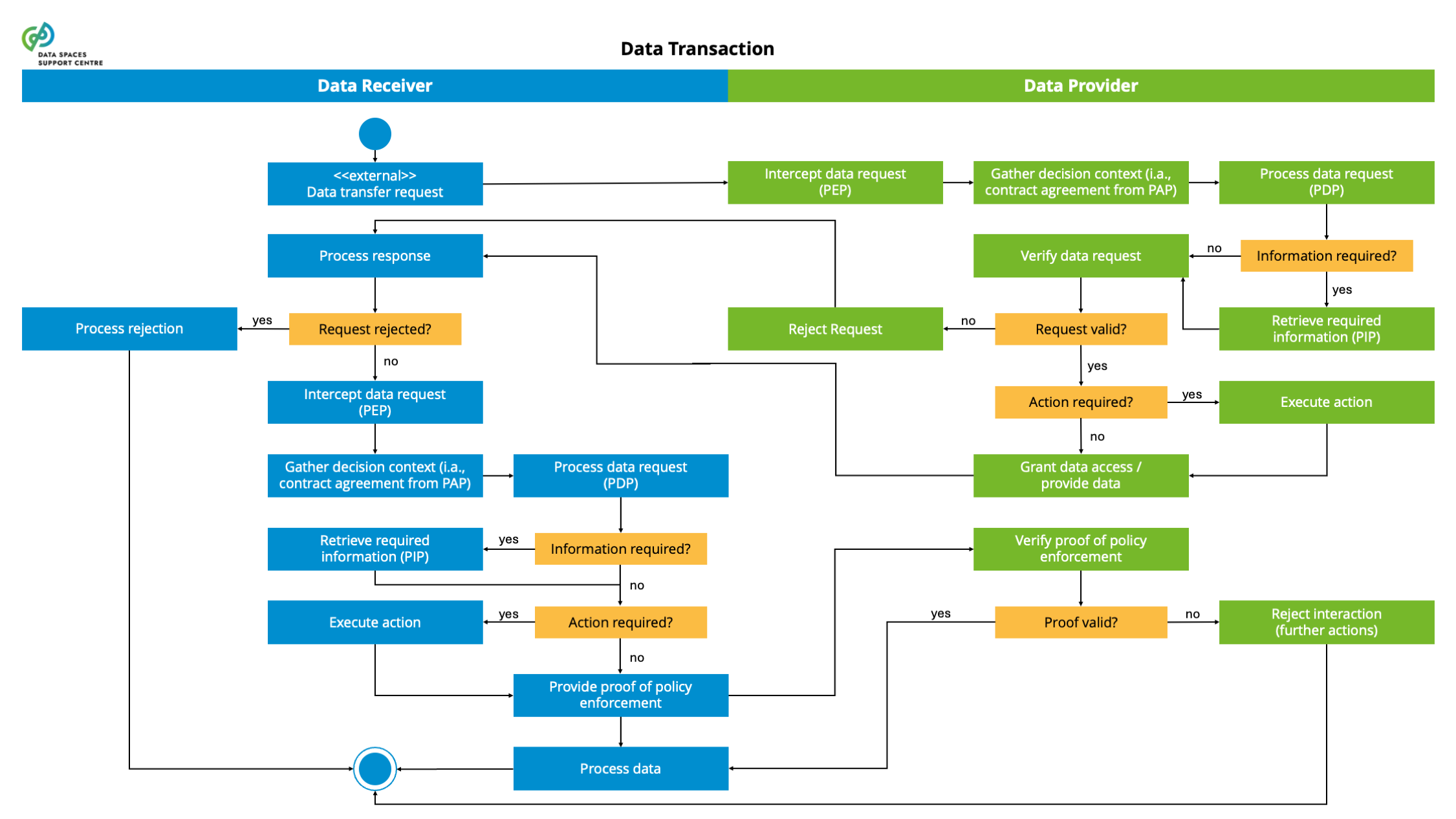

| Data Transaction

Sources (vsn) :

- 1 Key Concept Definitions (bv30)

- Participation Management (bv30)

|

A structured interaction between data space participants for the purpose of providing and obtaining/using a data product. An end-to-end data transaction consists of various phases, such as contract negotiation, execution, usage, etc.

Explanatory Texts :

- In future work we may align further with the definition from CEN Workshop Agreement Trusted Data Transactions: result of an agreement between a data provider and a data user with the purpose of exchanging, accessing and using data, in return for monetary or non-monetary compensation.

- A data transaction implies data transfer among involved participants and its usage on a lawful and contractual basis. It relates to the technical, financial, legal and organisational arrangements necessary to make a data set from Participant A available to Participant B. The physical data transfer may or may not happen during the data transaction.

- Prerequisites:

- the specification of data products and the creation and publication of data product offerings so parties can search for offerings, compare them and engage in data transactions to obtain the offered data product.

- Key elements related to data transactions are:

- negotiation (at the business level) of a contract between the provider and user of a data product, which includes, e.g., pricing, the use of appropriate intermediary services, etc.

- negotiation (at the operational level) of an agreement between the provider and the user of a data product, which includes, e.g., technical policies and configurations, such as sending

- ensuring that parties that provide, receive, use, or otherwise act with the data have the rights/duties they need to comply with applicable policies and regulations (e.g. from the EU)

- accessing and/or transferring the data (product) between provider, user, and transferring this data and/or meta-data to (contractually designated) other participants, such as observers, clearing house services, etc.

- Data access and data usage by the data consumer.

- All activities listed above do not need to be conducted in every transaction and that parts of the activities may be visited in loops or conditional flows.

|

| Data Transaction

Source (vsn) : Participation Management (bv20)

|

In the operational and scaling stage of a data space, the number of participants and use cases grows organically. The Data Space Governance Framework defines roles, responsibilities, and policies for data management, while the task of the Data Space Governance Authority is to enable seamless interaction among the participants. While use cases are executed and data products and data requests are published by the participants, the Data Space Governance Authority must carefully consider imbalances between supply and demand and consequently establish means to attract new participants to the data space to tackle the imbalances.As the data space grows, the Data Space Governance Authority needs to regularly screen the governance framework and eventually adapt it to address emerging needs and challenges. These adaptations may arise from various factors, such as regulatory changes that impose new requirements on participants, or the strategic goal of expanding the data space to include new industries, companies, or countries with distinct regulations and standards. To successfully accommodate such expansions, the governance framework must remain flexible and inclusive, enabling the integration of diverse stakeholders while maintaining robust compliance, security, and interoperability. Potential adaptations must remain in line with the data space’s central mission/vision. |

| Data Usage Policy

Source (vsn) : 6 Data Policies and Contracts (bv30)

|

A specific data policy defined by the data rights holder for the usage of their data shared in a data space.

Explanatory Text : Data usage policy regulates the permissible actions and behaviours related to the utilisation of the accessed data, which means keeping control of data even after the items have left the trust boundaries of the data provider.

|

| Data User

Source (vsn) : Regulatory Compliance (bv20)

|

a natural or legal person who has lawful access to certain personal or non-personal data and has the right, including under Regulation (EU) 2016/679 in the case of personal data, to use that data for commercial or noncommercial purposes;( DGA Article 2 (9)) |

| Data User

Source (vsn) : 5 Data Products and Transactions (bv30)

|

a natural or legal person who has lawful access to certain personal or non-personal data and has the right, including under Regulation (EU) 2016/679 in the case of personal data, to use that data for commercial or noncommercial purposes; ( DGA Article 2 (9))

Explanatory Texts :

- In many cases the data user is the same party as the data consumer or data product consumer, but exceptions may exist where these roles are separate.

- For reference, the definition from the CEN Workshop Agreement Trusted Data Transactions which considers the term to be synonymous with data consumer: person or organization authorized to exploit data (ISO 5127:2017) Note 1: Data are in the form of data products. Note 2: Data consumer is considered as a synonym of data user.

|

| Dataset Description

Sources (vsn) :

- Data, Services, and Offerings Descriptions (bv20)

- Data, Services, and Offerings Descriptions (bv30)

|

A description of a dataset includes various attributes, such as spatial, temporal, and spatial resolution. The description encompasses attributes related to distribution of datasets such as data format, packaging format, compression format, frequency of updates, download URL, and more. These attributes provide essential metadata that enables data recipients to understand the nature and usability of the datasets. |

| Dataspace Protocol

Source (vsn) : Provenance, Traceability & Observability (bv30)

|

The Eclipse data space Protocol is a set of specifications that enable secure, interoperable data sharing between independent entities by defining standardized models, contracts, and processes for publishing, negotiating, and transferring data within data space s.The current specification can be found at: https://eclipse-dataspace-protocol-base.github.io/DataspaceProtocol |

| Declaration

Source (vsn) : Identity & Attestation Management (bv30)

|

first-party attestation (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

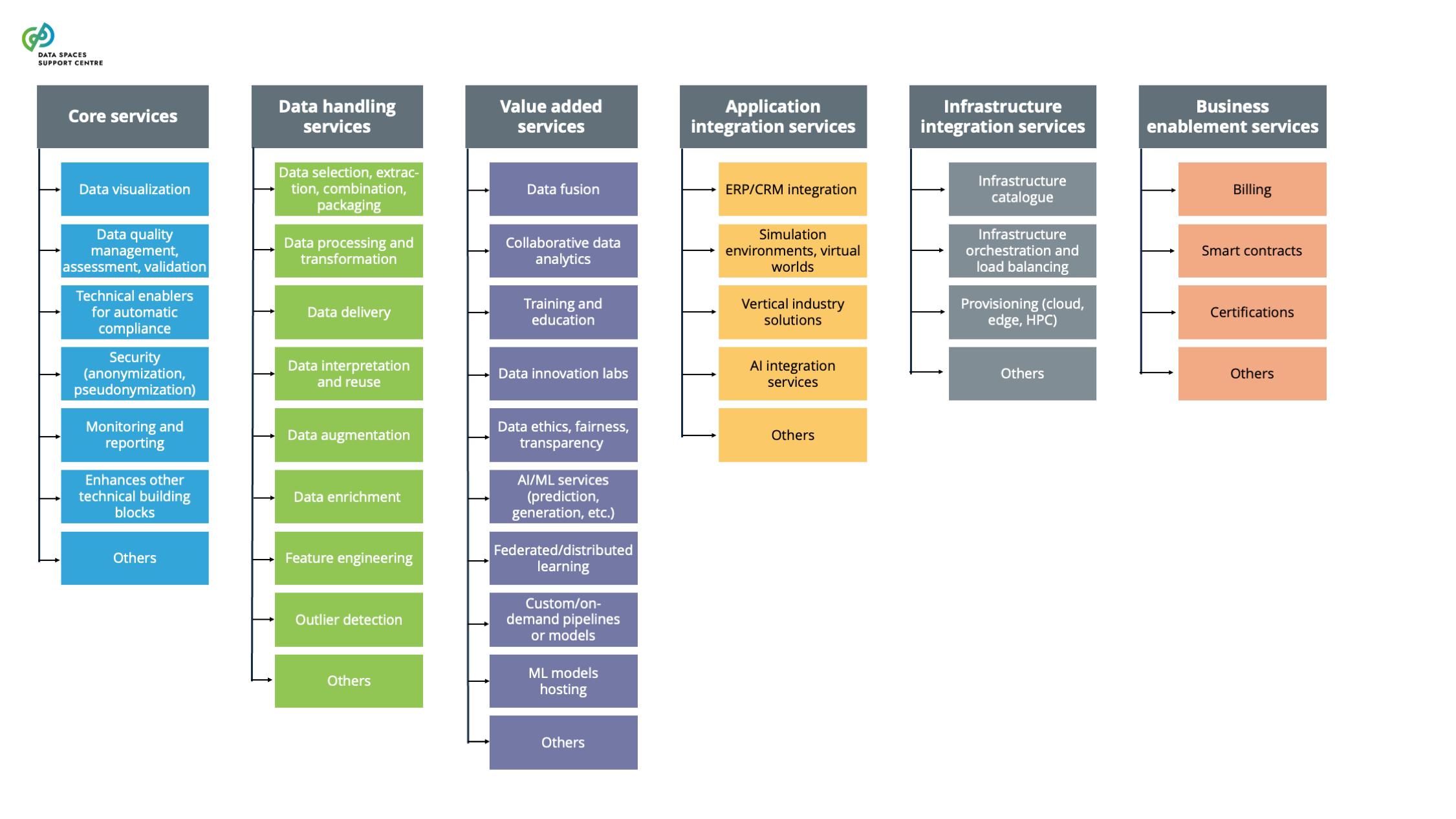

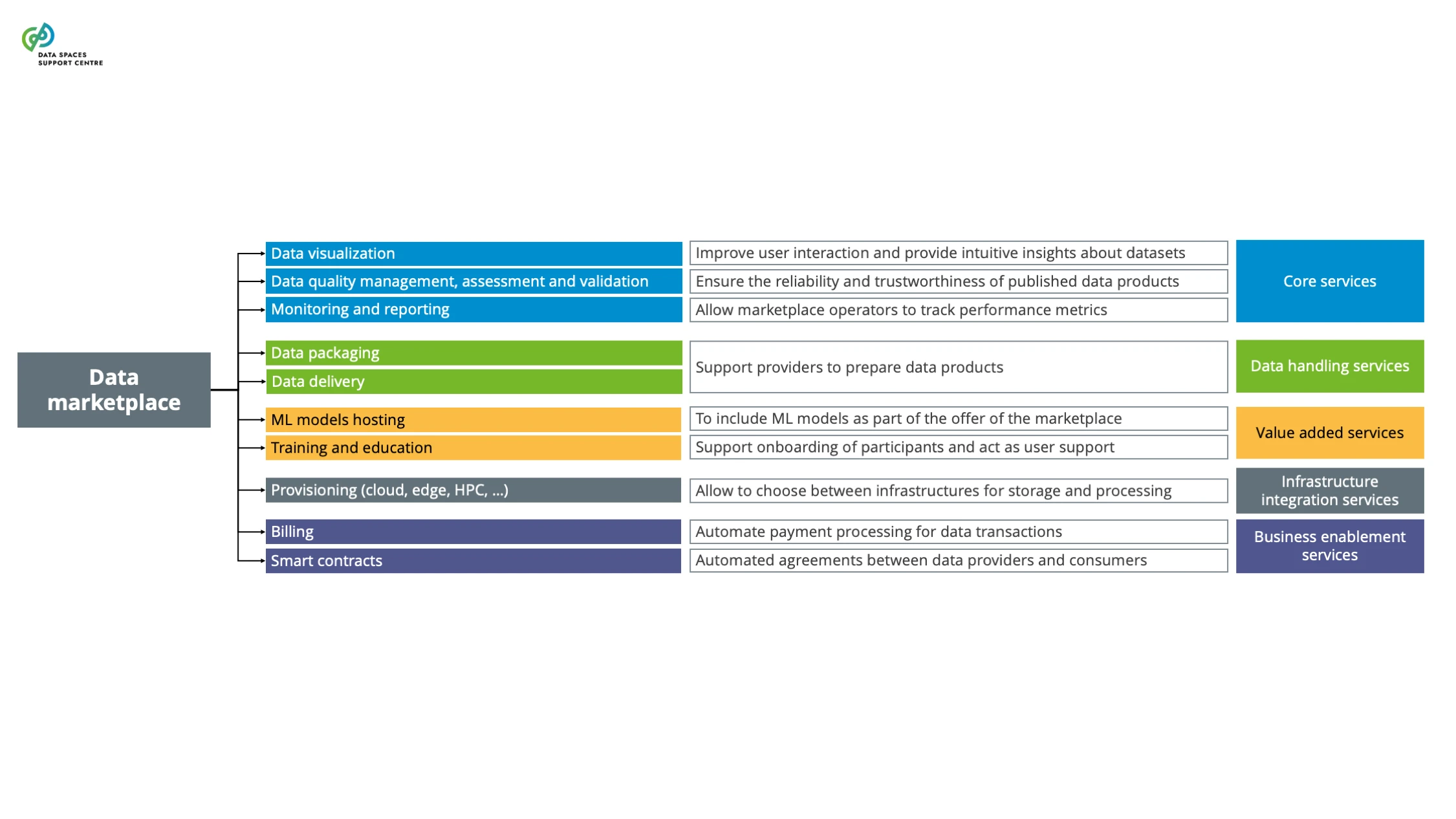

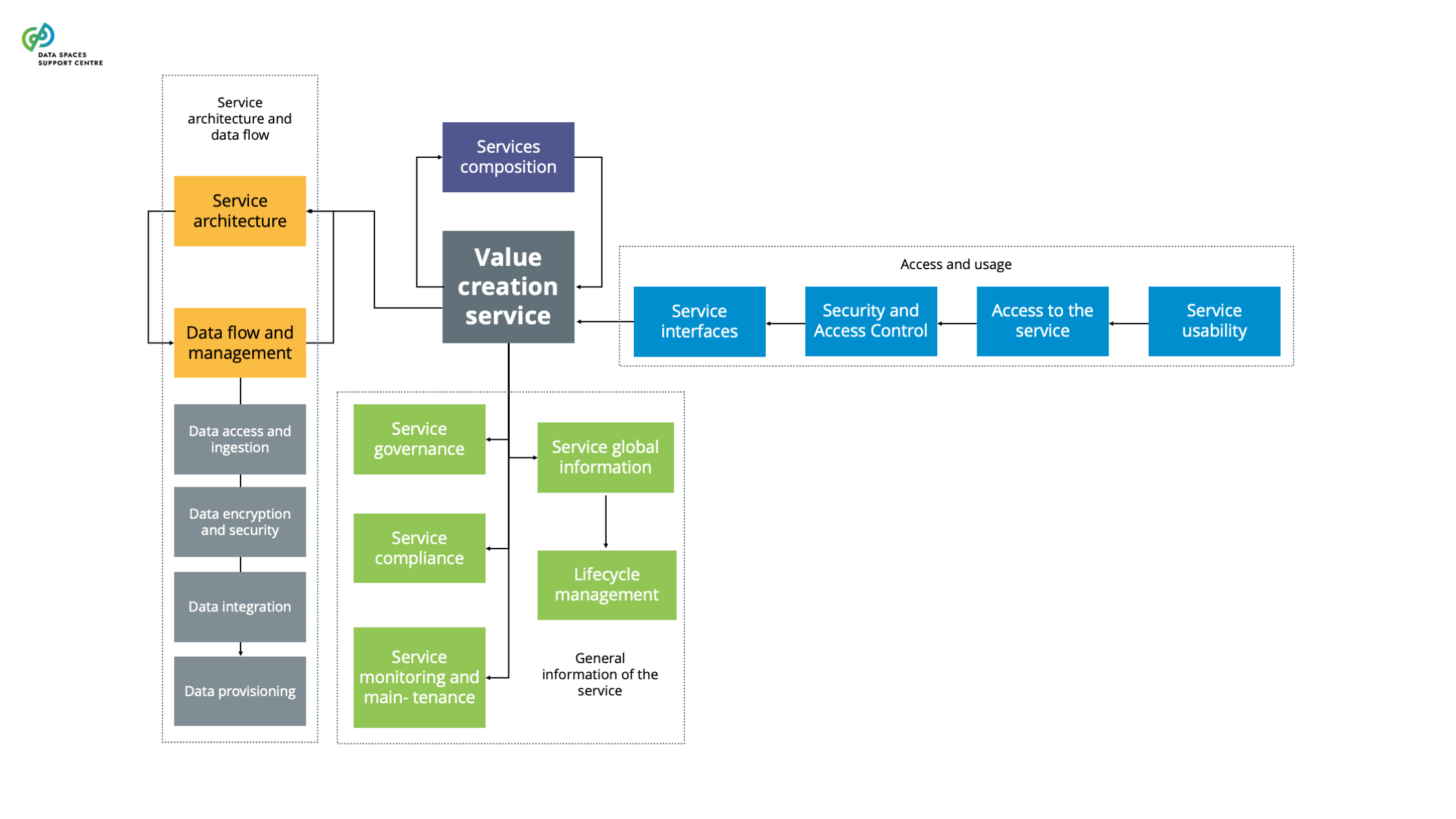

| Deployment And Use Of Services

Source (vsn) : Value creation services (bv20)

|

Deployment models suitable for the data space, depending on objectives, scalability and operational requirements (cloud-native architectures, hybrid cloud solutions, in-premises, serverless deployment)Define and adhere to Service Level Agreements that specify service availability, performance metrics, and support response times. Consider the SLA baseline of the specific service.Intuitive user interfaces (UI) and user experience design to make services easy to use and navigate |

| Development Cycle

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

The sequence of stages that a data space initiative passes through during its progress and growth. In each stage, the initiative has different needs and challenges, and when progressing through the stages, it evolves regarding knowledge, skills and capabilities. |

| Development Processes

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

A set of essential processes the stakeholders of a data space initiative conduct to establish and continuously develop a data space throughout its development cycle.

Note : This term was automatically generated as a synonym for: data-space-development-processes

|

| Digital Europe Programme

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

An EU funding programme that funds several data space related projects, among other topics. The programme is focused on bringing digital technology to businesses, citizens and public administrations. |

| DMA Gatekeeper

Source (vsn) : Regulatory Compliance (bv20)

|

an undertaking providing core platform services, designated by the European Commission if:

it has a significant impact on the internal market;it provides a core platform service which is an important gateway for business users to reach end users; andit enjoys an entrenched and durable position, in its operations, or it is foreseeable that it will enjoy such a position in the near future (art. 3 (1) DMA). An undertaking shall be presumed to satisfy the respective requirements in paragraph 1:

as regards paragraph 1, point (a), where it achieves an annual Union turnover equal to or above EUR 7,5 billion in each of the last three financial years, or where its average market capitalisation or its equivalent fair market value amounted to at least EUR 75 billion in the last financial year, and it provides the same core platform service in at least three Member States;as regards paragraph 1, point (b), where it provides a core platform service that in the last financial year has at least 45 million monthly active end users established or located in the Union and at least 10 000 yearly active business users established in the Union, identified and calculated in accordance with the methodology and indicators set out in the Annex;as regards paragraph 1, point (c), where the thresholds in point (b) of this paragraph were met in each of the last three financial years. (art. 3 (2) DMA).

|

| DSSC Asset

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

A sustainable open resource that is developed and governed by the Data Spaces Support Centre. The assets can be used to develop, deploy and operationalise data spaces and to enable knowledge sharing around data spaces. The DSSC also develops and executes strategies to provide continuity for the main assets beyond the project funding. |

| DSSC Glossary

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

A limited set of data spaces related terms and corresponding descriptions. Each term refers to a concept, and the term description contains a criterion that enables people to determine whether or not something is an instance (example) of that concept. |

| DSSC Toolbox

Source (vsn) : 10 DSSC Specific Terms (bv30)

|

A catalogue of data space component implementations curated by the Data Space Support Centre. |

| Dynamic Capabilities (7)

Source (vsn) : Examples (bv20)

|

The governance authority is in constant discussion with the service providers and gives feedback on whether it is necessary to alter or change the business model.The board looks for ways in which to break even. There is no structural process in place to evaluate and monitor the business model.No structural process for innovating the business model is in placeThere is a board and a supervisory board, and there are three documents which define the governance of the data space:Statutes or Articles of Association of SCSNAccession AgreementCode of Conduct |

| EIDAS 2 Regulation

Source (vsn) : Identity & Attestation Management (bv30)

|

It is an updated version of the original eIDAS regulation, which aims to further enhance trust and security in cross-border digital transactions with the EU. |

| EIDAS Regulation

Source (vsn) : Identity & Attestation Management (bv30)

|

The EU Regulation on electronic identification and trust services for electronic transactions in the internal market |

| Enabling Services

Source (vsn) : 4 Data Space Services (bv30)

|

Refer mutually to facilitating services and participant agent services, hence the technical services that are needed to enable trusted data transaction in data spaces. |

| European Data Innovation Board

Source (vsn) : 11 Foundation of the European Data Economy Concepts (bv20)

|

The expert group established by the Data Governance Act (DGA) to assist the European Commission in the sharing of best practices, in particular on data intermediation, data altruism and the use of public data that cannot be made available as open data, as well as on the prioritisation of cross-sectoral interoperability standards, which includes proposing guidelines for common European data spaces (DGA Article 30 ). The European Data Innovation Board (EDIB) will have additional competencies under the Data Act. |

| European Data Innovation Board

Source (vsn) : 11 Foundation of the European Data Economy Concepts (bv30)

|

The expert group established by the Data Governance Act (DGA) to assist the European Commission in the sharing of best practices, in particular on data intermediation, data altruism and the use of public data that cannot be made available as open data, as well as on the prioritisation of cross-sectoral interoperability standards, which includes proposing guidelines for Common European Data Spaces (DGA Article 30 ). The European Data Innovation Board received additional additional assignments under the Data Act (DA Article 42 ). |

| European Digital Identification (EUDI) Wallet

Source (vsn) : Identity & Attestation Management (bv30)

|

The European Digital Identity Regulation introduces the concepts of EU Digital Identity Wallets. They are personal digital wallets that allow citizens to digitally identify themselves, store and manage identity data and official documents in electronic format. These documents may include a driving licence, medical prescriptions or education qualifications. |

| European Single Market For Data

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

A genuine single market for data – open to data from across the world – where personal and non-personal data, including sensitive business data, are secure and businesses also have easy access to high-quality industrial data, boosting growth and creating value.

Explanatory Text : Source: European strategy for data

|

| European Strategy For Data

Sources (vsn) :

- 11 Foundation of the European Data Economy Concepts (bv20)

- 11 Foundation of the European Data Economy Concepts (bv30)

|

A vision and measures ( a strategy ) that contribute to a comprehensive approach to the European data economy, aiming to increase the use of, and demand for, data and data-enabled products and services throughout the Single Market. It presents the vision to create a European single market for data. |

| Evidence

Source (vsn) : Trust Framework (bv30)

|

Evidence can be included by an issuer to provide the verifier with additional supporting information in a verifiable credential (ref. Verifiable Credentials Data Model v2.0 (w3.org) ) |

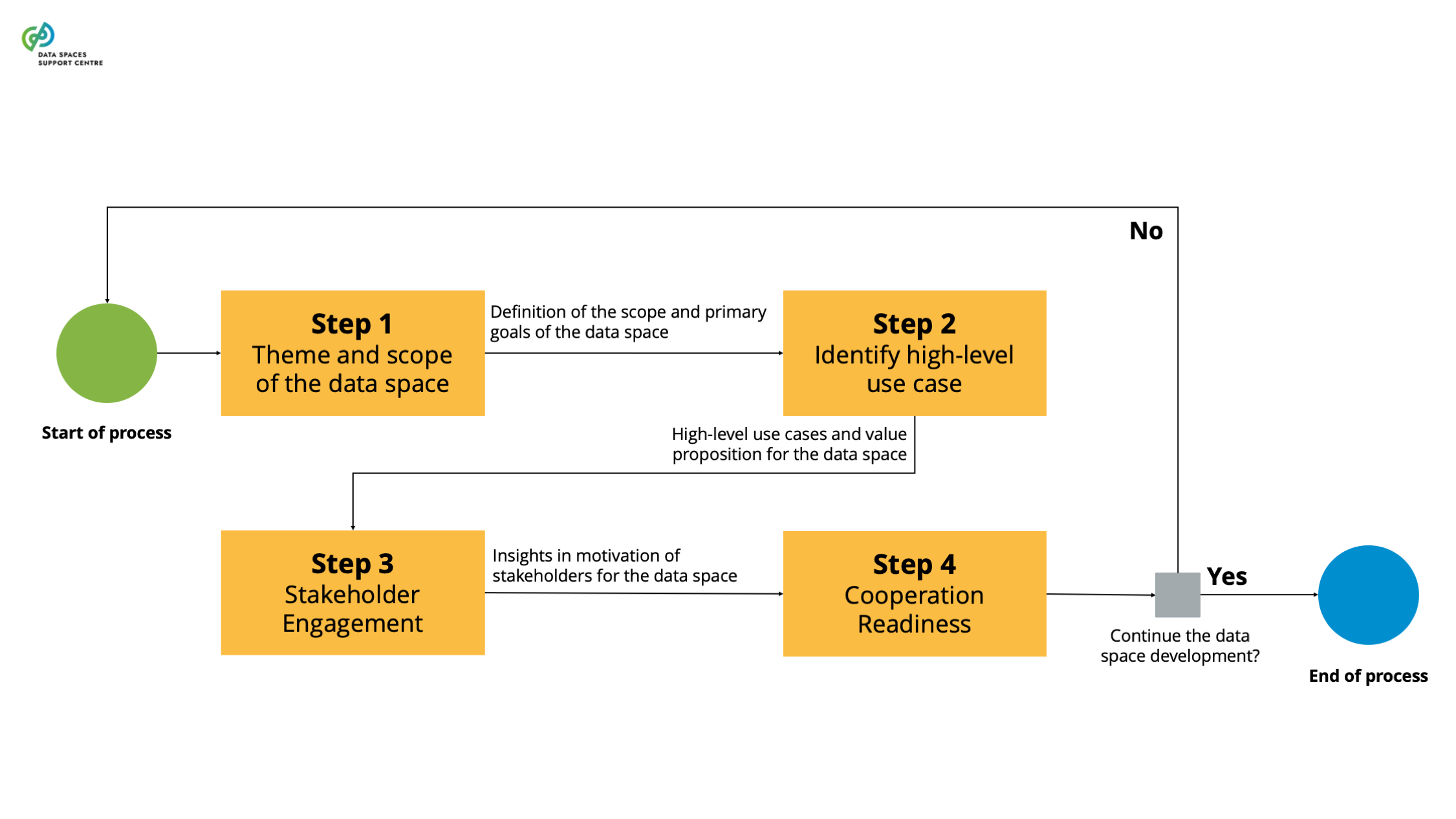

| Exploratory Stage

Source (vsn) : 3 Evolution of Data Space Initiatives (bv30)

|

The stage in the development cycle in which a data space initiative starts. Typically, in this stage, a group of people or organisations starts to explore the potential and viability of a data space. The exploratory activities may include, among others, identifying and attracting interested stakeholders, collecting requirements, discussing use cases or reviewing existing conventions or standards. |

| Facilitating Services

Source (vsn) : 4 Data Space Services (bv30)

|

Services which facilitate the interplay of participants in a data space, enabling them to engage in (commercial) data-sharing relationships of all sorts and shapes.

Explanatory Text : They are sometimes also called ‘federation services’.

|

| FAIR Principles

Source (vsn) : Regulatory Compliance (bv20)

|

a collection of guidelines by which to improve the Findability, Accessibility, Interoperability, and Reusability of data objects. These principles emphasize discovery and reuse of data objects with minimal or no human intervention (i.e. automated and machine-actionable), but are targeted at human entities as well ( Common Fund Data Ecosystem Documentation) .

Explanatory Text : In 2016, the ‘FAIR Guiding Principles for scientific data management and stewardship’ were published in Scientific Data. The authors intended to provide guidelines to improve the Findability, Accessibility, Interoperability, and Reuse of digital assets. The principles emphasise machine-actionability (i.e., the capacity of computational systems to find, access, interoperate, and reuse data with none or minimal human intervention) because humans increasingly rely on computational support to deal with data as a result of the increase in volume, complexity, and creation speed of data (GO FAIR Initiative)

|

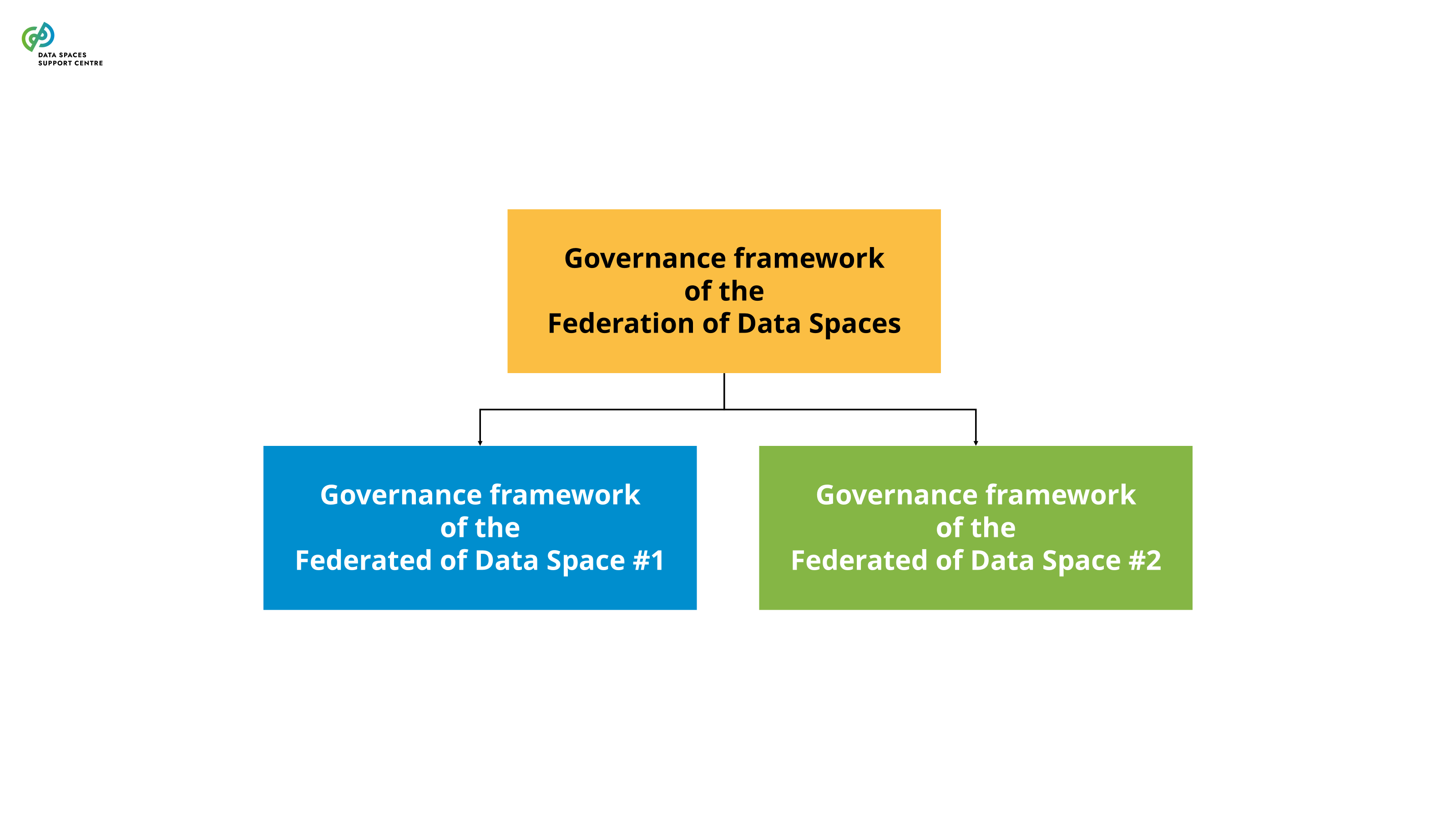

| Federated Data Spaces

Sources (vsn) :

- Data Exchange (bv20)

- Data Exchange_ (bv30)

|

A data space that enables seamless data transactions between the participants of multiple data spaces based on agreed common rules, typically set in a governance framework.

Explanatory Texts :

- The definition of a federation of data spaces is evolving in the data space community.

- A federation of data spaces is a data space with its own governance framework, enabled by a set of shared services (federation and value creation) of the federated systems, and participant agent services that enable participants to join multiple data spaces with a single onboarding step.

|

| Federation Services

Source (vsn) : 4 Data Space Services (bv20)

|

Services which facilitate the interplay of participants in a data space, enabling them to engage in (commercial) data-sharing relationships of all sorts and shapes. They perform an intermediary role in the data space. |

| Finite Data

Sources (vsn) :

- Data Exchange (bv20)

- Data Exchange_ (bv30)

|

Data that is defined by a finite set, such as a fixed dataset. |

| First-party Conformity Assessment Activity

Source (vsn) : Identity & Attestation Management (bv30)

|

conformity assessment activity that is performed by the person or organization that provides or that is the object of conformity assessment (ref. ISO/IEC 17000:2020(en), Conformity assessment — Vocabulary and general principles ) |

| Functionality

Source (vsn) : 1 Key Concept Definitions (bv30)

|

A specified set of tasks that are critical for operating a data space and that can be associated with one or more data space roles.

Explanatory Text : The data space governance framework specifies the data space functionalities and associated roles. Each functionality and associated role consist of rights and duties for performing tasks related to that functionality.

Note : This term was automatically generated as a synonym for: data-space-functionality

|

| Gatekeepers

Source (vsn) : Types of Participants (Participant as a Trigger) (bv20)

|

The Digital Markets Act defines gatekeepers as undertakings providing so-called “core platform services”, such as online search engines, social networking services, video-sharing platform services, number-independent interpersonal communications services, operating systems, web browsers, etc. According to Art. 3 (1) DMA, for an undertaking to be designated by the European Commission as a “gatekeeper”, it has to have a significant impact on the internal market; provide a core platform service which is an important gateway for business users to reach end users; and it enjoys an entrenched and durable position in its operations, or it is foreseeable that it will enjoy such a position in the near future. It also has to meet the requirements regarding an annual turnover above the threshold determined by the regulation. So far, the European Commission has designated the following gatekeepers: Alphabet, Amazon, Apple, Booking, ByteDance, Meta, and Microsoft. This position is determined in relation to a specific core platform service (for instance, Booking has been designated a gatekeeper for its online intermediation service “ http://Booking.com ” ). While the DMA does not aim to establish a framework for data sharing, it challenges the “data monopoly” of gatekeepers. Specific data-related obligations addressed to gatekeepers that may be relevant in the context of a data space include:Ban on data combination - For example, combining personal data from one core platform service with personal data from any further core platform services, or from any other services provided by the gatekeeper or with personal data from third-party services (art. 5(2) (b) DMA);Data silos - Prohibition to use, in competition with business users, not publicly available data generated or provided by those business users in the context of their use of the relevant core platform services (art. 6(2) DMA);Data portability - Obligation to provide end users and third parties authorised by an end user with effective portability of data provided by the end user or generated through the activity of the end user in the context of the use of the relevant core platform service (Article 6(9) DMA);Access to data generated by users - Obligation to provide business users and third parties authorised by a business user access and use of aggregated/non-aggregated data, including personal data, that is provided for or generated in the context of the use of the relevant core platform services (Article 6(10) DMA);Access search data for online search engines - Providing online search engines fair, reasonable and non-discriminatory terms to ranking, query, click and view data in relation to free and paid search generated by end users on its online search engines (Article 6(11) DMA). More information about the data-sharing obligations of the gatekeepers can be found here: data_sharing_obligations_under_the_dma_-_challenges_and_opportunities_-_may24.pdf (informationpolicycentre.com) |

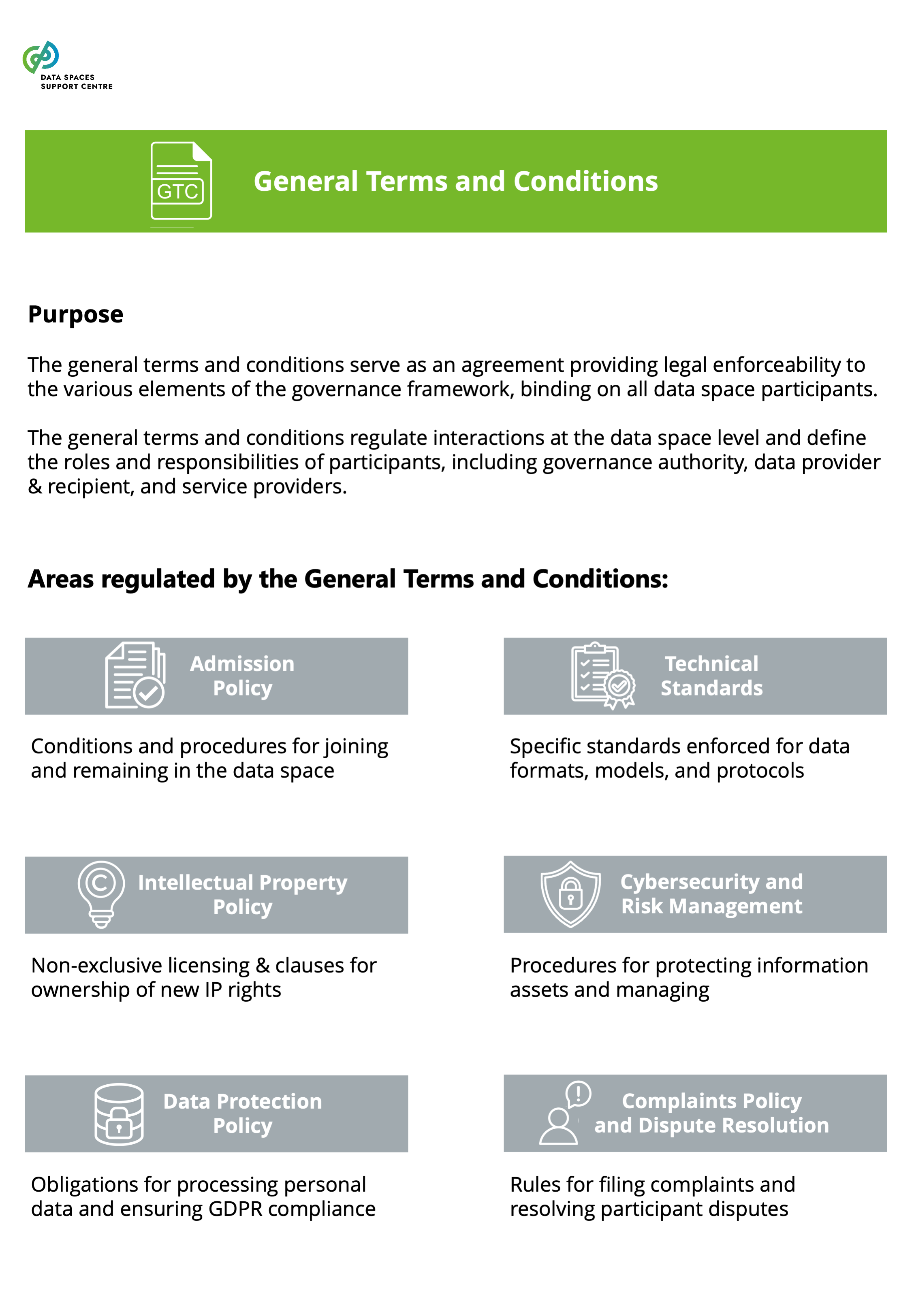

| General Terms & Conditions

Source (vsn) : Contractual Framework (bv20)

|